Motion and orientation access in Apple iOS 12.2 Safari determines how far web pages can go with interactive AR/VR experiences. This in-depth look explores the augmented reality (AR) and virtual reality (VR) features available within Safari and how motion and orientation data is handled and used. We’ll examine the underlying APIs, potential use cases, and limitations, to give a practical understanding of this technology.

From an overview of AR/VR capabilities in iOS 12.2 Safari to a detailed analysis of the technical specifications and architecture, this article shows how motion and orientation data lets developers build immersive web experiences. The integration with Safari, the data formats used, and the historical context of AR/VR in iOS browsers are all key parts of the discussion.

Overview of iOS 12.2 Safari AR/VR Capabilities

iOS 12.2 Safari offers limited but intriguing augmented reality (AR) and virtual reality (VR) capabilities. It is not a VR platform: Safari on iOS 12.2 does not implement WebVR or WebXR. What it does offer is AR Quick Look (added in iOS 12), which opens 3D models from a web page in an AR viewer, plus the standard motion and orientation events that tilt-driven and VR-style pages rely on. Together these extended the reach of web-based applications and highlighted the potential for immersive web experiences, within the constraints of the platform.

ARKit and Vision Framework Integration

ARKit, Apple’s native AR framework, handles spatial understanding and world tracking, while the Vision framework provides image recognition and analysis. Web pages cannot call either framework directly. Safari’s ARKit-backed feature is AR Quick Look: when a user taps a link to a USDZ model, Safari hands the file to a system viewer that uses ARKit to place the model in the user’s real-world surroundings. Vision is not exposed to web content.

This integration, though limited, demonstrated a significant step towards making AR experiences more accessible through web browsers.

Safari Browser Interaction

The AR features in iOS 12.2 Safari work in conjunction with the browser. Users open AR experiences directly from a webpage, typically by tapping a model preview linked with `rel="ar"`, which launches AR Quick Look on top of the page. This allows a smooth transition between browsing and an AR view, and shows how online content can be enriched with real-world context.
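Apple’s WebKit guidance describes an AR Quick Look link as an `<a rel="ar">` element whose only child is an image preview, with support detected through `relList.supports("ar")`. The sketch below builds that markup as a string and wraps the feature check. The function names and example paths are hypothetical, and the model is assumed to be a USDZ file.

```typescript
// Sketch only: helpers for AR Quick Look links in Safari on iOS 12 and later.
// Assumptions: the model URL points at a USDZ file; all names and paths are hypothetical.

interface AnchorLike {
  relList?: { supports?: (token: string) => boolean };
}

/** Escapes characters that would break out of a double-quoted HTML attribute. */
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

/** Builds <a rel="ar"> markup whose only child is the preview image. */
function arQuickLookMarkup(modelUrl: string, previewUrl: string, alt: string): string {
  return (
    `<a rel="ar" href="${escapeAttr(modelUrl)}">` +
    `<img src="${escapeAttr(previewUrl)}" alt="${escapeAttr(alt)}">` +
    `</a>`
  );
}

/** Feature check: true when the anchor's relList reports support for "ar". */
function supportsArQuickLook(createAnchor: () => AnchorLike): boolean {
  const relList = createAnchor().relList;
  if (!relList || typeof relList.supports !== "function") {
    return false;
  }
  return relList.supports("ar");
}
```

In a page, `supportsArQuickLook(() => document.createElement("a"))` decides whether to insert the AR link or fall back to an ordinary 3D or image preview.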

Impact on User Experience and Web Development

The impact on user experience was limited by the early stage of these features and by the restrictions iOS 12.2 imposed; most notably, motion and orientation events are turned off by default. Nevertheless, these initial AR/VR capabilities within Safari sparked interest in immersive web experiences. Developers could start experimenting with AR elements in web applications, showcasing the potential of the web as a platform for interactive experiences.

This innovation hinted at a future where websites could dynamically interact with the user’s physical surroundings.


Key Differences Between AR and VR Experiences in iOS 12.2 Safari

| Feature | Augmented Reality (AR) | Virtual Reality (VR) |
|---|---|---|
| Environment | Overlays digital content onto the real world. The user’s physical surroundings are still visible. | Creates a completely immersive, computer-generated environment. The user is fully immersed in the virtual world. |
| User Interaction | Interaction is with both the digital and physical world. User interaction often involves physical movements and gestures. | Interaction is entirely within the virtual world. Interaction often relies on controllers or other input devices. |
| Accessibility | Potentially more accessible to a wider audience, as it leverages existing physical space. | Can be less accessible due to the need for specialized equipment. |
| Use Cases | Interactive product demonstrations, virtual try-ons, and location-based experiences. | Immersive gaming, educational simulations, and architectural walkthroughs. |

Motion and Orientation Access in iOS 12.2 Safari

iOS 12.2 Safari’s AR/VR capabilities rely heavily on accurate motion and orientation data to create immersive experiences. This allows developers to build applications that respond dynamically to the user’s movements and position, enhancing realism and interactivity. Integrating these features into the browser expands the potential for AR/VR applications beyond dedicated apps. Safari, building on the underlying iOS functionality, lets developers access and interpret motion and orientation data from the device’s sensors.

This access is carefully managed to protect user privacy. In iOS 12.2, Safari’s motion and orientation events are disabled by default and only fire after the user turns on Settings > Safari > Motion & Orientation Access. That opt-in setting defines the controlled environment in which developers build their experiences.

Motion and Orientation Data Handling

Safari obtains motion and orientation data from the device’s built-in sensors: accelerometers, gyroscopes, and magnetometers, which report the device’s movement and orientation in space. iOS combines and filters these raw readings before the browser sees them, for example separating gravity from user-generated acceleration, so that pages receive a stable and consistent representation.

Data Access Mechanisms

Pages access motion and orientation data through standard JavaScript events defined by the W3C: `deviceorientation` and `devicemotion`, delivered as `DeviceOrientationEvent` and `DeviceMotionEvent` objects. A page registers a listener on `window`, and Safari dispatches a new event each time iOS delivers fresh sensor data. Because delivery is event-driven, pages never poll the hardware, although handlers that do heavy work on every event can still slow a page down.
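A minimal TypeScript sketch of this event-driven access, assuming `window` as the event target in the browser. `subscribeToOrientation`, `OrientationReading`, and `ListenerTarget` are illustrative names, not part of any Safari API; the small target interface lets the same code run outside a browser.

```typescript
// Sketch only: subscribe to the standard deviceorientation event.
// Assumption: in a browser, `target` is `window`; names here are illustrative.

interface OrientationReading {
  alpha: number | null; // degrees, rotation around the z-axis
  beta: number | null; // degrees, rotation around the x-axis
  gamma: number | null; // degrees, rotation around the y-axis
}

interface ListenerTarget {
  addEventListener(type: string, listener: (event: any) => void): void;
  removeEventListener(type: string, listener: (event: any) => void): void;
}

/** Registers a listener and returns a function that removes it again. */
function subscribeToOrientation(
  target: ListenerTarget,
  onReading: (reading: OrientationReading) => void,
): () => void {
  const listener = (event: OrientationReading): void => {
    // Copy only the fields we need; each value may be null if unavailable.
    onReading({ alpha: event.alpha, beta: event.beta, gamma: event.gamma });
  };
  target.addEventListener("deviceorientation", listener);
  return () => target.removeEventListener("deviceorientation", listener);
}
```

In a page, `const unsubscribe = subscribeToOrientation(window, (r) => console.log(r.beta));` starts receiving readings (if the platform delivers them), and `unsubscribe()` stops. `devicemotion` follows the same pattern with different fields.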

Permissions and User Consent

In iOS 12.2 there is no per-site permission prompt. Access is controlled by a single, global Safari setting (Motion & Orientation Access) that is off by default; while it is off, the events never fire and the page is not told why. Users can switch the setting on or off at any time. iOS 13 later introduced a per-site prompt instead, which pages trigger by calling `DeviceOrientationEvent.requestPermission()` or `DeviceMotionEvent.requestPermission()` from a user gesture. This approach aligns with Apple’s commitment to user privacy.
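Code that must work across iOS versions has to cope with both models. In iOS 13 and later, Safari exposes `DeviceOrientationEvent.requestPermission()`, which shows a per-site prompt and resolves to `"granted"` or `"denied"`; in iOS 12.2 the function does not exist. The sketch below covers both cases under stated assumptions: `requestOrientationAccess` and `AccessState` are hypothetical names, a missing `requestPermission` is treated as "nothing to ask", and a rejected request is treated as denied.

```typescript
// Sketch only: ask for orientation access where the platform supports asking.
// iOS 12.2 has no requestPermission(); iOS 13+ does. Names are hypothetical.

type AccessState = "granted" | "denied" | "no-prompt-api";

interface PermissionCapable {
  requestPermission?: () => Promise<string>;
}

async function requestOrientationAccess(
  eventCtor: PermissionCapable | undefined,
): Promise<AccessState> {
  if (!eventCtor || typeof eventCtor.requestPermission !== "function") {
    // iOS 12.2 and browsers without a prompt API: events either arrive or they don't.
    return "no-prompt-api";
  }
  try {
    const result = await eventCtor.requestPermission();
    return result === "granted" ? "granted" : "denied";
  } catch {
    // Treat a rejected request (for example, one not made from a user gesture) as denied.
    return "denied";
  }
}
```

In a page, the call belongs inside a tap or click handler, for example `const state = await requestOrientationAccess((window as any).DeviceOrientationEvent);`.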

Security Considerations

Security matters when dealing with motion and orientation data. Although the data only describes device movement, it can still reveal information about the user’s activities, and fine-grained sensor readings have been studied as a way to fingerprint devices. In iOS 12.2, Safari’s main safeguard is making the events opt-in through a setting that is off by default; how the data is then used and transmitted is up to each page.

Examples of Developer Usage

Developers can use this data for a range of AR/VR-style applications. In a shopping page, orientation data could rotate a 3D product view as the user tilts the phone; tracking the user’s position as they walk around a room, however, requires camera-based world tracking such as ARKit, which web pages reach only through AR Quick Look. Another example is a VR-style viewer that responds to the user’s head movements when the phone is mounted in a simple headset.

The possibilities are broad, though they are bounded by what rotation and acceleration data can express.
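As an illustration of the product-view idea, here is a sketch that maps `beta` and `gamma` onto a CSS 3D transform. The neutral front-back tilt of 45 degrees (a phone held at a comfortable angle), the 30-degree limit, and the axis mapping are assumptions chosen for the example, not values Safari defines.

```typescript
// Sketch only: map device tilt to a CSS 3D transform for a product view.
// Assumptions (not Safari defaults): a neutral front-back tilt of 45 degrees,
// a 30-degree limit, and this particular axis mapping.

function clamp(value: number, min: number, max: number): number {
  return Math.min(max, Math.max(min, value));
}

/** Returns a CSS transform string; null readings are treated as "no tilt". */
function tiltTransform(
  beta: number | null,
  gamma: number | null,
  neutralBeta = 45,
  maxTiltDeg = 30,
): string {
  // beta: front-back tilt in degrees, measured relative to the neutral pose.
  const x = clamp((beta ?? neutralBeta) - neutralBeta, -maxTiltDeg, maxTiltDeg);
  // gamma: left-right tilt in degrees.
  const y = clamp(gamma ?? 0, -maxTiltDeg, maxTiltDeg);
  return `rotateX(${x}deg) rotateY(${y}deg)`;
}
```

Inside a `deviceorientation` handler, `element.style.transform = tiltTransform(event.beta, event.gamma);` would apply it; clamping keeps the model from flipping when the user tilts the phone sharply.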

Supported Motion and Orientation Sensors

| Sensor | Description |
|---|---|
| Accelerometer | Measures acceleration forces acting on the device, providing information about linear movement. |
| Gyroscope | Measures the device’s rotational speed around its axes, enabling tracking of rotations and angular velocity. |
| Magnetometer | Measures the Earth’s magnetic field, allowing for accurate orientation and heading determination. |

Safari’s Integration with AR/VR and Motion/Orientation Data

iOS 12.2 Safari gives web developers the building blocks for interactive AR/VR experiences that use device motion and orientation data, so web applications can respond dynamically to the user’s physical environment. This integration builds on the hardware of iOS devices, turning them into responsive platforms for dynamic web experiences.


A subset of these devices’ sensor capabilities is available to web developers, enabling new kinds of engaging applications within the browser.

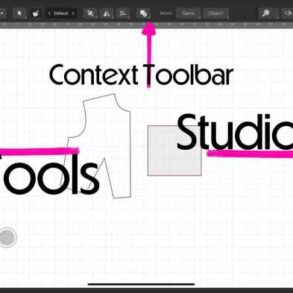

Available APIs and Programming Interfaces

iOS 12.2 Safari exposes motion and orientation data through two standard event types, `DeviceOrientationEvent` and `DeviceMotionEvent`, so developers can build experiences that react to the user’s movements in their physical environment.

These events carry the device’s rotation, acceleration, and rotation rate, a rich set of data for interactive applications.

Data Formats for Motion, Orientation, and Spatial Information

The `DeviceOrientationEvent` and `DeviceMotionEvent` APIs deliver data in specific formats. `DeviceOrientationEvent` describes the device’s orientation relative to a frame fixed to the Earth (beta and gamma are measured against gravity), not relative to the user: alpha (rotation around the z-axis, 0 to 360 degrees), beta (rotation around the x-axis, -180 to 180 degrees), and gamma (rotation around the y-axis, -90 to 90 degrees). On iOS, alpha starts from an arbitrary direction rather than north, which is why Safari also provides the non-standard `webkitCompassHeading` property. `DeviceMotionEvent` delivers more granular information: `acceleration` and `accelerationIncludingGravity` along the x, y, and z axes, `rotationRate`, and the `interval` between samples. Combined, these data points describe the device’s motion in 3D space.

Angles are expressed in degrees, rotation rates in degrees per second, and acceleration in meters per second squared (m/s²).
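The angle conventions can be made concrete. The W3C DeviceOrientation specification defines alpha, beta, and gamma as intrinsic Tait-Bryan angles of type Z-X'-Y'', which gives the rotation matrix R = Rz(alpha) · Rx(beta) · Ry(gamma). The sketch below builds that matrix from degree values and computes the magnitude of an acceleration vector in m/s² (about 9.81 for `accelerationIncludingGravity` on a device at rest). The helper names are illustrative.

```typescript
// Sketch only: turn DeviceOrientationEvent angles (degrees) into a rotation matrix
// using R = Rz(alpha) * Rx(beta) * Ry(gamma), per the W3C DeviceOrientation spec.

type Mat3 = number[][];

const toRadians = (deg: number): number => (deg * Math.PI) / 180;

/** Plain 3x3 matrix product a * b. */
function multiply(a: Mat3, b: Mat3): Mat3 {
  return a.map((row) => b[0].map((_, j) => row.reduce((sum, value, k) => sum + value * b[k][j], 0)));
}

function rotationMatrix(alphaDeg: number, betaDeg: number, gammaDeg: number): Mat3 {
  const [a, b, g] = [alphaDeg, betaDeg, gammaDeg].map(toRadians);
  const rz: Mat3 = [
    [Math.cos(a), -Math.sin(a), 0],
    [Math.sin(a), Math.cos(a), 0],
    [0, 0, 1],
  ];
  const rx: Mat3 = [
    [1, 0, 0],
    [0, Math.cos(b), -Math.sin(b)],
    [0, Math.sin(b), Math.cos(b)],
  ];
  const ry: Mat3 = [
    [Math.cos(g), 0, Math.sin(g)],
    [0, 1, 0],
    [-Math.sin(g), 0, Math.cos(g)],
  ];
  return multiply(multiply(rz, rx), ry);
}

/** Length of an acceleration vector in m/s^2; null components count as 0. */
function magnitude(v: { x: number | null; y: number | null; z: number | null }): number {
  return Math.hypot(v.x ?? 0, v.y ?? 0, v.z ?? 0);
}
```

A matrix form like this is convenient for WebGL scenes, where it can be passed to a camera or model transform instead of applying three separate rotations.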

Building Interactive Web Experiences

Developers can use these events to create interactive web experiences that respond to user movement. For instance, a web-based game could adjust its gameplay based on the device’s orientation, or a VR-style viewer could follow the user’s head movements when the phone is worn in a headset. The process involves listening for `deviceorientation` and `devicemotion` events, processing the data, and updating the page’s elements accordingly.

This real-time responsiveness enables engaging and interactive experiences.
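One common way to keep this loop responsive is to separate event handling from rendering: store only the newest reading in the event handler and draw at most once per animation frame. A sketch, assuming `requestAnimationFrame` as the scheduler in the browser; `LatestValueRenderer` is a hypothetical helper, not a browser API.

```typescript
// Sketch only: render at most once per frame, always with the newest reading.
// Assumption: in a browser, `schedule` wraps requestAnimationFrame.
// LatestValueRenderer is a hypothetical helper, not a browser API.

type FrameScheduler = (callback: () => void) => void;

class LatestValueRenderer<T> {
  private latest: T | undefined = undefined;
  private pending = false;
  private readonly render: (value: T) => void;
  private readonly schedule: FrameScheduler;

  constructor(render: (value: T) => void, schedule: FrameScheduler) {
    this.render = render;
    this.schedule = schedule;
  }

  /** Cheap enough to call from every sensor event; never renders directly. */
  push(value: T): void {
    this.latest = value;
    if (this.pending) {
      return; // A frame is already scheduled; it will pick up this value.
    }
    this.pending = true;
    this.schedule(() => {
      this.pending = false;
      const current = this.latest;
      if (current !== undefined) {
        this.render(current);
      }
    });
  }
}
```

In a page, pass `(cb) => requestAnimationFrame(() => cb())` as the scheduler and call `renderer.push(...)` from the `deviceorientation` handler; sensor events that arrive between frames simply overwrite each other.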

Different Approaches to AR/VR Implementation

Multiple approaches exist for adding AR/VR features within Safari. The simplest uses motion and orientation data alone to create basic overlays, adjusting the position and orientation of virtual elements as the user moves. Another combines that data with the live camera feed, which Safari on iOS has exposed through `getUserMedia` since iOS 11, for a more convincing AR view. A more advanced approach integrates external JavaScript AR libraries for richer features, and for placing 3D models in the real world, pages can hand off to AR Quick Look.

The choice of approach depends on the complexity and functionality required for the web application.
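For the camera-based approach, the rear camera is normally requested with a `facingMode` of `"environment"`. The sketch below wraps that request; `openRearCamera` and `CameraConstraints` are hypothetical names, and `getUserMedia` is injected so that in a page it would be `(c) => navigator.mediaDevices.getUserMedia(c)`. It assumes the page is served over HTTPS, which Safari requires for camera access.

```typescript
// Sketch only: request the rear camera for a camera-based AR overlay.
// Assumptions: the page is served over HTTPS; `getUserMedia` is injected, so in a
// page it would be (c) => navigator.mediaDevices.getUserMedia(c). Names are hypothetical.

interface CameraConstraints {
  audio: boolean;
  video: { facingMode: string };
}

function rearCameraConstraints(): CameraConstraints {
  // "environment" asks for the camera facing away from the user.
  return { audio: false, video: { facingMode: "environment" } };
}

/** Resolves to the stream, or null if the request fails or is declined. */
async function openRearCamera<S>(
  getUserMedia: (constraints: CameraConstraints) => Promise<S>,
): Promise<S | null> {
  try {
    return await getUserMedia(rearCameraConstraints());
  } catch {
    // Declined by the user, no matching camera, or an insecure context.
    return null;
  }
}
```

On iOS, the resulting stream is usually attached to a `<video>` element with the `playsinline` attribute so it plays inside the page rather than full screen, with virtual elements layered on top.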

Common Errors Encountered

- Inaccurate Data: Motion and orientation data can sometimes be affected by environmental factors, leading to inaccurate readings. Calibration issues or interference from nearby electronic devices can also contribute to inaccuracies.

- Performance Issues: Processing and updating large amounts of motion and orientation data can impact the performance of the web application. Optimization techniques are crucial to ensure a smooth user experience, especially in complex AR/VR scenarios.

- Compatibility Issues: Support differs across iOS versions. In iOS 12.2 the events are off by default behind a Safari setting, and from iOS 13 pages must request permission explicitly, so code written for one version can silently receive no data on another. Thorough testing across devices and iOS versions is vital.
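For jittery readings, a light exponential moving average is one option. Angles need care because they wrap around: a reading of 10 degrees after 350 degrees is a 20-degree change, not a 340-degree one. This sketch smooths along the shortest arc and suits `alpha`, which runs from 0 to 360 degrees. The smoothing factor of 0.2 is an assumption to tune per application, and `AngleSmoother` is a hypothetical helper. Smoothing reduces jitter at the cost of a little lag; it does not fix calibration errors.

```typescript
// Sketch only: exponential moving average for wrapping angles (degrees).
// Assumption: a smoothing factor of 0.2 by default; tune it per application.

/** Signed shortest rotation from one angle to another, in [-180, 180). */
function shortestDelta(fromDeg: number, toDeg: number): number {
  return ((((toDeg - fromDeg) % 360) + 540) % 360) - 180;
}

class AngleSmoother {
  private value: number | null = null;
  private readonly factor: number;

  constructor(factor = 0.2) {
    this.factor = factor;
  }

  /** Feeds one reading and returns the smoothed angle in [0, 360). */
  next(readingDeg: number): number {
    if (this.value === null) {
      // First reading: start from it directly, normalized into [0, 360).
      this.value = ((readingDeg % 360) + 360) % 360;
    } else {
      // Move a fraction of the way along the shortest arc, then re-normalize.
      const moved = this.value + this.factor * shortestDelta(this.value, readingDeg);
      this.value = ((moved % 360) + 360) % 360;
    }
    return this.value;
  }
}
```

A smaller factor gives steadier output but reacts more slowly to real movement, which matters for fast-paced games.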

Practical Use Cases and Limitations

Safari’s integration with AR/VR in iOS 12.2 presents interesting possibilities, but also limitations developers need to be aware of. Access to motion and orientation data is a significant building block, yet running inside the browser imposes constraints that developers must consider. This section explores potential use cases, limitations, and security considerations for AR/VR experiences launched within Safari.

However, the limitations inherent in the browser environment and the security considerations are crucial for developing robust and reliable applications. The inherent trade-offs between browser-based AR/VR applications and native counterparts need careful analysis.

Real-World Use Cases

Web-based AR experiences can be useful for interactive product demonstrations, virtual try-ons, and educational applications. For example, a furniture retailer could let customers place a 3D model of a sofa in their living room through AR Quick Look, or an educational site could let students place a 3D anatomical model on a tabletop and walk around it.

Limitations of Motion and Orientation Data Access

Safari’s access to motion and orientation data is fundamentally different from what native AR apps get. Web pages receive only rotation and acceleration values, without ARKit’s camera-based world tracking, which restricts the accuracy and responsiveness of complex AR experiences. Virtual content can drift or lag in real-time interactions, and tracking precision suffers most in complex or fast-moving scenarios.

Impact on Complex AR/VR Applications

Developing complex AR/VR experiences within Safari necessitates a careful consideration of the limitations. Sophisticated tracking, object recognition, and real-time rendering within a browser environment can be challenging. Furthermore, the inability to access the full range of device hardware capabilities might necessitate compromises in terms of visual fidelity or interactivity.

Security Considerations and Privacy Concerns

Security matters in any AR/VR application. Reading motion and orientation data in a browser raises concerns about how the user’s data is stored and shared, and about potential misuse. Developers should serve pages over HTTPS, collect only the data a feature actually needs, and avoid sending raw sensor streams to servers unless necessary, in line with applicable privacy guidelines.
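One concrete privacy-minded practice is to reduce the precision of readings before they leave the device, since fine-grained sensor values carry more device-specific detail. A sketch, assuming the server only needs whole-degree values; `coarsen`, `coarsenReading`, and `AngleReading` are hypothetical names and the 1-degree step is illustrative.

```typescript
// Sketch only: reduce the precision of orientation readings before sending them.
// Assumption: the server-side feature only needs coarse values; the step is illustrative.

interface AngleReading {
  alpha: number | null;
  beta: number | null;
  gamma: number | null;
}

/** Rounds a value to the nearest multiple of `step`; null stays null. */
function coarsen(value: number | null, step: number): number | null {
  return value === null ? null : Math.round(value / step) * step;
}

function coarsenReading(reading: AngleReading, step = 1): AngleReading {
  return {
    alpha: coarsen(reading.alpha, step),
    beta: coarsen(reading.beta, step),
    gamma: coarsen(reading.gamma, step),
  };
}
```

Where possible, sending a derived result (for example, "device tilted left") instead of readings at all limits exposure further.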

Performance Comparison Across Devices

The performance of AR/VR experiences within Safari on different iOS 12.2 devices will vary based on factors like processor speed, graphics capabilities, and sensor accuracy.

| Device | Processor | Graphics | Sensor Accuracy | Expected Performance |
|---|---|---|---|---|
| iPhone XS | A12 Bionic | 4-core Apple GPU | High | Good to excellent performance in most cases |
| iPhone XR | A12 Bionic | 4-core Apple GPU | High | Good performance, potential slight lag in demanding applications |
| iPad Pro (11-inch, 2018) | A12X Bionic | 7-core Apple GPU | High | Excellent performance; suitable for demanding AR/VR tasks |
| iPhone SE (2nd generation) | A13 Bionic | 4-core Apple GPU | Medium | Good performance, but may struggle with very demanding AR/VR applications |

Note: This table provides a general overview; performance varies with application complexity and the user’s environment. The iPhone SE (2nd generation) shipped with iOS 13 and cannot run iOS 12.2, so it is included only for comparison.

Evolution of AR/VR in iOS Safari (Historical Context)

Safari’s support for augmented reality (AR) and virtual reality (VR) experiences has evolved significantly over the years, mirroring broader advances in mobile computing and mixed reality. Early experiments focused on basic interaction and display, while later versions added far more capability. This evolution is the context for understanding what iOS 12.2 changed. The initial stages of AR/VR support in Safari were limited, consisting mainly of experimental implementations and proof-of-concept demonstrations.

This early phase lacked the sophisticated rendering and interaction capabilities of later iterations. New technologies and frameworks, along with improvements in hardware, have since greatly expanded what Safari’s AR/VR features can do.


Early AR/VR Support in Safari

Early iOS versions offered only rudimentary building blocks for AR- and VR-style experiences in Safari. Safari on iOS has never supported browser plugins, so early experiments relied on web technologies: motion and orientation events (available since iOS 4.2), CSS 3D transforms, and later WebGL (added in iOS 8). The resulting experiences were typically less intuitive and interactive than later ones.

Key Changes in iOS 12.2

iOS 12.2 changed how Safari handles motion and orientation data rather than adding new AR/VR features. The `deviceorientation` and `devicemotion` events already existed; what iOS 12.2 added was the Motion & Orientation Access setting under Settings > Safari, turned off by default, so pages receive sensor data only after the user opts in. This gave users more control, but it also meant developers had to detect missing data and explain how to enable the setting. Because these experiences rely on standard web APIs rather than plugins, the same code can still run in other browsers that implement those events.
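Because iOS 12.2 offers no API for checking the Motion & Orientation Access setting, a common workaround is to wait briefly for the first event and assume access is off if none arrives. A sketch under that assumption; `waitForFirstEvent` and `SensorEventTarget` are hypothetical names and the 1000 ms default timeout is an arbitrary choice.

```typescript
// Sketch only: detect whether motion events arrive at all (iOS 12.2 gives no API
// for reading the Motion & Orientation Access setting). The helper name and the
// 1000 ms default timeout are assumptions.

interface SensorEventTarget {
  addEventListener(type: string, listener: () => void): void;
  removeEventListener(type: string, listener: () => void): void;
}

/** Resolves true if an event of `type` arrives before the timeout, else false. */
function waitForFirstEvent(
  target: SensorEventTarget,
  type: string,
  timeoutMs = 1000,
): Promise<boolean> {
  return new Promise<boolean>((resolve) => {
    const onEvent = (): void => {
      clearTimeout(timer);
      target.removeEventListener(type, onEvent);
      resolve(true);
    };
    // No event in time: stop listening and report that data is not arriving.
    const timer = setTimeout(() => {
      target.removeEventListener(type, onEvent);
      resolve(false);
    }, timeoutMs);
    target.addEventListener(type, onEvent);
  });
}
```

In a page, `waitForFirstEvent(window, "devicemotion")` resolving to `false` is a cue to tell users to turn on Settings > Safari > Motion & Orientation Access. `devicemotion` makes a reasonable probe because the specification has it fire at a regular interval.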

Emerging Trends and Future Possibilities

The future of AR/VR in iOS Safari is likely to see a continuation of the trend toward more sophisticated and immersive experiences. As the technology matures and hardware capabilities improve, we can anticipate more seamless blending of the real and virtual worlds. This will likely include more intricate interactions, advanced tracking, and more complex rendering techniques, allowing for a wider array of use cases.

Examples include interactive 3D models in online stores or immersive educational experiences.

Comparison with Other Mobile Browsers

| Feature | iOS 12.2 Safari | Other Mobile Browsers (e.g., Chrome, Firefox) |
|---|---|---|
| Motion and Orientation Access | Standard `DeviceOrientationEvent` and `DeviceMotionEvent`, off by default behind a Safari setting | The same standard events, supported without plugins or extensions |
| ARKit Integration | AR Quick Look for USDZ models via `rel="ar"` links | Limited or no ARKit integration |
| VR Support | No WebVR or WebXR; VR-style pages built with motion events and WebGL | Varying levels of VR support, depending on browser and version |
| Web Standards Compliance | Implements the W3C DeviceOrientation events | Compliance varies across browsers and versions |

The table above highlights how iOS 12.2 Safari differs from other mobile browsers: it relies on the same standard motion and orientation events, adds AR Quick Look as its route to ARKit, and places sensor access behind an opt-in setting.

Evolution of the AR/VR User Interface

The user interface for AR experiences in iOS 12.2 Safari aims for intuitive design and ease of use. Combining motion and orientation data with web-based AR/VR applications allows more natural and responsive interactions, which makes working with virtual objects and environments more engaging.

The user interface prioritizes clarity and simplicity, enabling users to navigate and manipulate AR/VR content without undue complexity.

Technical Specifications and Architecture

iOS 12.2 Safari’s handling of AR/VR and motion/orientation access is a step toward bridging web browsing and augmented reality, and understanding it requires a look at the underlying technical specifications and architecture. Using real-world data in interactive web experiences opens doors to new applications, but it also adds complexity around hardware compatibility and API use. Safari’s AR/VR capabilities in iOS 12.2 rely on a layered approach: web pages use standard web interfaces, while existing iOS frameworks do the work underneath.

This approach prioritizes seamless integration with the broader iOS ecosystem, allowing developers to create AR/VR experiences within a familiar web context.

Accessing AR/VR and Motion/Orientation Data

The core mechanism for accessing AR/VR and motion/orientation data involves a series of well-defined interactions between Safari, iOS, and the device’s hardware. Safari acts as the intermediary, presenting the data to web applications in a standardized format. iOS handles the underlying complexities of sensor data acquisition and processing, ensuring accuracy and reliability. The hardware components, such as accelerometers, gyroscopes, and cameras, provide the raw data streams that are ultimately utilized.

Underlying Architecture of AR/VR Components

Safari does not give web pages a dedicated AR/VR API. Instead, WebKit, Safari’s engine, maps the standard motion and orientation events onto iOS sensor services, and AR Quick Look passes USDZ files to a system viewer built on ARKit. This layering means web applications can use these capabilities without any knowledge of iOS internals.

Interaction Between Safari, iOS, and Hardware

The interaction flow is as follows. A web application registers a listener for `deviceorientation` or `devicemotion` events. In iOS 12.2, Safari delivers these events only if Motion & Orientation Access is turned on. iOS reads the relevant hardware sensors (accelerometer, gyroscope, magnetometer), processes the readings, and hands them to Safari, which dispatches them to the page as events.

This layering keeps sensor access under system and user control, and web pages never touch the hardware directly.

Relevant APIs and Frameworks

A crucial aspect of this integration is the use of specific APIs and frameworks that connect Safari, iOS, and the hardware. Core Motion, the iOS framework for motion data, is widely used for this purpose, and ARKit powers AR Quick Look. Camera-related APIs may also be involved, though Apple does not document exactly which frameworks iOS 12.2 Safari uses internally.

Hardware Requirements for Optimal Performance

| Hardware Component | Requirement for Optimal Performance |
|---|---|
| Processor | A modern processor with sufficient processing power to handle real-time data streams and complex calculations is crucial. |
| Graphics Processing Unit (GPU) | A powerful GPU with dedicated hardware acceleration for rendering AR/VR content and handling complex graphical operations. |
| Motion Sensors | Accurate and responsive accelerometers and gyroscopes are necessary for smooth and precise tracking of motion and orientation. |
| Camera | A high-resolution camera with good image quality and stability is required for capturing and processing augmented reality elements. |

Optimal AR/VR performance depends on the combined capabilities of these hardware components. High-end devices generally offer a better experience, while lower-end devices may exhibit performance limitations or reduced responsiveness.

Wrap-Up

In conclusion, Apple iOS 12.2 Safari’s handling of AR/VR and motion/orientation data marks an important point in mobile web development: capable, standard sensor APIs, now placed behind an opt-in setting. While limitations exist, the potential for innovative applications is large. This exploration maps the capabilities and challenges so developers can use these features effectively and build immersive web experiences.