This Velodyne lidar mapping and self-driving car interview with David Hall delves into the technology driving the autonomous vehicle revolution. It explores Velodyne’s lidar technology, its role in creating 3D maps for self-driving cars, and Hall’s insights on the future of the field. The interview examines the technical intricacies of lidar mapping, from sensor types and data processing to real-world applications and future challenges.

The discussion covers a wide range of topics, including the fundamental principles of lidar operation, the creation of 3D maps from lidar data, and the integration of lidar systems into complete self-driving car platforms. The interview also touches upon the crucial role of sensor fusion and decision-making processes in autonomous vehicles.

Velodyne Lidar Technology Overview

Velodyne lidar is a key technology in the self-driving car industry. Its ability to create highly detailed 3D maps of the environment allows autonomous vehicles to perceive and navigate complex scenarios with high accuracy. This technology is at the forefront of advancements in autonomous navigation, paving the way for safer and more efficient transportation.

Lidar, or Light Detection and Ranging, uses lasers to measure the distance to objects in its surroundings. By precisely measuring the time it takes for a laser pulse to reflect off an object and return, lidar systems can create detailed 3D models of the environment, crucial for autonomous navigation. This is fundamentally different from passive sensor modalities like cameras, which depend on ambient light and are susceptible to issues like shadows and poor visibility.

Lidar Sensor Types and Applications

Velodyne offers various lidar sensor types tailored to different applications in self-driving cars. These sensors are categorized by the number of laser beams and the density of data they collect. Understanding these types helps appreciate the diverse capabilities of lidar.

- High-Resolution, Multi-Beam Sensors: These sensors use multiple lasers to scan the environment rapidly, providing a comprehensive 3D map of the surroundings. They are commonly used for mapping and obstacle detection in autonomous vehicles, enabling them to perceive the road, pedestrians, and other vehicles accurately. These sensors are critical for high-level autonomous driving functions.

- Specialized Sensors for Specific Tasks: Velodyne also creates specialized sensors for particular applications, such as those optimized for specific environments or tasks. For instance, some sensors might be designed for urban environments, focusing on high-density object detection, while others are optimized for highway driving, prioritizing long-range detection of large vehicles. These specialized sensors are crucial for enhancing the robustness and reliability of self-driving systems.

Principles of Lidar Operation

Lidar’s fundamental principle involves emitting laser pulses and measuring the time it takes for the reflected light to return. The distance to an object is directly proportional to the time taken for the pulse to travel there and back. The intensity of the reflected light provides additional information about the object’s surface, such as its reflectivity.

Distance = (Speed of Light × Time of Flight) / 2

This precise measurement, coupled with multiple laser beams, allows the creation of a dense point cloud representing the environment. Sophisticated algorithms then process this point cloud to create a 3D map of the surroundings, essential for navigation and obstacle avoidance.
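
As a minimal sketch of the time-of-flight relation (distance equals the speed of light times the round-trip time, divided by two), the Python snippet below converts a measured round-trip time into a range and then turns a single return into a 3D point. The function names and the axis convention (x forward, y left, z up) are assumptions made for illustration, not Velodyne’s actual interface.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def tof_to_distance(round_trip_time_s):
    """Convert a round-trip time of flight (seconds) into a one-way distance (meters)."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def polar_to_cartesian(distance_m, azimuth_deg, elevation_deg):
    """Convert one laser return (range plus beam angles) into an (x, y, z) point.

    Assumed convention: azimuth is measured in the horizontal plane from the
    x-axis, and elevation is measured upward from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        distance_m * math.sin(el),
    )


# A pulse that returns after 200 nanoseconds, fired 15 degrees to the left
# and 2 degrees below the horizon (hypothetical values).
distance = tof_to_distance(200e-9)
print(round(distance, 2), polar_to_cartesian(distance, 15.0, -2.0))
```

Repeating this conversion for every beam and every rotation angle yields the point cloud described above; a 200-nanosecond round trip corresponds to a range of roughly 30 m.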

Comparison of Velodyne Lidar Sensor Models

The table below highlights key specifications for different Velodyne lidar sensor models, emphasizing their varying capabilities and suitability for diverse applications.

| Model | Point Rate (points/s, single return) | Range (m) | Typical Accuracy (cm) |
|---|---|---|---|
| Velodyne HDL-32E | ~700,000 | 80–100 | ±2 |
| Velodyne HDL-64E | ~1,300,000 | 120 | <2 |
| Velodyne Puck (VLP-16) | ~300,000 | 100 | ±3 |

Note: These are approximate figures based on Velodyne’s published datasheets; actual values vary with configuration, return mode, target reflectivity, and environmental conditions.

Advantages and Disadvantages of Velodyne Lidar

Velodyne lidar offers significant advantages in self-driving applications, including high-resolution 3D mapping, long-range detection, and operation that does not depend on ambient light. Because lidar supplies its own illumination, it perceives objects at night as well as in daylight, although heavy rain, fog, and snow can scatter the laser pulses and degrade its returns.

- Advantages: High accuracy in 3D mapping, enabling precise obstacle detection and avoidance; Independence from ambient lighting, providing consistent data in darkness and in harsh shadows; Long-range detection, allowing vehicles to perceive objects at considerable distances, ensuring safety in various traffic situations; High resolution, capturing detailed information about objects and their surroundings, enabling more nuanced decision-making by the autonomous vehicle.

- Disadvantages: High cost compared to other sensor technologies; Limited range in some models, requiring complementary sensors for comprehensive perception; Degraded performance in heavy rain, fog, or snow, and potential interference from strong sunlight or highly reflective surfaces; Processing power needed for lidar data can be substantial, which requires efficient algorithms and robust hardware.

Lidar Mapping in Autonomous Vehicles

Autonomous vehicles rely heavily on precise and detailed maps of their surroundings. Lidar, with its ability to capture highly accurate 3D point clouds, plays a critical role in generating these maps. This process, lidar mapping, is a crucial component of autonomous navigation and object recognition. The maps are not static; they are updated to reflect the ever-changing environment.

Creating these maps involves sophisticated data processing techniques. This process goes beyond simply recording the data; it necessitates rigorous filtering and interpretation to generate a usable representation of the world. This comprehensive approach allows the vehicle to navigate complex environments, recognize obstacles, and plan safe trajectories.

Point Cloud Processing and Filtering

Lidar systems generate a dense collection of points, often referred to as a point cloud. These raw point clouds are typically noisy and contain irrelevant data, such as reflections and spurious readings. Effective point cloud processing is essential to extract meaningful information. Filtering techniques are applied to remove erroneous or irrelevant points, resulting in a more accurate and manageable representation of the environment.

Several filtering methods are employed to improve the quality of the point cloud. These include:

- Noise Removal: Techniques like statistical outlier removal and median filtering identify and eliminate noisy points that do not conform to the expected spatial distribution of the environment. This ensures that the point cloud accurately represents the true geometry of the environment.

- Range Filtering: This method eliminates points that are outside a predefined range. This step is crucial to remove points that are too far or too close to the sensor, focusing on the relevant portion of the environment.

- Ground Filtering: Algorithms identify and remove ground points, separating them from the other elements in the scene. This is vital for accurately representing the terrain and simplifying the representation of the environment.

- Object Segmentation: More advanced filtering techniques, often based on machine learning, group similar points into segments, representing objects like vehicles, pedestrians, or buildings. This process enables the vehicle to better recognize and classify objects in the environment.
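
To make the range and ground filtering steps concrete, here is a minimal Python sketch. It assumes points are (x, y, z) tuples in the sensor frame and that the road is flat at a known height below the sensor; the function names, the thresholds, and the 1.8 m mounting height are hypothetical. Production systems usually fit a ground plane rather than use a fixed height.

```python
import math


def range_filter(points, min_range_m=1.0, max_range_m=100.0):
    """Keep points whose distance from the sensor origin lies within [min, max]."""
    kept = []
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if min_range_m <= r <= max_range_m:
            kept.append((x, y, z))
    return kept


def split_ground(points, ground_z_m=-1.8, tolerance_m=0.2):
    """Split points into (ground, non_ground) under a flat-ground assumption.

    ground_z_m is the assumed road height in the sensor frame, i.e. the
    negative of a hypothetical 1.8 m sensor mounting height.
    """
    ground, non_ground = [], []
    for p in points:
        if p[2] <= ground_z_m + tolerance_m:
            ground.append(p)
        else:
            non_ground.append(p)
    return ground, non_ground


scan = [
    (0.2, 0.0, 0.0),    # too close: likely a return from the vehicle itself
    (10.0, 0.0, -1.8),  # road surface
    (10.0, 2.0, 0.0),   # obstacle above the road
    (500.0, 0.0, 0.0),  # beyond the trusted range
]
ground, obstacles = split_ground(range_filter(scan))
print(ground, obstacles)
```

Filtering by range first keeps the ground step from spending time on points that would be discarded anyway.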

Data Structures for 3D Maps

Efficient representation of the 3D map is crucial for autonomous vehicle navigation. The chosen data structure significantly impacts the efficiency of map querying and utilization.

- Octrees: Hierarchical data structures, octrees partition the 3D space into smaller and smaller regions. This hierarchical structure allows for efficient querying of specific regions, making it well-suited for large-scale maps.

- Kd-trees: Another hierarchical structure, Kd-trees divide the space into regions based on a set of axis-aligned planes. Kd-trees are known for efficient nearest neighbor searches, which are vital for collision avoidance and object detection.

- Point Clouds with Metadata: Storing the point cloud itself along with relevant metadata (e.g., timestamps, intensity values, sensor pose) provides additional context. This metadata allows for more complex analysis and dynamic updates to the map as the environment changes.
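
The octree idea can be sketched in a few dozen lines of Python. This is an illustrative point octree under assumed parameters (a leaf capacity of four points and a minimum cell size), not the structure any particular mapping stack uses; the class and method names are hypothetical.

```python
class Octree:
    """Minimal point octree: a cubic cell splits into 8 children when it overflows."""

    def __init__(self, center, half_size, capacity=4, min_half_size=0.05):
        self.center = center
        self.half_size = half_size
        self.capacity = capacity
        self.min_half_size = min_half_size
        self.points = []
        self.children = None  # becomes a list of 8 Octree nodes after a split

    def _contains(self, p):
        return all(abs(p[i] - self.center[i]) <= self.half_size for i in range(3))

    def _child_index(self, p):
        # One bit per axis, set when the point lies on the positive side.
        return sum(1 << i for i in range(3) if p[i] >= self.center[i])

    def _subdivide(self):
        h = self.half_size / 2.0
        self.children = []
        for idx in range(8):
            c = tuple(
                self.center[i] + (h if (idx >> i) & 1 else -h) for i in range(3)
            )
            self.children.append(Octree(c, h, self.capacity, self.min_half_size))
        # Push the points held by this cell down into the new children.
        old, self.points = self.points, []
        for q in old:
            self.children[self._child_index(q)].insert(q)

    def insert(self, p):
        """Insert a point; returns False if it lies outside this cell."""
        if not self._contains(p):
            return False
        if self.children is None:
            if len(self.points) < self.capacity or self.half_size <= self.min_half_size:
                self.points.append(p)
                return True
            self._subdivide()
        return self.children[self._child_index(p)].insert(p)

    def query_box(self, lo, hi):
        """Return all stored points inside the axis-aligned box [lo, hi]."""
        for i in range(3):
            # Prune cells that cannot overlap the query box.
            if self.center[i] + self.half_size < lo[i] or self.center[i] - self.half_size > hi[i]:
                return []
        found = [p for p in self.points if all(lo[i] <= p[i] <= hi[i] for i in range(3))]
        if self.children is not None:
            for child in self.children:
                found.extend(child.query_box(lo, hi))
        return found


tree = Octree(center=(0.0, 0.0, 0.0), half_size=50.0)
for i in range(-5, 5):
    tree.insert((float(i), float(i), 0.0))
print(sorted(tree.query_box((0.0, 0.0, -1.0), (3.0, 3.0, 1.0))))
```

The pruning test at the top of `query_box` is what makes region queries cheap: whole subtrees that cannot overlap the query box are skipped without touching their points.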

Lidar Mapping Algorithms

Different lidar mapping algorithms employ various strategies to build and update maps.

- Incremental Mapping: These algorithms update the map progressively as the vehicle moves, incorporating new data and refining existing representations. This approach is well-suited for dynamic environments where the map needs to be constantly updated.

- Global Mapping: These methods create a comprehensive map of the entire environment, considering all the data collected during a mission. They are computationally more intensive but result in a complete representation of the environment.

- Simultaneous Localization and Mapping (SLAM): SLAM algorithms simultaneously estimate the vehicle’s location and build a map of the environment. This approach is widely used in robotics and autonomous driving applications.
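
The incremental approach can be illustrated with a small Python sketch that folds each new scan into a voxel map. It assumes the sensor pose for every scan is already known (in a full SLAM system the pose would be estimated at the same time) and that the vehicle moves in a plane; `IncrementalVoxelMap` and its methods are hypothetical names.

```python
import math


def transform_to_world(points, pose):
    """Move sensor-frame points into the world frame.

    pose = (x, y, yaw_rad) of the sensor in the world frame; planar motion
    is assumed, so z is passed through unchanged.
    """
    px, py, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(px + c * x - s * y, py + s * x + c * y, z) for x, y, z in points]


def voxel_key(point, voxel_size_m):
    """Integer index of the voxel that contains the point."""
    return tuple(math.floor(v / voxel_size_m) for v in point)


class IncrementalVoxelMap:
    """Map that is refined scan by scan as the vehicle moves."""

    def __init__(self, voxel_size_m=0.5):
        self.voxel_size_m = voxel_size_m
        self.hits = {}  # voxel index -> number of returns seen in that voxel

    def integrate_scan(self, points_sensor_frame, pose):
        for p in transform_to_world(points_sensor_frame, pose):
            key = voxel_key(p, self.voxel_size_m)
            self.hits[key] = self.hits.get(key, 0) + 1


world_map = IncrementalVoxelMap(voxel_size_m=0.5)
# The same landmark observed from two different (assumed known) poses.
world_map.integrate_scan([(5.2, 0.2, 0.2)], pose=(0.0, 0.0, 0.0))
world_map.integrate_scan([(3.2, -0.2, 0.2)], pose=(5.0, -3.0, math.pi / 2))
print(world_map.hits)
```

Because both observations land in the same voxel, its hit count rises, which is one simple way an incremental map gains confidence in static structure over time.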

Stages of Lidar Mapping

The stages of lidar mapping form a sequential pipeline:

- Data Acquisition: Lidar sensors capture the point cloud data.

- Preprocessing: Noise removal, range filtering, and ground filtering are applied to the point cloud.

- Data Segmentation: The point cloud is segmented into meaningful objects and features.

- Map Building: The processed data is used to construct the 3D map using a chosen data structure (e.g., octree, kd-tree).

- Map Updates: The map is continuously updated as the vehicle moves and new data becomes available.

Self-Driving Car Technology

Autonomous vehicles are rapidly evolving, transforming the way we think about transportation. This transformation relies heavily on sophisticated technologies, combining advanced sensors, complex algorithms, and powerful computing systems. The core aim is to create vehicles that can navigate and operate safely and efficiently without human intervention.

The journey towards fully autonomous vehicles is paved with technological breakthroughs and rigorous testing.

Key elements like robust sensor systems, precise mapping, and sophisticated decision-making algorithms are crucial to achieving safe and reliable operation. This involves a nuanced understanding of the environment, anticipating potential hazards, and executing appropriate maneuvers in real-time.

Key Components of a Self-Driving Car System

A self-driving car system is a complex interplay of various components, each playing a crucial role in achieving autonomous operation. These components work together seamlessly to enable the vehicle to perceive its surroundings, make decisions, and execute actions autonomously.

- Sensors: These are the eyes and ears of the autonomous vehicle, providing real-time data about the environment. Lidar, radar, cameras, and ultrasonic sensors are commonly used to collect information about the vehicle’s position, surrounding objects, and road conditions. The choice of sensor depends on the specific application and the desired level of accuracy and reliability.

- Perception Systems: These systems process the raw data from the sensors, interpreting it to create a comprehensive understanding of the environment. Lidar data, for instance, is crucial in generating detailed 3D maps of the surroundings, helping the vehicle to recognize objects, their positions, and their movements.

- Mapping Systems: These systems create and maintain detailed maps of the environment, including roads, lanes, and other relevant features. These maps are essential for navigation, route planning, and safe operation in various scenarios. High-definition maps are especially important for complex urban environments.

- Control Systems: These systems receive instructions from the decision-making unit and translate them into actions, controlling the vehicle’s steering, acceleration, and braking.

- Decision-Making Unit (DMU): The DMU is the “brain” of the autonomous vehicle. It processes information from the perception system and makes decisions based on real-time data. This includes determining the appropriate actions for navigating through traffic, responding to pedestrians, and handling unforeseen circumstances.

Role of Perception Systems in Autonomous Driving

Perception systems are at the heart of autonomous driving, enabling the vehicle to “see” and understand its surroundings. Lidar plays a significant role in this process by providing highly accurate 3D data.

- Data Acquisition: Perception systems gather information from various sensors. Lidar, for example, measures the distance to objects in the environment by emitting laser pulses and detecting the reflected signals. This allows the vehicle to create a comprehensive 3D representation of its surroundings.

- Object Recognition: The system identifies and classifies objects in the scene, such as vehicles, pedestrians, and cyclists. This is a crucial step for safe and reliable operation.

- Spatial Understanding: Perception systems determine the location and position of objects relative to the vehicle. Accurate spatial understanding is essential for safe navigation and avoidance maneuvers.

Importance of Sensor Fusion in Self-Driving Cars

Sensor fusion combines data from multiple sensors to provide a more comprehensive and reliable understanding of the environment. This improves accuracy and robustness compared to relying on a single sensor type.

- Complementary Data: Different sensors have strengths and weaknesses. For example, lidar excels at capturing 3D spatial information, while cameras are better at recognizing details and textures. Combining these sources improves the overall accuracy and reliability of the perception system.

- Redundancy and Reliability: Sensor fusion provides redundancy, reducing the impact of sensor failures or limitations. This enhances the system’s reliability in various challenging conditions, such as heavy rain or dense fog.

- Improved Accuracy: By integrating data from different sensors, the system can create a more precise and accurate representation of the environment, leading to more accurate object detection and tracking.
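
One simple way to see why fusion improves accuracy is inverse-variance weighting, sketched below in Python. It assumes each sensor reports an independent estimate of the same quantity together with a variance; the lidar and radar numbers are made up for illustration, and real fusion stacks typically use Kalman filters or learned models instead.

```python
def fuse_estimates(measurements):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    More certain sensors (smaller variance) receive larger weights, and the
    fused variance is never larger than the smallest input variance.
    """
    weight_sum = sum(1.0 / variance for _, variance in measurements)
    fused_value = sum(value / variance for value, variance in measurements) / weight_sum
    return fused_value, 1.0 / weight_sum


# Hypothetical range to the same vehicle, in meters, as (value, variance).
lidar = (20.00, 0.03 ** 2)  # precise range measurement
radar = (20.50, 0.50 ** 2)  # noisier range measurement
value, variance = fuse_estimates([lidar, radar])
print(value, variance)
```

The fused value sits very close to the lidar reading because the lidar variance is much smaller, while the radar still contributes a small correction and provides redundancy if the lidar drops out.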

Comparison of Self-Driving Car Platforms

Various self-driving car platforms utilize different combinations of sensors and algorithms. Platforms that incorporate lidar, such as Velodyne’s sensors, tend to perceive 3D structure more reliably in complex environments.

| Sensor Combination | Strengths | Weaknesses |
|---|---|---|
| Lidar + Camera + Radar | Enhanced 3D perception, object recognition, and environmental understanding | Higher cost; greater integration and processing complexity |
| Camera-only systems | Cost-effectiveness | Reduced accuracy in low light and challenging weather; limited direct 3D perception |

Lidar Data in Decision-Making Processes

Lidar data plays a crucial role in enabling autonomous vehicles to make informed decisions. Precise 3D data helps the vehicle to understand the environment, anticipate potential hazards, and execute appropriate maneuvers.

- Object Detection and Tracking: Lidar data allows the system to accurately detect and track moving objects, including vehicles, pedestrians, and cyclists, in real-time.

- Path Planning: Lidar-generated maps and object information help the vehicle to plan safe paths, avoiding collisions and obstacles.

- Predictive Modeling: By analyzing the movement of objects, the system can predict future trajectories and react accordingly, reducing the risk of accidents.
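
The tracking and prediction steps can be illustrated with a constant-velocity model, one of the simplest predictive models. The Python sketch below assumes an object’s position is observed in two consecutive lidar frames, expressed relative to the ego vehicle; the function names are hypothetical, and real planners use richer motion models.

```python
def estimate_velocity(previous_position, current_position, dt_s):
    """Finite-difference velocity from two tracked positions dt_s seconds apart."""
    return tuple((c - p) / dt_s for p, c in zip(previous_position, current_position))


def predict_position(position, velocity, horizon_s):
    """Constant-velocity prediction of where the object will be after horizon_s."""
    return tuple(p + v * horizon_s for p, v in zip(position, velocity))


def time_to_closest_approach(relative_position, relative_velocity):
    """Time (s) at which the object is nearest to the ego vehicle.

    Returns 0.0 when the object is stationary or already moving away.
    """
    speed_sq = sum(v * v for v in relative_velocity)
    if speed_sq == 0.0:
        return 0.0
    t = -sum(p * v for p, v in zip(relative_position, relative_velocity)) / speed_sq
    return max(t, 0.0)


# A vehicle seen 20 m ahead, then 19 m ahead 0.1 s later (relative coordinates).
velocity = estimate_velocity((20.0, 0.0), (19.0, 0.0), dt_s=0.1)
print(velocity)
print(predict_position((19.0, 0.0), velocity, horizon_s=1.0))
print(time_to_closest_approach((19.0, 0.0), velocity))
```

A planner could compare the time to closest approach against a safety threshold to decide whether to brake or change lanes.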

David Hall Interview Insights

My recent interview with David Hall, the founder of Velodyne and a leading expert in lidar mapping and self-driving cars, offered a clear view of where this rapidly evolving field is heading. His expertise in lidar technology and its application to autonomous vehicles shed light on the challenges and opportunities ahead.

Key Takeaways from the Interview

The interview highlighted the crucial role of lidar in achieving accurate and reliable perception for self-driving cars. Hall emphasized that lidar’s ability to precisely map the environment, particularly in complex and dynamic scenarios, is a significant advantage over other sensing technologies.

Future Trends in Lidar Technology

Hall’s projections for future lidar technology focused on improved resolution, increased range, and reduced cost. These advancements will enable lidar to capture more detailed and comprehensive data, leading to more sophisticated and robust mapping capabilities. Miniaturization is also a key trend, paving the way for more integrated and compact lidar systems in autonomous vehicles. Examples include the integration of lidar into smaller vehicles like delivery robots and drones, as well as its use in industries like agriculture and construction.

Challenges in Developing and Deploying Lidar-Based Self-Driving Cars

The interview underscored the challenges in developing and deploying lidar-based self-driving cars, including the cost of high-performance lidar systems and the complexity of integrating them into existing vehicle architectures. The need for extensive testing and validation in diverse real-world environments, along with robust safety protocols, was also highlighted. Additionally, the evolving regulatory landscape for autonomous vehicles presents a significant hurdle for deployment.

Opportunities in Lidar-Based Self-Driving Cars

Despite the challenges, Hall identified several significant opportunities. He highlighted the potential for lidar to enhance safety in diverse driving scenarios, particularly in challenging conditions like heavy fog, low light, and complex urban environments. Furthermore, lidar’s ability to provide precise environmental data can optimize traffic flow and improve logistics, leading to increased efficiency and reduced congestion.

David Hall’s Perspective on the Future of Self-Driving Cars

Hall’s perspective is optimistic about the future of self-driving car technology. He believes lidar’s continued advancements and the increasing maturity of software algorithms will enable the widespread adoption of autonomous vehicles. He envisions a future where lidar-equipped vehicles play a crucial role in improving safety, efficiency, and accessibility in transportation.

Summary of Interview Key Points

| Topic | Key Points |
|---|---|
| Key Takeaways | Lidar crucial for reliable perception; superior environmental mapping. |
| Future Trends | Improved resolution, extended range, reduced cost; miniaturization for integration into various applications. |
| Challenges | High cost of advanced lidar; complex integration into vehicle systems; rigorous testing and regulatory hurdles. |
| Opportunities | Enhanced safety in diverse conditions; optimized traffic flow; improved logistics. |
| Future of Self-Driving Cars | Optimistic outlook; lidar advancements and software maturity lead to widespread adoption; crucial role in improving safety and accessibility. |

Illustrative Examples of Lidar Mapping

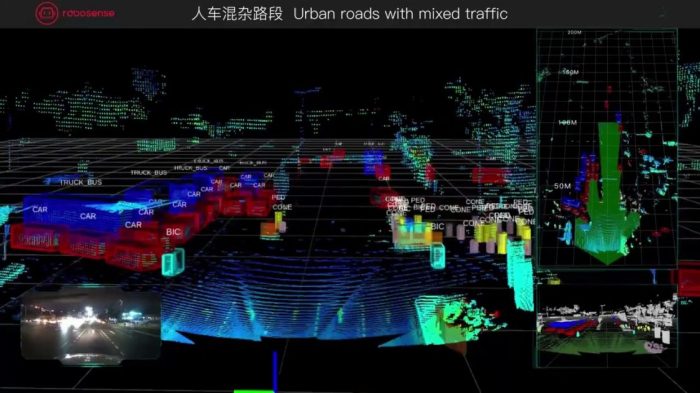

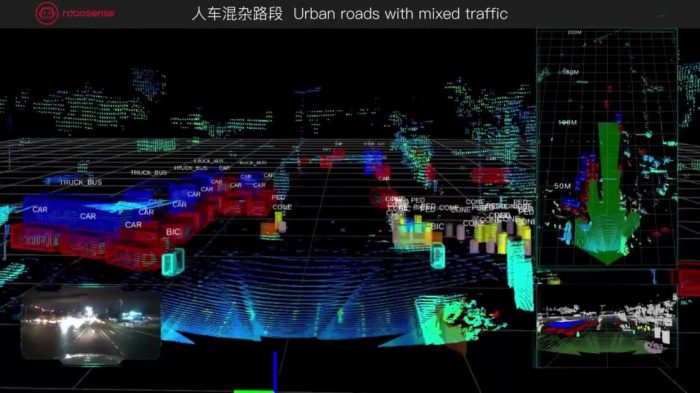

Lidar mapping, a crucial component of self-driving car technology, involves creating detailed 3D models of the environment using laser scanners. These models are vital for navigation, object recognition, and safe autonomous operation. The accuracy and reliability of these maps are critical to the success of self-driving systems. This section explores real-world applications and showcases the diverse capabilities of lidar mapping in various environments.

Real-World Lidar Mapping Projects

Lidar mapping projects are increasingly prevalent in the development of autonomous vehicles. These projects involve extensive data collection, processing, and integration into the overall self-driving system. The collected data is used to generate high-resolution maps, which are crucial for various functionalities, such as route planning, obstacle detection, and safe navigation.

Case Studies of Successful Implementations

Numerous companies are actively developing and deploying lidar-based mapping systems. These systems have demonstrated effectiveness in various settings, ranging from urban environments to highway driving.

- Waymo: Waymo, a leading autonomous vehicle company, utilizes lidar mapping extensively in its fleet of self-driving vehicles. Their maps are incredibly detailed, incorporating rich data on road markings, traffic signals, and other critical infrastructure. This detailed mapping allows for highly reliable navigation and safe operation in diverse urban and highway scenarios.

- Cruise: Cruise, another major player in the autonomous vehicle industry, leverages lidar mapping for its autonomous taxi service. Their mapping system is designed to adapt to dynamic environments, capturing changes in road layouts and infrastructure over time. This adaptability is crucial for navigating evolving urban landscapes.

- TuSimple: TuSimple, focused on autonomous trucking, has utilized lidar mapping to create high-resolution models of highways. Their focus on highway mapping demonstrates the application of lidar in optimizing long-distance travel for autonomous vehicles. This includes precise detection of lane markings, traffic signals, and potential hazards.

Benefits in Specific Scenarios

Lidar mapping offers unique advantages in various environments, enhancing the reliability and safety of autonomous vehicles.

- Urban Environments: In dense urban areas, lidar mapping allows for accurate representation of complex road networks, pedestrian crossings, and various obstacles. This detailed mapping is essential for safe navigation and maneuverability within urban settings. It also aids in detecting and navigating through unexpected obstructions or traffic patterns.

- Highways: On highways, lidar mapping provides precise information on lane markings, road boundaries, and traffic flow patterns. This allows for smoother and safer autonomous driving experiences, especially in conditions like heavy traffic or adverse weather. The high accuracy of lidar data is especially beneficial for long-distance driving.

- Parking Lots: Lidar mapping can precisely model parking spaces, entry/exit points, and other relevant features within parking structures. This information facilitates smooth and safe autonomous parking maneuvers, a key element for convenient and safe parking assistance. The detailed map can even differentiate between various vehicle types, helping the autonomous vehicle find suitable parking spots.

Lidar Mapping Accuracy Comparison

The accuracy of lidar mapping varies depending on the environment, as the table below shows:

| Environment | Accuracy (meters) | Description |
|---|---|---|
| Urban | 0.05 – 0.20 | High density of objects, varying heights and shapes require higher precision. |
| Highway | 0.10 – 0.30 | Generally simpler layout; fewer complex obstacles; focus on lane markings and road boundaries. |
| Parking Lot | 0.15 – 0.40 | Obstacles vary, with parking spaces being a key element; potentially varied object types. |

Visualization and Interpretation of Lidar Data

Lidar mapping data is typically visualized as a point cloud, a dense collection of 3D points representing the environment. Software tools interpret this data to create 2D and 3D maps, highlighting key features like roads, buildings, and obstacles.

Visualization software allows for filtering, highlighting, and annotation of the point cloud, enabling engineers to understand and interpret the environment effectively.
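
One common 2D view derived from a point cloud is a bird’s-eye-view height map. The Python sketch below rasterizes points into a grid that stores the highest return per cell; the function name, grid bounds, and cell size are assumptions for illustration.

```python
def birds_eye_height_map(points, x_range, y_range, cell_size_m):
    """Rasterize (x, y, z) points into a 2D grid holding the max height per cell.

    Cells that receive no points stay None. Rows follow y and columns follow x.
    """
    cols = int((x_range[1] - x_range[0]) / cell_size_m)
    rows = int((y_range[1] - y_range[0]) / cell_size_m)
    grid = [[None] * cols for _ in range(rows)]
    for x, y, z in points:
        col = int((x - x_range[0]) // cell_size_m)
        row = int((y - y_range[0]) // cell_size_m)
        if 0 <= row < rows and 0 <= col < cols:
            if grid[row][col] is None or z > grid[row][col]:
                grid[row][col] = z
    return grid


cloud = [
    (0.5, 0.5, 1.0),
    (0.6, 0.4, 2.0),     # same cell as the point above, but taller
    (3.5, 1.5, 0.3),
    (10.0, 10.0, 5.0),   # outside the grid, ignored
]
for row in birds_eye_height_map(cloud, (0.0, 4.0), (0.0, 2.0), 1.0):
    print(row)
```

Rendering each cell’s height as a color gives the familiar top-down lidar image, in which tall structures such as buildings and vehicles stand out from the road surface.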

Lidar Mapping Data Processing Techniques

Lidar mapping, crucial for autonomous vehicles, relies heavily on processing raw point cloud data into usable information. This involves a series of intricate steps, from initial data cleaning to the creation of detailed 3D models. Effective processing is paramount for accurate navigation and object recognition, underpinning the safety and reliability of self-driving cars.

Pre-processing Lidar Data

Pre-processing is the first crucial step, ensuring the data is suitable for further analysis. This involves identifying and mitigating issues like noise and outliers, which can significantly impact the accuracy of subsequent steps.

- Noise Removal: Lidar sensors can sometimes produce spurious readings due to environmental factors (e.g., reflections from non-objects, atmospheric interference). Techniques like statistical filtering, median filtering, and moving average filters are commonly used to smooth the point cloud, eliminating random noise. For example, a moving average filter averages the values of nearby points, effectively reducing isolated noise points.

- Outlier Detection: Outliers are data points that differ significantly from the majority. They can arise from sources such as sensor malfunctions or unusual environmental conditions. Statistical tests (e.g., flagging points that fall more than a set number of standard deviations from the mean) and clustering algorithms (e.g., flagging points not closely associated with any other points) can identify and remove these outliers, enhancing the overall quality of the point cloud.
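
A statistical outlier filter of the kind described here can be sketched as follows. For each point it computes the mean distance to its k nearest neighbours and drops points whose value exceeds the global mean by more than a chosen number of standard deviations. The brute-force neighbour search and the parameter values are simplifications chosen for readability; practical implementations use a spatial index such as a k-d tree.

```python
import math
import statistics


def statistical_outlier_removal(points, k=3, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbours is unusually large.

    Requires more than k points. Brute force, O(n^2), for illustration only.
    """
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    threshold = statistics.mean(mean_dists) + std_ratio * statistics.pstdev(mean_dists)
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# Eight corners of a unit cube plus one stray return far from everything else.
cube = [(float(x), float(y), float(z)) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
cleaned = statistical_outlier_removal(cube + [(50.0, 50.0, 50.0)])
print(len(cleaned))
```

The stray point has a far larger neighbour distance than the cube corners, so it exceeds the threshold and is removed while the dense structure is kept.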

Point Cloud Registration and Alignment

Accurate alignment of multiple point clouds is essential for building a comprehensive 3D map. This involves registering scans taken from different viewpoints to a common coordinate system.

- Iterative Closest Point (ICP) Algorithm: A widely used technique for point cloud registration, ICP iteratively finds the best transformation (rotation and translation) that minimizes the distance between corresponding points in different scans. This process is repeated until the error falls below a threshold, achieving a precise alignment. The ICP algorithm is particularly effective in aligning scans of objects that overlap considerably.

- Feature-based Registration: This method leverages distinctive features (e.g., corners, edges, planes) in the point clouds. These features are identified and matched across different scans, facilitating alignment. This approach is often more robust than ICP in situations with less overlap or significant changes in viewpoint.
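
The ICP loop can be sketched compactly in two dimensions, where the best rigid transform for a set of matched pairs has a closed form. The Python code below is a simplified 2D illustration with brute-force matching, not a production 3D implementation (which would typically use an SVD-based solver and a k-d tree); all function names are hypothetical.

```python
import math


def best_rigid_transform_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    s_cos = s_sin = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def apply_transform(points, theta, tx, ty):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(source, target, max_iterations=30, tolerance=1e-9):
    """Align source to target; returns (aligned_points, mean_squared_error)."""
    current = list(source)
    prev_error = error = float("inf")
    for _ in range(max_iterations):
        # 1. Match every source point to its closest target point.
        matched = [min(target, key=lambda q: math.dist(p, q)) for p in current]
        # 2. Solve for the rigid transform that best aligns the matched pairs.
        theta, tx, ty = best_rigid_transform_2d(current, matched)
        current = apply_transform(current, theta, tx, ty)
        # 3. Stop once the error no longer improves.
        error = sum(math.dist(p, q) ** 2 for p, q in zip(current, matched)) / len(current)
        if abs(prev_error - error) < tolerance:
            break
        prev_error = error
    return current, error


target = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
source = apply_transform(target, 0.05, 0.1, -0.1)  # slightly rotated and shifted copy
aligned, mse = icp_2d(source, target)
print(mse < 1e-12)
```

As the text notes, ICP depends on a good initial overlap: if the starting misalignment is large, the nearest-neighbour matches are wrong and the loop can converge to a poor local minimum.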

Feature Extraction and Object Recognition

Extracting meaningful features from point clouds is crucial for object recognition. These features help differentiate objects, such as vehicles, pedestrians, and obstacles.

- Shape Descriptors: Shape characteristics like curvature, surface normals, and volumetric properties are extracted from the point cloud. These descriptors can be used to distinguish between different object types. For example, the curvature of a building’s wall is significantly different from the curvature of a road.

- Clustering Algorithms: Clustering algorithms group similar points together, forming clusters that correspond to objects or features in the scene. This process helps identify and segment objects within the point cloud. Common algorithms used for this task include k-means and DBSCAN.
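
As an illustration of density-based clustering, here is a compact DBSCAN sketch in pure Python. The `eps` and `min_points` values are assumptions chosen for the toy data, and the brute-force neighbour search stands in for the spatial index a real pipeline would use.

```python
import math


def region_query(points, idx, eps):
    """Indices of all points within eps of points[idx] (the point itself included)."""
    return [j for j, q in enumerate(points) if math.dist(points[idx], q) <= eps]


def dbscan(points, eps=0.5, min_points=3):
    """Return one label per point: 0, 1, ... for clusters and -1 for noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)
    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        neighbours = region_query(points, i, eps)
        if len(neighbours) < min_points:
            labels[i] = NOISE  # may be relabelled later as a border point
            continue
        cluster_id += 1
        labels[i] = cluster_id
        queue = [j for j in neighbours if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point reached from a core point
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster_id
            j_neighbours = region_query(points, j, eps)
            if len(j_neighbours) >= min_points:
                queue.extend(j_neighbours)  # j is a core point: keep expanding
    return labels


cloud = [
    (0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (0.0, 0.2, 0.0), (0.2, 0.2, 0.0),  # object A
    (5.0, 5.0, 0.0), (5.2, 5.0, 0.0), (5.0, 5.2, 0.0),                   # object B
    (20.0, 20.0, 0.0),                                                    # stray return
]
print(dbscan(cloud))
```

Unlike k-means, DBSCAN does not need the number of objects in advance and labels isolated returns as noise, which suits scenes where the object count is unknown.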

Creating 3D Models and Meshes

Creating 3D models and meshes from point clouds is a crucial step for visualization and further analysis. The process involves transforming the point cloud into a more structured representation.

- Surface Reconstruction Algorithms: Algorithms like Poisson surface reconstruction and marching cubes convert the point cloud into a triangulated mesh or surface representation. These algorithms effectively capture the shape and form of the object or scene, allowing for realistic visualizations.

- Mesh Simplification: Simplification algorithms reduce the complexity of the mesh while retaining the essential features, making it more efficient for use in applications like autonomous navigation. This is particularly helpful for large datasets, where memory and computational resources are a concern.

Lidar Data Processing Flowchart for Autonomous Driving

The table below outlines the sequential steps involved in processing lidar data for autonomous driving.

| Step | Description |
|---|---|
| Data Acquisition | Collecting raw lidar point clouds from various sensors. |
| Noise Removal & Outlier Detection | Cleaning the data by removing spurious points and outliers. |
| Point Cloud Registration | Registering multiple point clouds to a common coordinate system. |
| Feature Extraction | Extracting meaningful features from the registered point cloud. |
| Object Recognition | Identifying and classifying objects within the scene. |
| 3D Model Creation | Creating a 3D model or mesh of the environment. |
| Data Visualization & Analysis | Visualizing and analyzing the processed data for further use in autonomous driving applications. |

Conclusion

The interview with David Hall provided a fascinating perspective on the future of lidar-based self-driving cars. Key takeaways included the evolving nature of lidar technology, the critical role of accurate mapping in autonomous navigation, and the ongoing challenges and opportunities in the field. The discussion highlighted the importance of lidar’s accuracy and reliability in various driving environments, from urban settings to highways.

The future of self-driving technology hinges on the continued development and refinement of lidar mapping techniques. This discussion underscores the crucial role of Velodyne’s lidar in shaping the future of autonomous driving.