Shadow banning on Twitter is a contested moderation practice that many users believe limits their reach. This exploration examines how Twitter’s shadow bans are thought to work, from the mechanics behind them to their impact on user experience. We’ll analyze the signs of a shadow ban, the role of moderation, and the potential effects on user behavior and content creation.

Understanding the different types of moderation actions on Twitter, including how a shadow ban differs from more direct methods, is crucial. This overview also considers alternative moderation approaches and real-world examples of shadow bans, aiming to provide a comprehensive picture of this often-misunderstood aspect of the platform.

Understanding the Concept of Twitter Shadow Bans

Twitter shadow bans are a form of algorithmic suppression that reduces a user’s visibility and engagement on the platform without notifying them of the action. Because these restrictions are subtle yet can significantly affect a user’s reach and overall experience, it is worth understanding how they operate. Twitter appears to implement shadow bans using a combination of factors rather than a single switch.

These factors are not always readily apparent to the user, and the exact algorithms are proprietary. However, common indicators include a decline in tweet visibility, fewer likes and retweets, decreased engagement with comments, and a noticeable reduction in the number of impressions.

Mechanisms of Twitter Shadow Bans

Twitter’s algorithms employ complex systems for content moderation and user engagement. These systems can subtly penalize accounts or content deemed problematic, resulting in a shadow ban. This can manifest in a number of ways, such as decreased visibility of tweets in search results, reduced organic reach to followers, or a significant drop in impressions. The exact mechanisms remain somewhat opaque, as Twitter doesn’t publicly disclose the criteria used for shadow banning.

Impact on User Experience

Shadow bans can severely affect user experience on Twitter. A significant drop in visibility limits a user’s ability to reach their target audience, and reduced engagement shows up as fewer likes, retweets, and replies on their posts. Because the loss of reach is often felt acutely, it can damage user morale and lead users to disengage from the platform.

Differences Between Shadow Bans and Other Moderation Actions

Understanding the distinctions between shadow bans and other Twitter moderation actions is critical. Shadow bans operate in the background, reducing visibility and engagement without explicit communication, unlike other forms of moderation that result in account suspension or content removal.

| Feature | Shadow Ban | Other Moderation Actions |
|---|---|---|
| Visibility | Content stays visible to its author but is ranked lower or hidden from others | Content or account is removed or suspended outright |
| Engagement | Fewer interactions (likes, retweets, replies) | Interactions are suspended or blocked |
| Reach | Limited reach to followers and in search | No reach; removed content is not visible to any user |

Examples of Shadow Ban Indicators

A notable decline in impressions, likes, and retweets compared with past performance is one of the clearest indicators. A lack of engagement from followers despite consistent posting can be another symptom. Tweets that appear drastically less often in search results or in followers’ feeds are also strong signs of a shadow ban. Finally, many users describe a general sense of reduced presence on the platform even without any notification, although that impression is only meaningful when the measurable signs above back it up.


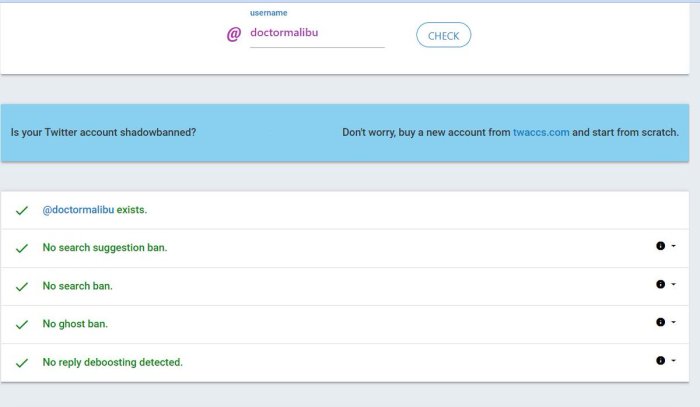

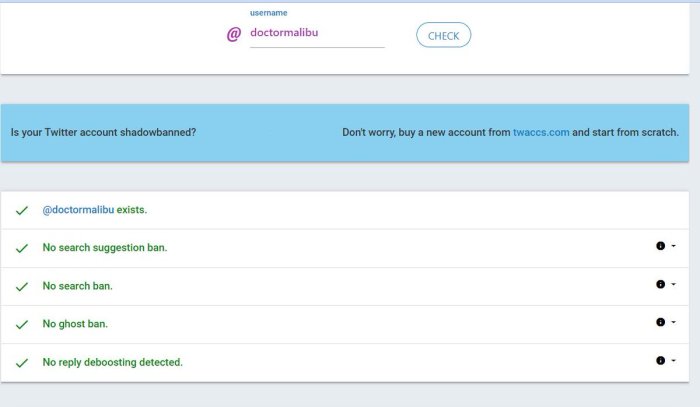

Identifying the Signs of a Shadow Ban

Spotting the subtle signals of a Twitter shadow ban can be tricky. Twitter doesn’t announce shadow bans, but certain indicators can hint at a potential problem. Understanding these signs is crucial for maintaining an active and engaging presence on the platform, and this section covers the common symptoms and how to tell them apart from normal algorithm adjustments. Identifying a shadow ban isn’t about finding a single definitive answer.

It’s about recognizing a constellation of factors that, taken together, suggest a potential problem. A single tweet with reduced reach might be coincidental, but several of these signs appearing together point more strongly toward a shadow ban. The key is to watch for changes in your account’s performance relative to its previous patterns.

Common Indicators of a Shadow Ban

A significant drop in reach, coupled with a decline in engagement metrics, is a prime indicator of a shadow ban. This includes lower impressions, retweets, likes, and replies on your tweets. Pay close attention to these metrics and track them over time to spot patterns. Tweets that seem to vanish from followers’ feeds without explanation are another possible sign.
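To make “tracking metrics over time” concrete, here is a minimal Python sketch that compares an account’s recent daily averages against its own earlier baseline and only raises a flag when several metrics fall together, in line with the point that one weak tweet proves little. The function names, the seven-day window, the 50% threshold, and the three-metric minimum are all illustrative assumptions, and the input is assumed to be daily numbers you have copied from your own analytics; nothing here calls a Twitter API. A positive result only means engagement dropped sharply relative to your history, which an algorithm change or a quieter audience could explain just as well.

```python
from statistics import mean

# Hypothetical helper, not a Twitter API: the window, threshold and metric
# names are illustrative assumptions. `history` maps a metric name to a list
# of daily values, oldest first, e.g. copied from your own analytics export.
def metric_drops(history, recent_days=7, threshold=0.5):
    """Return {metric: recent/baseline ratio} for metrics that fell below threshold."""
    flagged = {}
    for name, values in history.items():
        if len(values) <= recent_days:
            continue  # too little data to form a baseline
        baseline = mean(values[:-recent_days])  # earlier period
        recent = mean(values[-recent_days:])    # most recent window
        if baseline > 0 and recent / baseline < threshold:
            flagged[name] = round(recent / baseline, 2)
    return flagged


def looks_like_sustained_drop(history, min_metrics=3, **kwargs):
    # A single weak metric may be noise; require several to drop together.
    return len(metric_drops(history, **kwargs)) >= min_metrics


# Example: three weeks of steady numbers followed by one much weaker week.
history = {
    "impressions": [1000] * 21 + [300] * 7,
    "likes": [40] * 21 + [10] * 7,
    "retweets": [8] * 21 + [2] * 7,
    "replies": [5] * 28,
}
print(metric_drops(history))               # {'impressions': 0.3, 'likes': 0.25, 'retweets': 0.25}
print(looks_like_sustained_drop(history))  # True
```

Comparing an account against its own history, rather than against a fixed number, reflects the advice above: what counts as normal reach varies widely from one account to another.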

Subtle Signs of a Shadow Ban

Beyond the obvious, subtler indicators can also signal a shadow ban. Watch for your tweets appearing less often in your followers’ timelines, or consistently failing to reach the same audience as before. A significant decrease in the visibility of your replies to others’ tweets, or a slower rate of new followers, also warrants scrutiny.

A marked shift in the type of audience interacting with your tweets, potentially with a drop in engagement from your core followers, could be a further sign.

Comparing User Complaints to Algorithm Changes

Numerous users complain about shadow bans, citing decreased visibility as the primary concern. However, it’s essential to distinguish genuine algorithm changes from user perceptions. Twitter’s algorithm is constantly evolving, influencing the visibility of tweets. A decrease in reach could be due to the algorithm prioritizing different types of content or adjusting its ranking criteria. Sometimes, this is a result of changes in user behavior on the platform, such as an increase in spam or irrelevant content.

Common Misconceptions about Shadow Bans

Several misconceptions surround shadow bans. One common myth is that a shadow ban is always immediately noticeable; in practice, the impact can be gradual and subtle, making it difficult to detect. Another is that every drop in visibility reflects deliberate censorship. Twitter’s algorithm prioritizes content it predicts will match user interests, which can reduce a post’s visibility without any intent to suppress it.


Similarly, a lack of engagement with specific users or accounts does not automatically indicate a shadow ban. Twitter’s algorithm uses various factors to determine visibility.

The Role of Moderation in Twitter Shadow Bans

Twitter’s shadow banning, a practice in which accounts are subtly penalized without explicit notification, raises significant questions about the role of moderation. This opaque process often leaves users feeling unfairly targeted, and the lack of transparency around these decisions contributes to a climate of suspicion and distrust. Understanding the moderation mechanisms behind these actions is therefore crucial. The moderation process plays a pivotal role in determining whether a shadow ban is applied.

Human moderators or automated systems assess user content and activity against Twitter’s terms of service and community guidelines. Because these criteria are often broad and open to interpretation, enforcement can be inconsistent.

Moderation Policies and Procedures

Twitter’s policies and procedures regarding content moderation are complex and not publicly detailed. They are frequently updated, making it difficult for users to fully comprehend the rules. This lack of transparency often leaves users uncertain about the specific actions that trigger a shadow ban. The use of algorithms to filter content plays a significant role, sometimes leading to misinterpretations or errors in judgment.

Possible Motivations Behind Shadow Bans

Several motivations could drive Twitter’s shadow banning practices. One is a desire to maintain a particular kind of online environment, which might involve suppressing viewpoints or types of content that Twitter’s leadership deems undesirable. Another is the need to combat spam and harmful activity and protect users from unwanted content. However, the subjective nature of these decisions and the lack of transparency raise concerns about potential abuse of power.

There are concerns about potential bias and manipulation in moderation decisions, as well as the possibility of influencing the public conversation by controlling visibility.


Perspectives on Fairness and Effectiveness

Various perspectives exist regarding the fairness and effectiveness of Twitter’s moderation policies. Critics argue that the lack of transparency and clear guidelines allows for arbitrary and discriminatory enforcement, potentially impacting the freedom of expression. Conversely, proponents argue that shadow banning is a necessary tool for maintaining a safe and healthy platform, particularly in the context of combating harassment, abuse, and misinformation.

The debate over the balance between free speech and platform responsibility remains a crucial aspect of online discourse. The effectiveness of these policies in achieving these goals is often questioned, especially given the lack of concrete data and user feedback on the impact of shadow bans.

Impact on User Behavior and Content Creation

Twitter shadow bans, while often subtle, can significantly affect user behavior and the broader ecosystem of content creation on the platform. Users under these invisible restrictions often sense a disconnect between their efforts and the response they receive, which leads to frustration and discouragement. This can show up in many ways, from reduced engagement to a complete shift in how users interact with Twitter.

Frustration and Reduced Engagement

Shadow bans frequently result in a noticeable decrease in engagement. Users may see their tweets receive fewer likes, retweets, or replies than before, even when posting high-quality content. This lack of interaction can lead to feelings of isolation and frustration, prompting users to question their presence on the platform. The perceived lack of recognition for their efforts can deter users from participating actively in discussions and creating new content.

Furthermore, the uncertainty surrounding the cause of the reduced engagement can exacerbate these feelings of frustration.

Effect on Content Creation and Sharing

Shadow bans directly impact the creation and sharing of content. Users may become hesitant to post, fearing further repercussions. This can lead to a reduction in the overall volume of content shared on the platform, particularly if the user feels their efforts are being stifled. Moreover, the perceived unfairness of shadow bans can lead to a decline in the quality of content shared.

Users might become discouraged and focus less on the substance and originality of their posts, opting instead for less substantial contributions.

Disproportionate Impact on Demographics and Content Types

Shadow bans can disproportionately impact certain demographics and types of content. Certain communities or user groups may experience higher rates of shadow bans due to the nature of their conversations or the platform’s perception of their content. Similarly, specific types of content, such as political commentary or content related to controversial topics, may be more susceptible to shadow bans.

This uneven application can lead to a skewed representation of voices and perspectives on the platform.

User Responses to Shadow Bans

| User Response | Description | Potential Outcome |
|---|---|---|
| Appeal | Attempting to resolve the issue with Twitter through direct communication or reporting | Positive (issue resolved) or negative (issue remains unresolved) |
| Content Adaptation | Modifying content to fit Twitter’s perceived expectations, possibly at the cost of originality or message integrity | Success (visibility recovers) or failure (visibility remains limited) |
| Migration | Moving to alternative social media platforms in search of a more receptive environment | Positive (engagement and community found) or negative (loss of existing network and engagement) |

Alternative Moderation Approaches

Twitter’s current shadow ban system, while aiming to curb harmful content, often falls short on transparency and effectiveness. This highlights the need for alternative approaches that prioritize user rights and platform accountability. A more nuanced moderation system, one that balances content regulation with user freedom, is crucial for a healthy online environment. Alternative moderation methods that focus on transparency and user input could lead to a fairer and more efficient system.

These methods may include community-based reporting mechanisms with clear guidelines and appeals processes, allowing users to challenge decisions and understand the rationale behind them. Such a system would foster a sense of ownership and accountability among users.

Alternative Moderation Models

Different social media platforms employ various moderation strategies. Facebook, for instance, combines automated tools with human review, often in a tiered system. This allows a more granular approach to content moderation, so the platform can respond to specific issues with targeted actions. YouTube relies on community guidelines and user reports, which encourages a more collaborative approach.

Flaws in Twitter’s Current System

Twitter’s current shadow ban system lacks transparency, making it difficult for users to understand why their content is being suppressed. This ambiguity can lead to frustration and a feeling of injustice. Furthermore, the system’s reliance on automated processes can result in misclassifications of content, leading to the silencing of legitimate viewpoints. The lack of a clear appeals process exacerbates these issues, as users have limited recourse when they believe their content has been unfairly suppressed.

The opaque nature of the system also creates an environment ripe for abuse and manipulation.

Benefits and Drawbacks of Transparent Moderation

Transparent moderation, in which the rules and rationale behind content decisions are openly shared, could enhance user trust and accountability. Users would understand the platform’s criteria for content removal and could appeal decisions within a clear framework. It could also deter malicious actors who try to manipulate or exploit the system. However, transparent moderation has potential drawbacks. Publishing moderation policies might inadvertently reveal weaknesses that could be exploited by those seeking to circumvent the rules.

Publicly debating the nuances of each decision could also lead to prolonged conflicts and hinder the swift removal of harmful content. The transparency of the process must be carefully balanced with the need for efficiency and safety.

Community-Based Reporting Systems

Community-based reporting systems could provide a more democratic and efficient method of content moderation. Instead of relying solely on algorithms or a small team of moderators, a system in which users flag inappropriate content could keep the platform responsive to community concerns. This approach could also help identify emerging trends and issues more quickly. For example, letting users report content that violates community guidelines and explain their reasoning would give platforms valuable insight into community sentiment and allow a faster response to potentially harmful trends.

This could also build a sense of community ownership in the moderation process. Combined with a robust appeals process, such systems can reduce the potential for error or bias.

Comparison with Other Platforms

Platforms like Reddit utilize a combination of automated systems and user-driven reporting. This approach provides a balance between efficiency and user input. However, each platform’s approach is tailored to its specific community and content.

Illustrative Cases of Shadow Bans

Shadow bans, a largely hidden form of content moderation, can significantly affect a user’s online experience on platforms like Twitter. While the precise mechanisms and criteria are often opaque, real-world examples can shed light on their potential effects and on how Twitter’s policies might be applied. The lack of transparency makes it challenging for users to identify and counter these restrictions. This section looks at reported instances of shadow bans, highlighting how user engagement, content types, and broader societal trends can interact with Twitter’s moderation policies.

We’ll examine how certain actions or patterns might trigger a shadow ban, along with any successful appeals or workarounds.

Examples of User Impact

Users often report a decline in visibility for their tweets, retweets, and replies, even though the posts are not explicitly flagged or removed. This can manifest as a decrease in engagement from other users, lower impressions, and fewer retweets. Some users report noticing a decrease in mentions or direct messages, which can suggest a shadow ban. The impact is often subtle, making it hard to pinpoint the cause of decreased engagement.

Documented Cases of Successful Appeals

Unfortunately, there are few publicly documented accounts of successful appeals against shadow bans. While Twitter’s support system can be contacted, concrete examples of successful appeals are scarce, and users attempting to appeal often cite the lack of transparency as a significant obstacle. There are anecdotal accounts of users regaining visibility after changing their content, suggesting that adherence to Twitter’s guidelines might be a key factor in overcoming a shadow ban.

However, there’s no guarantee of success, and the specific actions that led to a successful appeal are rarely disclosed.

Impact of Events and Trends

Specific events or trends may affect how shadow bans are applied. For instance, during periods of heightened political discourse or social unrest, Twitter’s moderation policies might be applied more rigorously, which could lead to a surge in shadow bans as the platform tries to manage a larger volume of potentially controversial content. Similarly, if a specific hashtag or topic gains prominence, moderators might scrutinize content associated with it more closely.

Illustrative Case Studies

While detailed case studies are difficult to obtain, reports suggest that certain types of content, like those related to sensitive political topics, religious discussions, or those that strongly contradict popular opinions, are more susceptible to shadow bans. The application of shadow bans can be a complex and unpredictable process, with specific users or accounts being disproportionately affected, based on a variety of factors, potentially including the platform’s algorithmic interpretation of user behavior and content.

End of Discussion

In conclusion, Twitter shadow ban moderation presents a significant challenge for users. The potential for reduced visibility, engagement, and reach can severely impact user experience. Understanding the mechanisms behind shadow bans, recognizing the signs, and exploring alternative moderation approaches are key steps in navigating this complex aspect of the platform. This discussion has highlighted the importance of transparency and fairness in online moderation practices.