The C-Suite Guide to GenAI Risk Management provides a comprehensive overview of the risks associated with generative AI. This guide explores the crucial considerations for executives in navigating the complexities of this transformative technology, highlighting the potential impact on various business functions. It delves into risk identification, assessment, and mitigation strategies, offering practical frameworks and case studies to empower the C-suite to effectively manage these emerging risks.

The guide meticulously examines the potential impact of generative AI on different sectors, identifying and classifying various risks, such as data privacy, security breaches, bias, and misuse. It emphasizes the importance of a robust risk management program, outlining key roles, responsibilities, and monitoring procedures to ensure the long-term viability and ethical use of generative AI.

Introduction to Generative AI Risk Management for the C-Suite

Generative AI, with its ability to create new content like text, images, and code, is rapidly transforming industries. This transformative potential, however, comes with significant risks that require proactive management. Understanding these risks and implementing effective mitigation strategies is no longer a nice-to-have, but a critical responsibility for every C-suite executive. Failure to address these risks can lead to reputational damage, financial losses, and even legal repercussions. The C-suite must take a proactive and strategic approach to generative AI risk management.

This involves understanding the unique challenges posed by this technology, developing comprehensive risk assessment frameworks, and establishing clear policies and procedures to mitigate identified threats. This approach is essential to harness the power of generative AI while minimizing the potential for harm.

Generative AI and its Potential Risks

Generative AI models, trained on massive datasets, can produce highly realistic outputs. This realism, while powerful, can also be exploited for malicious purposes. For example, deepfakes, synthetically generated content designed to deceive, can damage reputations and manipulate public opinion. Similarly, AI-generated misinformation can spread rapidly, impacting public trust and potentially influencing crucial decisions. The potential for biased outputs from these models, reflecting biases present in the training data, also poses a significant risk.

These biases can perpetuate harmful stereotypes and discrimination, potentially leading to legal challenges and reputational harm.

Navigating the complexities of GenAI risk management is crucial for the C-suite. Companies need to address potential issues proactively, and understanding the geopolitical implications of AI development, as well as the ethical considerations, is vital for crafting effective strategies. This requires a thorough understanding of the technology’s potential impact on various business sectors and an ability to adapt to a constantly evolving landscape.

Importance of Risk Management for Executives

Executives bear the ultimate responsibility for the ethical and responsible use of generative AI within their organizations. The financial and reputational impact of a security breach or a misuse of generative AI can be catastrophic. Effective risk management helps to identify and mitigate these risks, ensuring the long-term sustainability and success of the business. Proactive measures, such as establishing clear guidelines for data usage and output validation, help prevent the negative consequences that can stem from uncontrolled deployment.

Key Considerations for the C-Suite

The C-suite must consider several key aspects when addressing generative AI risks. These include:

- Data Privacy and Security: Ensuring the secure handling of data used to train and operate generative AI models is paramount. Robust data governance policies and stringent security protocols are critical. This involves understanding the data sources, their sensitivity, and the potential for data breaches.

- Bias and Fairness: Generative AI models inherit biases from the data they are trained on. The C-suite needs to implement strategies for identifying and mitigating these biases. Regular audits and diverse teams can help in this process. This includes understanding the potential impact of biases on different customer segments and developing strategies to address them. Careful selection of training data and the use of bias detection tools are essential.

- Misuse and Malicious Applications: Generative AI can be used to create fraudulent content, including phishing emails, counterfeit products, and deepfakes. The C-suite must establish mechanisms to detect and prevent such misuse. This includes the implementation of robust security measures and collaborations with industry experts to stay abreast of evolving threats.

- Intellectual Property Rights: Ensuring the use of generative AI does not infringe on existing intellectual property rights is a critical concern. Clear policies for usage and licensing are essential to avoid legal complications. This includes a thorough understanding of copyright and patent laws and developing protocols for using AI-generated content ethically and legally.

Potential Impact on Business Functions

Generative AI has the potential to impact virtually every business function. From marketing and customer service to product development and operations, the applications are broad and far-reaching.

- Marketing and Sales: AI can generate compelling marketing materials, personalize customer experiences, and automate sales processes.

- Product Development: AI can accelerate product design and testing, allowing for quicker iteration and innovation.

- Customer Service: AI can provide 24/7 customer support, handle routine inquiries, and resolve issues quickly and efficiently.

- Operations and Logistics: AI can optimize supply chains, automate tasks, and improve overall operational efficiency.

Generative AI Risk Categories

| Risk Category | Description |
|---|---|
| Data Privacy | Unauthorized access, use, or disclosure of sensitive data used to train and operate generative AI models. |
| Security | Vulnerability of generative AI systems to attacks, including malicious code injection, data breaches, and system compromise. |
| Bias | Unintentional perpetuation of societal biases present in the training data, leading to unfair or discriminatory outcomes. |
| Misuse | Use of generative AI for malicious purposes, such as creating deepfakes, generating fraudulent content, or spreading misinformation. |
| Intellectual Property | Potential infringement of existing intellectual property rights through the use or output of generative AI. |

Identifying and Assessing Generative AI Risks

Navigating the burgeoning world of generative AI necessitates a proactive approach to risk management. Simply implementing these powerful technologies isn’t enough; understanding and mitigating potential pitfalls is crucial for responsible deployment. This section delves into the critical process of identifying, assessing, and prioritizing risks associated with generative AI. This exploration will cover a spectrum of potential issues, from data-related vulnerabilities to broader societal implications, equipping C-suite executives with the knowledge needed to make informed decisions about generative AI integration.

Potential Risks Associated with Data Usage in Generative AI Models

Data quality and bias are foundational concerns. Generative AI models are trained on massive datasets, and if these datasets contain inaccuracies, biases, or harmful content, the resulting AI models will perpetuate and amplify these issues. This can manifest in various ways, including the generation of harmful or biased content, perpetuation of stereotypes, or the creation of misinformation. Robust data validation and cleansing processes are essential to mitigating these risks.

Methods for Assessing the Likelihood and Impact of Identified Risks

Several methods exist for assessing the likelihood and impact of identified risks. Quantitative risk assessment methodologies, employing statistical models and historical data, provide a structured approach. Qualitative assessments, relying on expert judgment and scenario planning, offer valuable insights into potential future outcomes. A combination of both approaches often provides the most comprehensive understanding of risk profiles.

Examples of Potential Generative AI Risks Specific to Different Industries

The risks associated with generative AI vary significantly across industries. In the financial sector, the risk of generating fraudulent documents or manipulating financial data is substantial. The healthcare industry faces challenges in ensuring the accuracy and ethical use of generated medical information. In the legal field, the risk of creating misleading legal documents is a significant concern.

Understanding these industry-specific vulnerabilities is crucial for tailoring risk mitigation strategies.

Comparison and Contrast of Various Generative AI Risk Assessment Methodologies

Different methodologies for assessing generative AI risks offer varying levels of precision and complexity. Quantitative approaches, while offering numerical scores, may struggle to capture nuanced qualitative factors. Qualitative methods, on the other hand, provide a deeper understanding of contextual issues but may lack the precision of quantitative models. The most effective approach often involves a hybrid methodology, combining quantitative data with expert opinions.

Prioritizing Risks Based on Potential Impact and Likelihood

A risk matrix, which plots the likelihood of each risk against its impact, is a powerful tool for prioritization. Risks with high likelihood and high impact should receive the highest priority in mitigation efforts. Risks with high likelihood and low impact should be monitored closely for potential escalation, and risks with low likelihood but high impact generally warrant contingency planning. Risks with low likelihood and low impact may require less immediate attention.
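The prioritization logic of a risk matrix can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the 1 to 5 scoring scales, the threshold of 3, the quadrant labels, and the sample risks are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); the scale is an assumption
    impact: int      # 1 (negligible) to 5 (severe); the scale is an assumption


def quadrant(risk, threshold=3):
    """Place a risk in one of the four quadrants of the matrix."""
    high_likelihood = risk.likelihood >= threshold
    high_impact = risk.impact >= threshold
    if high_likelihood and high_impact:
        return "mitigate first"
    if high_likelihood:
        return "monitor closely"
    if high_impact:
        return "plan contingency"  # label chosen for this sketch
    return "review periodically"


def prioritize(risks):
    """Sort risks by likelihood x impact, highest score first."""
    return sorted(risks, key=lambda r: r.likelihood * r.impact, reverse=True)


# Hypothetical entries, for illustration only.
risks = [
    Risk("Training data leak", likelihood=2, impact=5),
    Risk("Biased output in customer chatbot", likelihood=4, impact=4),
    Risk("Minor formatting errors in drafts", likelihood=4, impact=1),
    Risk("Obsolete prompt template", likelihood=1, impact=1),
]

for r in prioritize(risks):
    print(f"{r.likelihood * r.impact:>2}  {quadrant(r):<20} {r.name}")
```

A multiplicative score is only one common convention. Organizations that weigh impact more heavily than likelihood may prefer a different ranking function.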

Framework for Identifying and Classifying Risks Associated with Generative AI

A comprehensive framework for identifying and classifying risks associated with generative AI should consider several key categories. These include data quality and bias, model vulnerabilities, security breaches, legal and regulatory compliance, and ethical considerations. Each category should be further subdivided into specific risk factors, enabling a granular understanding of the potential threats.

Comparison of Generative AI Risk Assessment Tools

| Tool Name | Strengths | Weaknesses |
|---|---|---|
| Risk Register | Simple, easy to use, allows for documentation | Limited analytical capabilities, less suited for complex scenarios |
| Monte Carlo Simulation | Sophisticated modeling, captures uncertainty | Computationally intensive, requires substantial data input |
| Decision Tree Analysis | Visual representation of decision paths | Can become complex for intricate problems |
| Expert Judgment | Deep understanding of context | Subjectivity, potentially biased |
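To make the Monte Carlo entry concrete, the sketch below simulates one year of losses for a single risk many times over and summarizes the results. Every number in it is a placeholder assumption (a 10% annual probability and a triangular loss distribution between 50,000 and 1,000,000); in practice these inputs would come from historical data or expert estimates.

```python
import random
import statistics


def simulate_annual_loss(annual_probability, loss_low, loss_mode, loss_high,
                         trials=10_000, seed=42):
    """Return one simulated yearly loss per trial for a single risk.

    In each trial the incident occurs with `annual_probability`; if it does,
    the loss is drawn from a triangular distribution, otherwise it is zero.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    losses = []
    for _ in range(trials):
        if rng.random() < annual_probability:
            # random.triangular takes (low, high, mode) in that order.
            losses.append(rng.triangular(loss_low, loss_high, loss_mode))
        else:
            losses.append(0.0)
    return losses


# Placeholder inputs, not real figures.
losses = simulate_annual_loss(0.10, 50_000, 200_000, 1_000_000)
expected = statistics.mean(losses)
p95 = statistics.quantiles(losses, n=100)[94]  # 95th percentile cut point

print(f"Expected annual loss: {expected:,.0f}")
print(f"95th percentile loss: {p95:,.0f}")
```

The gap between the mean and the 95th percentile is the kind of uncertainty a single-point estimate in a risk register would hide.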

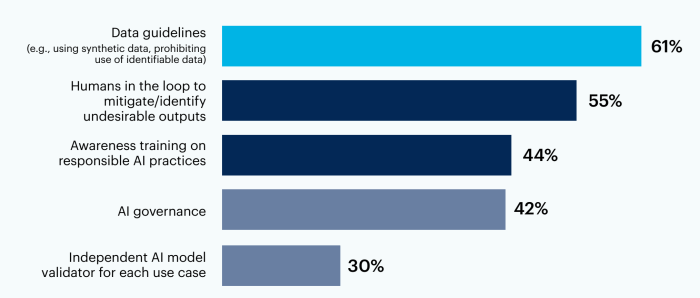

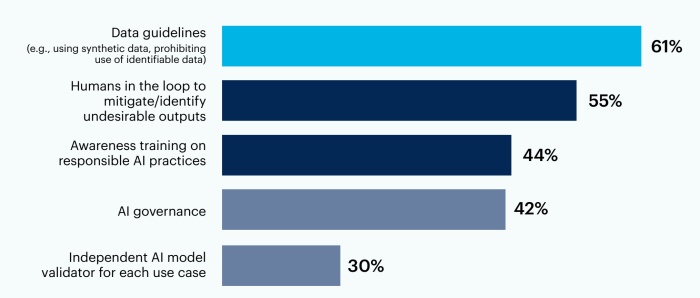

Mitigation Strategies for Generative AI Risks

Navigating the exciting yet complex landscape of Generative AI requires proactive risk management. This involves understanding not just the potential pitfalls but also developing robust strategies to mitigate them. A comprehensive approach encompassing governance, data security, employee training, and best practices is crucial for responsible and effective deployment. Effective mitigation strategies are paramount to harnessing the transformative potential of Generative AI while minimizing the associated risks.

A well-defined framework for risk assessment and response is essential for organizations to confidently explore the opportunities presented by this technology.

Governance and Policies for Generative AI

Establishing clear governance frameworks and policies is critical to managing Generative AI risks. These frameworks should encompass data usage, model development, output validation, and accountability. Policies must define acceptable use, access restrictions, and ethical considerations related to bias and fairness. A comprehensive policy framework acts as a crucial guide for both developers and users, ensuring responsible AI development and deployment.

Data Security and Privacy Measures

Data security and privacy are paramount when dealing with Generative AI. Protecting sensitive data used to train and operate these models is essential. This includes implementing robust encryption, access controls, and data anonymization techniques. Regular security audits and incident response plans should be in place to address potential data breaches. Adherence to relevant data privacy regulations, like GDPR, is critical to avoid legal repercussions.
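As one small illustration of such techniques, the sketch below replaces email addresses in free text with keyed pseudonyms before the text is stored or sent to a model. It assumes a simple regular expression for emails and a secret key managed elsewhere; the function names are hypothetical. Note that this is pseudonymization rather than full anonymization, and under GDPR pseudonymized data is still treated as personal data.

```python
import hashlib
import hmac
import re

# Simplified pattern for illustration; real email matching is more involved.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value, secret_key):
    """Return a stable keyed pseudonym for a value (truncated HMAC-SHA256)."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def redact_emails(text, secret_key):
    """Replace every email address in `text` with a keyed pseudonym."""
    return EMAIL_PATTERN.sub(
        lambda match: f"<email:{pseudonymize(match.group(0), secret_key)}>",
        text,
    )


# The key below is a placeholder; a real key belongs in a secrets manager.
key = b"replace-with-a-managed-secret"
prompt = "Summarize the complaint sent by jane.doe@example.com yesterday."
print(redact_emails(prompt, key))
```

Because the same address always maps to the same pseudonym under a given key, records can still be linked for analysis without exposing the address itself.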

Employee Training and Awareness Programs

Effective Generative AI risk management relies heavily on employee understanding and awareness. Training programs should equip employees with the knowledge to identify potential risks, use the technology responsibly, and report suspicious activities. These programs should cover topics such as bias detection, misinformation generation, and responsible data usage. Empowering employees with this knowledge is a critical component of a robust risk mitigation strategy.

Best Practices for Implementing Generative AI Risk Management Strategies

Implementing Generative AI risk management strategies requires a phased approach. Start with a thorough risk assessment to identify potential vulnerabilities. Develop comprehensive policies and procedures to address the identified risks. Implement robust access controls to limit unauthorized access to sensitive data and models. Establish a dedicated team to monitor and respond to emerging risks.

Continuously review and update your risk management strategies as Generative AI technology evolves.

Preventative Measures Against Data Breaches in Generative AI Systems

A proactive approach to data breaches in Generative AI systems is crucial. Preventing these incidents requires a multifaceted strategy.

| Risk Category | Preventative Measures |
|---|---|
| Unauthorized Access | Strong passwords, multi-factor authentication, regular security audits, role-based access controls |
| Malicious Code Injection | Secure coding practices, vulnerability assessments, regular software updates, intrusion detection systems |
| Data Exfiltration | Data encryption, secure data storage, access logs, data loss prevention tools |
| Insider Threats | Employee training on data security, background checks, monitoring of user activity, whistleblower protection policies |
| Physical Security | Secure data centers, access control measures, environmental controls, physical security systems |

Robust Access Control Mechanisms for Generative AI Tools

Implementing robust access control mechanisms is vital for managing access to Generative AI tools. This includes defining clear roles and responsibilities, implementing multi-factor authentication, and regularly reviewing access permissions. Using granular access control will limit the potential damage from unauthorized access and misuse. Regular auditing of access logs is also a crucial component of a strong access control strategy.
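The combination of role definitions, multi-factor authentication, and access-log auditing described here can be sketched as follows. The role names, permission names, and in-memory log are hypothetical and exist only for this example; a production system would rely on an identity provider and an append-only audit store.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"read_output"},
    "analyst": {"read_output", "submit_prompt"},
    "ml_engineer": {"read_output", "submit_prompt", "fine_tune_model"},
    "admin": {"read_output", "submit_prompt", "fine_tune_model", "manage_access"},
}

access_log = []  # stand-in for an append-only audit store


def is_allowed(user, role, action, mfa_verified):
    """Grant access only if MFA passed and the role includes the action."""
    allowed = mfa_verified and action in ROLE_PERMISSIONS.get(role, set())
    access_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


def denied_attempts(log):
    """Return the log entries an auditor would review first."""
    return [entry for entry in log if not entry["allowed"]]


print(is_allowed("dana", "analyst", "submit_prompt", mfa_verified=True))
print(is_allowed("dana", "analyst", "fine_tune_model", mfa_verified=True))
print(len(denied_attempts(access_log)))
```

Unknown roles receive an empty permission set, so the check denies by default rather than failing open.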

Building a Generative AI Risk Management Program

Establishing a robust generative AI risk management program is crucial for organizations navigating the rapidly evolving landscape of this technology. A well-defined program not only mitigates potential harms but also fosters trust, compliance, and innovation. This program must be adaptable to changing circumstances and evolving risks. A comprehensive generative AI risk management program should go beyond simply identifying risks.

It requires a proactive approach that encompasses risk assessment, mitigation strategies, and ongoing monitoring. This involves establishing clear roles and responsibilities, integrating technology for efficient tracking, and fostering a culture of continuous improvement. This proactive stance ensures organizations are prepared for potential challenges and leverage the benefits of generative AI responsibly.

Developing a Comprehensive Risk Management Program

A comprehensive risk management program for generative AI requires a structured approach. Begin by defining the scope of the program, outlining the specific AI models and applications it covers. This step ensures the program’s relevance and effectiveness. Next, conduct a thorough risk assessment, identifying potential risks across various categories like data privacy, bias, security, and intellectual property infringement.

Prioritize identified risks based on their likelihood and potential impact. Develop mitigation strategies tailored to each risk, incorporating technical solutions, policies, and training programs.

Establishing Clear Roles and Responsibilities

Defining clear roles and responsibilities is paramount for effective risk management. A dedicated risk management team, potentially with cross-functional representation, should be established. This team should be empowered to identify, assess, and mitigate generative AI risks. Roles within this team should be clearly defined, including individuals responsible for data governance, security, compliance, and ethical considerations.

Regular Monitoring and Review

Regular monitoring and review of the risk management program are essential for maintaining its effectiveness. Establish a schedule for periodic reviews, assessing the program’s performance against established metrics. This ensures the program remains aligned with evolving risks and emerging best practices. Regular audits and assessments should be conducted to evaluate the effectiveness of mitigation strategies and identify any gaps or weaknesses in the program.

Key Roles and Responsibilities

| Role | Responsibilities |
|---|---|
| Generative AI Risk Officer | Oversees the entire risk management program, coordinating activities and ensuring compliance. |
| Data Governance Officer | Manages data used by generative AI models, ensuring data quality, security, and compliance with regulations. |
| Security Analyst | Identifies and mitigates security risks associated with generative AI models and systems. |
| Compliance Officer | Ensures compliance with relevant regulations and industry standards. |
| Ethical AI Specialist | Evaluates and addresses potential ethical implications of generative AI applications. |
| Training and Awareness Lead | Develops and implements training programs for employees on generative AI risks and responsible use. |

Ongoing Adaptation and Improvement

Generative AI is constantly evolving, requiring the risk management program to adapt and improve continuously. Establish mechanisms for feedback and suggestions from stakeholders, incorporating new information into the risk assessment and mitigation strategies. The program should be regularly reviewed and updated to reflect changes in technology, regulations, and best practices. This proactive approach ensures the program’s long-term effectiveness.

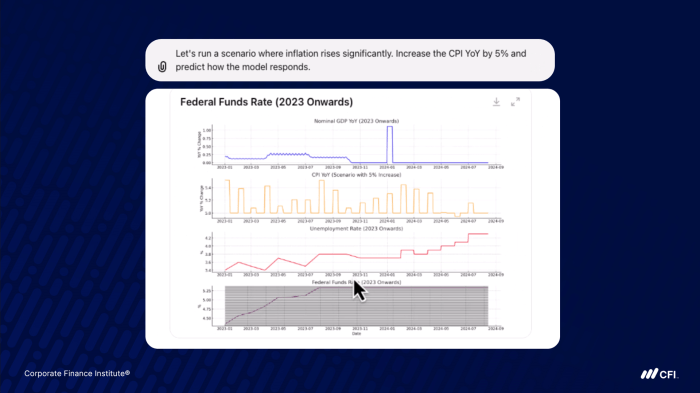

Technology Support for Risk Management

Leveraging technology can significantly enhance a generative AI risk management program. Employ tools for automated risk assessment, monitoring, and reporting. Utilize data analytics to identify patterns and anomalies that might indicate emerging risks. Develop and utilize AI-powered tools to detect biases in training data and outputs. This integration of technology ensures the program is efficient and scalable.
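As a simple example of an automated bias check on model outputs, the sketch below compares the rate of favorable outcomes across groups and computes their ratio. The data is synthetic, the group labels are placeholders, and the 0.8 review threshold is an assumption borrowed from the widely cited "four-fifths" heuristic; a real audit would use several fairness metrics and proper statistical testing.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, favorable) pairs, where
    `favorable` is True when the model output was favorable.
    """
    totals = defaultdict(int)
    favorable_counts = defaultdict(int)
    for group, favorable in records:
        totals[group] += 1
        if favorable:
            favorable_counts[group] += 1
    return {group: favorable_counts[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Synthetic example data, not real results.
records = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # review threshold is an assumption

print(rates, round(ratio, 3), needs_review)
```

A ratio below the threshold does not prove discrimination; it flags the model for the human review and auditing steps described elsewhere in this guide.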

Reporting and Escalation Process

A clear reporting and escalation process is essential for timely responses to generative AI risks. Establish clear thresholds for reporting various risk levels, defining procedures for escalating issues to senior management or relevant regulatory bodies. Implement a system for tracking and managing reported risks, ensuring timely responses and appropriate follow-up. This system should facilitate effective communication and collaboration.
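Reporting thresholds and escalation paths of this kind can be encoded as a small routing table. The sketch below is one possible arrangement: the severity levels, score cut-offs (based on a likelihood x impact score from 1 to 25), recipients, and response times are all illustrative assumptions, not recommended values.

```python
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# Hypothetical policy: (who is notified, hours allowed for a first response).
ESCALATION_POLICY = {
    Severity.LOW: ("risk management team", 72),
    Severity.MEDIUM: ("Generative AI Risk Officer", 24),
    Severity.HIGH: ("senior management", 4),
    Severity.CRITICAL: ("senior management and regulators, if required", 1),
}


def severity_from_score(score):
    """Map a likelihood x impact score (1 to 25) to a severity level."""
    if score >= 20:
        return Severity.CRITICAL
    if score >= 12:
        return Severity.HIGH
    if score >= 6:
        return Severity.MEDIUM
    return Severity.LOW


def route_report(title, score):
    """Build a tracking record that says who to notify and how quickly."""
    severity = severity_from_score(score)
    notify, hours = ESCALATION_POLICY[severity]
    return {
        "title": title,
        "severity": severity.name,
        "notify": notify,
        "respond_within_hours": hours,
    }


report = route_report("Customer data found in model output", score=20)
print(report)
```

Keeping the policy in a single table makes the thresholds easy to review and update as regulations or risk appetite change.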

Case Studies and Real-World Examples

Navigating the complexities of generative AI requires learning from both successes and failures. Examining real-world implementations provides invaluable insights into effective risk management strategies, highlighting the crucial role of adaptation and continuous learning in this rapidly evolving field. This section explores key case studies and examples to equip C-suite executives with practical knowledge for building robust generative AI risk management programs. Understanding the diverse landscape of generative AI applications is essential.

From large language models powering customer service chatbots to sophisticated image generation tools used in design, the applications are as varied as the organizations employing them. This necessitates a tailored approach to risk management, recognizing that a “one-size-fits-all” strategy is unlikely to be effective.

Successful Generative AI Risk Management Strategies

A variety of organizations have successfully implemented generative AI risk management strategies. These strategies often incorporate a combination of technical safeguards, ethical guidelines, and robust monitoring processes. A key factor in success is proactive risk identification and assessment, followed by well-defined mitigation strategies.

- Financial Institution Case Study: A major financial institution implemented a rigorous process for identifying and mitigating bias in its generative AI-powered loan application system. This involved meticulous data analysis, auditing of model outputs, and the establishment of human oversight procedures. The outcome was a more equitable lending process while safeguarding against potential discrimination.

- Retailer Case Study: A leading retailer utilized generative AI to personalize customer experiences. They proactively addressed potential privacy risks by implementing strong data anonymization protocols and user consent mechanisms. This ensured customer data protection while enhancing the customer journey.

Unsuccessful Implementations and Key Lessons Learned

Unfortunately, not all implementations have been successful. Lessons learned from failures offer valuable insights into pitfalls to avoid.

- Misaligned Expectations: A company that introduced generative AI to streamline their marketing efforts experienced setbacks due to unrealistic expectations. They underestimated the need for significant retraining and support for their marketing teams, leading to confusion and reduced efficiency. This highlights the importance of clear communication and comprehensive training programs when integrating new technologies.

- Insufficient Data Security: An organization relying on generative AI for sensitive data processing failed to implement adequate data security measures. This resulted in a data breach, exposing confidential information and causing reputational damage. The case emphasizes the critical role of data encryption, access controls, and regular security audits.

Impact of Regulatory Changes

Regulatory changes significantly impact generative AI risk management strategies. Organizations must remain informed and adaptable to new regulations and compliance standards.

- Data Privacy Regulations: The increasing emphasis on data privacy, reflected in regulations such as GDPR and CCPA, necessitates robust data protection measures. Organizations must adhere to strict guidelines regarding data collection, storage, and usage.

- AI Bias Regulations: Emerging regulations aimed at mitigating bias in AI systems require organizations to develop mechanisms for identifying and addressing potential biases in their generative AI models. This includes the development of diverse datasets, continuous monitoring of model outputs, and ongoing evaluation for fairness and equity.

Table of Successful Risk Management Strategies Across Industries

The following table showcases successful risk management strategies employed across different industries.

| Industry | Risk | Mitigation Strategy |
|---|---|---|
| Financial Services | Bias in loan applications | Diverse training data, model auditing, human oversight |
| Retail | Customer data privacy | Data anonymization, user consent mechanisms, enhanced security protocols |
| Healthcare | Medical misinformation | Fact-checking tools, human verification of generated content, clear disclaimers |

Educating Stakeholders on Real-World Examples

Effective communication is crucial for ensuring buy-in from stakeholders. Real-world examples can serve as powerful tools for educating and engaging stakeholders.

- Case Study Presentations: Sharing successful and unsuccessful case studies through presentations, workshops, and reports can effectively educate stakeholders on the importance of risk management.

- Interactive Demonstrations: Demonstrations of both successful and unsuccessful implementations can help stakeholders visualize the potential risks and benefits of generative AI.

Future Trends and Considerations

Generative AI is rapidly evolving, presenting both exciting opportunities and significant risks. As the technology matures, the need for robust risk management strategies becomes increasingly critical. This section delves into emerging trends, potential future threats, and proactive measures to navigate the evolving landscape of generative AI. Understanding the complexities of generative AI is essential for anticipating potential issues and developing effective mitigation strategies.

Proactive risk management, coupled with a forward-thinking approach, is crucial for organizations to harness the benefits of generative AI while minimizing potential harm.

Emerging Trends in Generative AI Risk Management

The landscape of generative AI is dynamic, requiring continuous adaptation in risk management strategies. New applications and capabilities emerge frequently, necessitating ongoing assessment and adjustments. Trends include the increasing sophistication of deepfakes, the potential for generative AI to automate malicious activities, and the growing concern around the ethical implications of AI-generated content.

Potential Future Risks and Threats

Generative AI’s ability to create realistic content presents several potential risks. The technology can be exploited for malicious purposes, such as creating fraudulent documents, generating convincing fake news articles, and producing deepfakes for identity theft or social manipulation. Furthermore, biases embedded in training data can be amplified, leading to discriminatory or harmful outputs.

Proactive Risk Management Strategies

Proactive risk management is crucial in the evolving AI landscape. This involves continuous monitoring of emerging trends, staying informed about new vulnerabilities, and adapting risk management frameworks accordingly. Organizations should implement robust security measures to protect against misuse and develop strategies to identify and mitigate bias in generative AI models.

Anticipating and Addressing Ethical Dilemmas

Generative AI raises numerous ethical dilemmas, demanding careful consideration. Questions surrounding ownership of AI-generated content, the potential for misinformation, and the responsible use of the technology need careful attention. Clear guidelines and ethical frameworks are needed to address these issues.

Examples of Generative AI Used for Malicious Purposes

Generative AI tools have been used to create realistic deepfakes of public figures for malicious purposes. These can be used to spread misinformation, manipulate public opinion, or even impersonate individuals for fraudulent activities. The technology’s ability to generate convincing synthetic audio and video adds another layer of complexity to potential misuse.

Future Regulations Impacting Generative AI Risk Management

Governments worldwide are actively exploring regulations to address the risks posed by generative AI. These regulations may encompass content moderation, data privacy, and liability issues. Staying informed about evolving regulatory landscapes is crucial for organizations to comply with future legal requirements.

Key Factors for Long-Term Generative AI Risk Management

Effective long-term risk management requires a multi-faceted approach. Key factors include:

- Continuous Monitoring and Evaluation: Regularly assessing and updating risk profiles in response to technological advancements and emerging threats is essential.

- Collaboration and Knowledge Sharing: Industry collaboration and knowledge sharing are crucial for identifying emerging trends and best practices.

- Robust Security Measures: Implementing strong security protocols to prevent misuse and unauthorized access is critical.

- Ethical Guidelines and Frameworks: Developing clear ethical guidelines and frameworks for responsible AI development and deployment is essential for mitigating risks.

- Investment in Research and Development: Continued research and development in AI detection and mitigation techniques is essential for staying ahead of potential threats.

Conclusion

In conclusion, The C-Suite Guide to GenAI Risk Management offers a structured approach to understanding and mitigating the risks inherent in generative AI. By addressing potential vulnerabilities, establishing proactive risk management strategies, and fostering a culture of continuous learning, organizations can effectively harness the transformative power of this technology while mitigating potential harm. The guide provides a practical roadmap for the C-suite to navigate the complexities of generative AI and position their organizations for success in the evolving technological landscape.