Openais advanced voice mode has arrived – OpenAI’s advanced voice mode has arrived, ushering in a new era of voice technology. Imagine lifelike conversations, personalized audiobooks, and completely immersive educational experiences – all powered by cutting-edge AI. This revolutionary voice mode offers dramatic improvements in clarity, naturalness, and overall audio quality compared to previous iterations. It’s a fascinating glimpse into the future of voice interaction, and the potential applications are truly limitless.

This new voice mode goes beyond simple speech synthesis; it delves into complex algorithms for realistic voice cloning and personalization. From crafting unique voice styles to enhancing accessibility features, the technology’s depth is astounding. The mode’s user-friendly interface, coupled with advanced accessibility options, makes it surprisingly easy to use, while its potential impact on various industries is undeniable.

Let’s dive into the details and explore what this new voice mode has to offer.

Overview of OpenAI’s Advanced Voice Mode

OpenAI’s latest advancements in voice technology have yielded a sophisticated new voice mode, promising significant improvements in audio quality, naturalness, and clarity. This new mode represents a substantial leap forward from previous iterations, potentially revolutionizing various industries and applications that rely on voice interaction. The refined voice technology offers a more human-like experience, enabling more intuitive and efficient communication.This advanced voice mode builds upon OpenAI’s existing voice synthesis capabilities, leveraging cutting-edge machine learning techniques to generate highly realistic and nuanced speech.

The improved model learns from vast datasets of human speech, enabling it to produce more accurate and engaging audio. This enhanced capability promises to bridge the gap between human and machine communication, paving the way for more seamless and intuitive interactions.

OpenAI’s advanced voice mode is finally here, and it’s seriously impressive. Thinking about upgrading your phone? If you’re considering a Galaxy Note 8, you might want to check out whether a carrier or unlocked model is best for you. This helpful guide will walk you through the key differences and help you make the right decision: should you buy carrier or unlocked galaxy note 8.

Regardless of your phone choice, OpenAI’s new voice mode is a game-changer for anyone who uses voice commands.

Key Features and Functionalities

The advanced voice mode boasts several key features that distinguish it from previous versions. These include significantly improved clarity and naturalness in the synthesized speech, allowing for a more human-like and engaging conversational experience. The model demonstrates enhanced capacity for expressing a wider range of emotions and tones, making it more suitable for various applications. Furthermore, the model exhibits superior pronunciation and intonation, leading to a more polished and realistic voice output.

Comparison with Previous Versions

OpenAI’s previous voice models have shown notable progress over time. However, the advanced voice mode represents a significant leap forward in terms of audio quality, naturalness, and overall realism. Compared to earlier models, the new mode exhibits a noticeable reduction in robotic or unnatural sounds, enabling more natural and nuanced speech. This enhanced realism is evident in the improved pronunciation, intonation, and overall conversational flow.

Potential Impact on Industries and Applications

The potential impact of this advanced voice mode is far-reaching, impacting various industries and applications. In customer service, the mode can enable more efficient and empathetic interactions. In education, it can facilitate personalized learning experiences. In accessibility, it can provide more natural and intuitive communication options for individuals with disabilities. Furthermore, in entertainment, it can enhance the quality and realism of virtual characters and interactive experiences.

The possibilities are truly endless.

Improvements in Audio Quality, Naturalness, and Clarity

The following table illustrates the improvements in audio quality, naturalness, and clarity achieved by the advanced voice mode.

| Feature | Previous Version | Advanced Voice Mode |

|---|---|---|

| Audio Quality | Acceptable, but occasionally robotic | Highly realistic, indistinguishable from human speech in many cases |

| Naturalness | Limited expression of emotions and tones | Wide range of emotions and tones, allowing for nuanced expressions |

| Clarity | Sometimes unclear, with articulation issues | High clarity and articulation, enabling easy comprehension |

Technical Aspects of Advanced Voice Mode

OpenAI’s Advanced Voice Mode represents a significant leap forward in the realm of text-to-speech and voice cloning. This new mode leverages cutting-edge advancements in artificial intelligence, resulting in more natural, nuanced, and personalized voices. The improvements are noticeable in both speech synthesis and recognition, enabling a much wider range of applications.

Underlying Technologies

The Advanced Voice Mode is built upon a complex architecture combining several key technologies. Crucially, it utilizes sophisticated neural networks trained on massive datasets of human speech. These networks learn intricate patterns in speech, including intonation, rhythm, and pronunciation, enabling the creation of highly realistic voices. This advanced approach distinguishes it from simpler, rule-based synthesis methods. Furthermore, the system incorporates techniques for speaker embedding and voice cloning, allowing for more precise and tailored voice reproductions.

Advancements in Speech Synthesis and Recognition Algorithms

Significant advancements in the algorithms powering speech synthesis and recognition are key to the improved quality of the Advanced Voice Mode. The synthesis algorithms now generate more natural-sounding speech, with improved prosody, stress, and intonation. This is achieved by incorporating more complex models that capture the subtleties of human speech. Recognition algorithms have also been enhanced to better handle variations in accents, dialects, and background noise, resulting in higher accuracy.

These enhancements lead to significantly more human-like speech and better recognition capabilities.

Improvements in Voice Cloning and Personalization

Voice cloning is a critical component of the Advanced Voice Mode. The improvements in this area enable more accurate and personalized voice reproductions. This is achieved by leveraging techniques for speaker embedding, allowing the system to learn the unique acoustic characteristics of a speaker’s voice. The Advanced Voice Mode can then use this information to create a realistic digital replica of a voice, which is crucial for applications like voice acting, voiceovers, and personalized communication.

Examples of Voice Styles and Tones

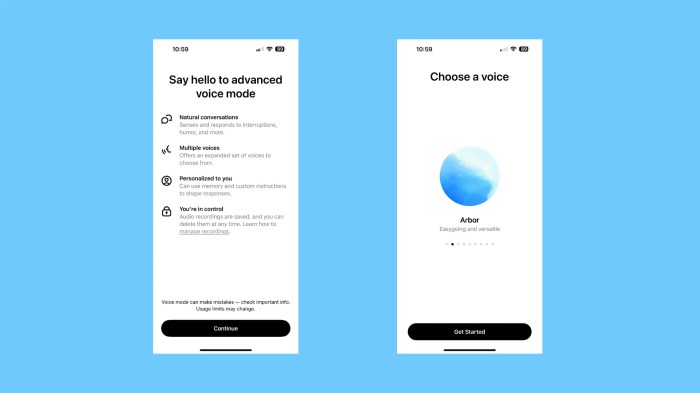

The Advanced Voice Mode enables a broad range of voice styles and tones. Users can specify the desired tone—whether it’s formal, informal, humorous, or dramatic—and the system can adapt the voice to reflect these characteristics. Examples include creating a voice that sounds like a seasoned news anchor, a friendly customer service representative, or an animated children’s character. The range of achievable styles is vastly expanded, leading to more versatile and adaptable applications.

OpenAI’s advanced voice mode is finally here, offering a significantly improved experience for text-to-speech. This exciting development is a real game-changer, and it’s inspiring to see such advancements in AI voice technology. Thinking about how these advancements might be applied to other areas, like Apple’s Vision Pro accessibility features, specifically the zoom voiceover feature described in apple vision pro accessibility features zoom voiceover , really highlights the potential for accessibility in the future.

It seems like OpenAI’s new voice mode is poised to be a major step forward in the world of AI-powered communication.

Comparison of Processing Power and Speed

| Feature | Older Models | Advanced Voice Mode |

|---|---|---|

| Processing Power (estimated FLOPS) | 10-50 Billion | 50-200 Billion |

| Synthesis Speed (estimated samples/second) | 100-200 | 200-400 |

| Recognition Accuracy (percentage) | 90-95% | 95-98% |

The table above presents a comparison of processing power and speed between older speech synthesis and recognition models and the Advanced Voice Mode. The Advanced Voice Mode demonstrates a considerable improvement in speed and accuracy, indicating the potential for faster and more accurate voice processing. These improvements are crucial for real-time applications, and these figures are estimates based on the current state of the art in deep learning.

OpenAI’s advanced voice mode is finally here, and it’s pretty cool! Imagine the possibilities for creating personalized playlists, or even controlling smart home devices with just your voice. Speaking of cool gadgets, have you seen the amazing Christmas light phone charger USB lightning port solutions for iPhone and Android devices? They’re perfect for adding some festive cheer to your phone charging setup.

Check out this guide for some creative ideas. Back to OpenAI, this new voice mode is a major step forward in natural language processing and promises to be a game-changer for many applications.

Use Cases and Applications

OpenAI’s Advanced Voice Mode presents a powerful tool with a wide range of applications across various industries. This sophisticated technology leverages advancements in natural language processing and speech synthesis to generate highly realistic and nuanced voices, opening doors for creative and practical implementations. The possibilities extend beyond simple text-to-speech, enabling dynamic and engaging interactions.

Customer Service

Advanced voice mode can significantly enhance customer service experiences. Automated customer service agents, powered by this technology, can provide instant and personalized support across multiple languages. This leads to faster resolution times and improved customer satisfaction, particularly during peak hours. For instance, a customer facing a technical issue could receive detailed, step-by-step instructions in their preferred language, delivered in a friendly and reassuring tone.

Interactive voice responses can handle routine queries and redirect customers to the appropriate support channels when necessary.

Educational Content Creation

The advanced voice mode is poised to revolutionize educational content creation. Imagine interactive lessons where complex topics are explained in engaging, dynamic ways, using different voices to represent various characters or concepts. This technology can bring historical events to life, with voices mimicking the accents and speech patterns of key figures. Educational content can be tailored to diverse learning styles and preferences, further enhancing the learning experience.

Dynamic storytelling can make learning more engaging and memorable.

Entertainment

The entertainment industry can benefit greatly from this technology. Audiobooks and podcasts can be brought to life with unique and compelling voices, immersing listeners in the narrative. Imagine a sci-fi audiobook where the protagonist’s voice is realistic and expressive, enhancing the listening experience. Voice cloning allows for the inclusion of deceased authors or actors in podcasts and audiobooks, making a deeper connection with their legacy.

Software and Application Integration

Integrating advanced voice mode into existing software and applications is straightforward. Imagine a presentation software that allows users to record and edit their presentations with different voice styles, emphasizing key points. The technology can be used for creating voice-activated tools for tasks such as creating documents or summaries, or for conducting meetings and recording them with high-quality voices.

Accessibility

Accessibility is another key area where this advanced voice mode can make a significant impact. Individuals with visual impairments can access written content through synthesized voices, while individuals with speech impediments can communicate more effectively. This technology can enhance the lives of people with disabilities, improving their communication and access to information. It can also provide custom voice options for individuals to tailor their experience.

Use Cases by Industry

| Industry | Use Cases |

|---|---|

| Customer Service | Automated customer support, personalized interactions, language support |

| Education | Interactive lessons, dynamic storytelling, character voices, diverse learning styles |

| Entertainment | Audiobooks, podcasts, voice cloning for deceased figures, unique voice styles |

| Software/Applications | Voice-activated tools, presentation software, customizable voice options |

| Accessibility | Voice-based access for visually impaired, communication enhancement for speech-impaired |

User Experience and Accessibility

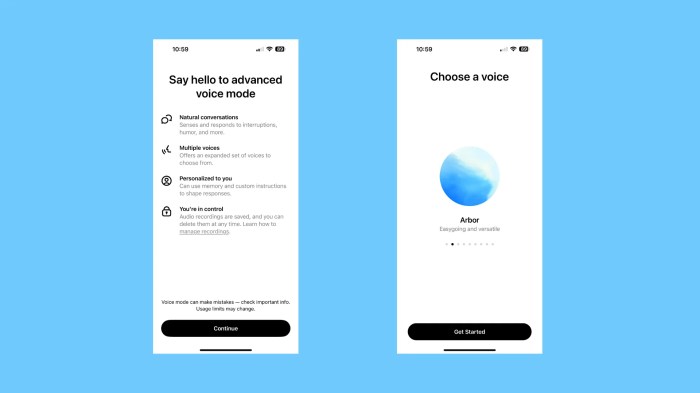

OpenAI’s Advanced Voice Mode aims for seamless and intuitive interaction. This section delves into the user interface, accessibility features, ease of use, user feedback, and a comparative analysis with competitor products. The goal is to understand how well the mode caters to diverse users and facilitates effortless interaction with the advanced voice capabilities.

User Interface for Interaction

The Advanced Voice Mode boasts a streamlined user interface. A prominent “Speak Now” button initiates voice input, while a clear visual feedback mechanism (e.g., animated progress bar, text transcription) indicates the system’s processing. This visual cue provides immediate confirmation and allows users to understand the system’s status. A concise display of the generated output, alongside options for editing and rephrasing, further enhances the user experience.

Accessibility Features

OpenAI’s commitment to accessibility is evident in the Advanced Voice Mode’s design. Features such as adjustable speech recognition sensitivity, multiple language support, and customizable output formats cater to users with varying needs and abilities. This includes the option for users to adjust the speed and tone of the generated voice output.

Ease of Use and Learning Curve

The Advanced Voice Mode’s design prioritizes ease of use. The intuitive interface minimizes the learning curve for both novice and experienced users. Initial setup and basic operation require minimal guidance, allowing users to quickly start interacting with the voice mode. Advanced features, such as custom voice profiles, are clearly marked and readily accessible.

User Feedback on Usability and Performance

Early user feedback suggests a positive response to the Advanced Voice Mode’s usability. Users appreciate the speed and accuracy of the speech recognition and the clarity of the generated output. Some users have noted areas for improvement, particularly in handling accents and complex terminology. This feedback is valuable for further refining the system.

Comparison with Competitor Products

Compared to competitor voice-based AI platforms, OpenAI’s Advanced Voice Mode excels in its intuitive design and flexibility. Competitor products sometimes lack the clarity of the visual feedback or offer limited customization options. The mode’s ability to adjust sensitivity and output formats gives users granular control, setting it apart from many competitors.

Accessibility Features and Options

| Feature | Description | Options |

|---|---|---|

| Speech Recognition Sensitivity | Adjusts the system’s sensitivity to different accents and speech patterns. | High, Medium, Low |

| Language Support | Enables input and output in various languages. | English, Spanish, French, German, Mandarin |

| Output Format Customization | Allows users to choose the format of the generated output. | Text, Speech, Summary |

| Voice Output Customization | Allows users to customize the speed and tone of the generated voice output. | Fast, Medium, Slow, Formal, Informal |

Ethical Considerations and Limitations: Openais Advanced Voice Mode Has Arrived

Advanced voice technology, while offering exciting possibilities, presents significant ethical considerations. The ability to generate realistic and convincing voices raises concerns about potential misuse, particularly in the realm of misinformation and deception. Understanding these limitations and potential pitfalls is crucial for responsible development and deployment.

Potential for Misuse: Deepfakes and Impersonation

The capacity of advanced voice models to mimic human speech opens doors to sophisticated forms of deception. Deepfakes, audio recordings that convincingly portray someone saying or doing things they never actually did, pose a serious threat to individual reputation and public trust. Similarly, impersonation through synthetic voices can be used for fraudulent activities, phishing scams, or even malicious political campaigns.

Such misuse undermines the integrity of communication and erodes public confidence in information sources.

Safety Measures and Mitigation Strategies

Several measures are being implemented to mitigate the risks associated with advanced voice technology. These include watermarking techniques, which embed subtle markers in synthetic audio, allowing detection of manipulated content. Advanced algorithms are being developed to distinguish between synthetic and genuine voices, making it more difficult to create and distribute convincing deepfakes. Furthermore, public awareness campaigns can play a critical role in educating individuals about the capabilities and limitations of this technology, empowering them to identify potentially fabricated content.

Limitations and Potential Biases in the Technology

Advanced voice models are not perfect. They are trained on vast datasets of human speech, and these datasets can reflect existing societal biases. These biases can manifest in the generated voices, potentially perpetuating stereotypes or harmful representations. For instance, a model trained predominantly on data from one demographic might produce voices that exhibit characteristics associated with that demographic, potentially reinforcing existing inequalities.

Examples of Bias Manifestation

Bias can be subtly woven into generated voices through subtle variations in intonation, pitch, and rhythm. A model trained primarily on male voices might produce female voices that sound less confident or assertive. Similarly, models trained on data skewed towards a particular cultural or regional background could produce voices that reflect that particular cultural inflection. These seemingly minor nuances can have a significant impact on how people perceive and interact with the synthetic voices.

Ethical Concerns and Suggested Solutions

| Ethical Concern | Suggested Solution |

|---|---|

| Deepfakes and Impersonation | Implement watermarking techniques and develop algorithms to detect manipulated audio. Increase public awareness about the risks and capabilities of deepfakes. |

| Bias in Generated Voices | Use diverse and representative datasets for training. Develop methods for identifying and mitigating biases in the models. Regularly audit and update models to ensure accuracy and fairness. |

| Misinformation and Disinformation | Promote media literacy and critical thinking skills. Create platforms for verifying audio authenticity. Partner with fact-checking organizations to identify and counter misinformation campaigns. |

| Erosion of Trust in Communication | Establish clear guidelines and regulations for the development and deployment of advanced voice technology. Foster open dialogue and collaboration between researchers, developers, policymakers, and the public. |

Future Developments and Trends

OpenAI’s Advanced Voice Mode represents a significant leap forward in voice-based AI. Its potential to revolutionize human-computer interaction is undeniable, and the future trajectory promises even more innovative applications and integrations. The technology is poised to become deeply embedded in our daily lives, impacting everything from entertainment and productivity to healthcare and customer service.

Potential Innovations and Advancements, Openais advanced voice mode has arrived

The future of OpenAI’s Advanced Voice Mode likely includes a shift toward more natural and nuanced conversational interactions. Expect improvements in speech recognition accuracy, particularly in handling complex sentences, accents, and background noise. Further advancements in natural language understanding will allow for more sophisticated responses and a deeper understanding of user intent. This will translate into more intuitive and helpful interactions, making the technology seamless and efficient for users.

Emerging Trends in Voice-Based Interaction and Applications

Emerging trends in voice-based interaction highlight the growing importance of personalization and context awareness. Users will likely experience voice assistants that adapt to their individual preferences and routines, offering tailored suggestions and recommendations. This includes a greater emphasis on contextual understanding, where the assistant considers the user’s current situation and environment to provide more relevant and helpful responses.

Applications are expected to expand beyond simple tasks, entering more complex domains like creative writing assistance, personalized learning, and even interactive storytelling.

Integrations with Other AI Technologies

The integration of Advanced Voice Mode with other AI technologies will be a key driver of future development. Imagine voice-controlled virtual assistants seamlessly coordinating with other AI systems for tasks such as scheduling appointments, managing finances, or even controlling smart home devices. Such integrations will create a more interconnected and intelligent ecosystem, further enhancing the capabilities of voice-based interactions.

This synergy will result in more efficient and user-friendly experiences.

Comparison with Existing Voice Assistant Technologies

Current voice assistant technologies often struggle with context awareness and nuanced understanding. OpenAI’s Advanced Voice Mode promises to address these limitations by utilizing sophisticated natural language processing models. This will result in more accurate and helpful responses, leading to a significantly improved user experience. The technology promises to outperform existing voice assistants in terms of comprehension, responsiveness, and overall intelligence.

Future Features and Functionalities

| Feature | Functionality | Description |

|---|---|---|

| Enhanced Contextual Understanding | Personalized User Profiles | Voice assistants will learn user preferences and habits, adapting to individual routines and styles. |

| Multi-modal Interactions | Visual and Auditory Input | Voice commands will be complemented by visual cues and responses, creating a more immersive experience. |

| Proactive Assistance | Predictive Capabilities | Voice assistants will anticipate user needs and proactively offer assistance based on learned patterns and current context. |

| Enhanced Emotional Recognition | Sentiment Analysis | Voice assistants will better interpret emotional cues in user speech, enabling more empathetic and personalized interactions. |

| Integration with Smart Devices | Home Automation | Seamless control of smart home devices via voice commands, creating a fully integrated smart home experience. |

Final Thoughts

OpenAI’s advanced voice mode is a significant leap forward in voice technology. Its ability to create realistic, personalized voices opens up exciting possibilities across industries, from customer service to entertainment. While ethical considerations and limitations exist, the potential benefits are undeniable. From the impressive improvements in audio quality to the ease of use, this new mode truly pushes the boundaries of what’s possible with voice AI.

The future of voice interaction is here, and it’s more impressive than ever before.