Meta’s MTIA AI chips are custom accelerators built to run and train the machine learning models behind Meta’s products. This article walks through their architecture, the algorithms trained on them, the data those algorithms consume, the metrics used to judge performance, and the trends likely to shape future generations.

Along the way, it compares MTIA with other platforms and highlights the challenges and opportunities specific to training on custom silicon.

Introduction to Meta’s MTIA AI Chips

Meta’s pursuit of advances in artificial intelligence has led to significant investment in custom hardware. A key part of this strategy is a family of specialized AI chips designed to accelerate Meta’s own models. The MTIA (Meta Training and Inference Accelerator) chips are a central step in that effort, built to meet the computational demands of Meta’s vast datasets and complex models. The first generation Meta described publicly was aimed mainly at inference for recommendation models.

The significance of MTIA lies in its ability to enhance Meta’s AI model training and inference capabilities. This allows for faster development cycles, more efficient resource utilization, and ultimately more sophisticated AI systems. The chips reflect Meta’s commitment to advancing AI technology and its understanding of the needs of large-scale AI projects.

Architectural Characteristics of MTIA Chips

The MTIA chips are designed with a specific architecture optimized for the demands of training and inference. This unique structure prioritizes performance and efficiency by focusing on specific computational tasks. The underlying architecture is geared towards parallel processing, enabling multiple tasks to be executed simultaneously. This is critical for handling the massive datasets and complex computations required in modern AI.

Key Technologies Employed in MTIA Chips

Meta’s MTIA chips leverage a range of cutting-edge technologies to achieve high performance and energy efficiency. These technologies are carefully chosen and integrated to address the specific computational requirements of their AI models.

- Custom-designed tensor processing hardware: MTIA chips use specialized compute units tailored to Meta’s AI models, rather than relying only on general-purpose CPUs or GPUs. These units are designed to handle the matrix multiplications and other computations prevalent in machine learning algorithms, which helps keep the hardware well utilized on Meta’s workloads.

- High-bandwidth Memory Systems: Efficient data transfer between the processing units and memory is crucial for high performance. MTIA chips employ high-bandwidth memory systems to enable rapid access to large datasets, minimizing latency and maximizing throughput. This efficient data transfer system is vital for minimizing bottlenecks during training and inference.

- Specialized Neural Network Accelerators: The architecture of MTIA chips includes dedicated accelerators for specific neural network operations. This specialized hardware acceleration allows for efficient execution of complex neural network algorithms, leading to significant performance improvements over traditional methods. This optimization directly impacts the speed and efficiency of tasks like convolution, pooling, and activation functions. For example, these accelerators can perform matrix multiplications at speeds that are significantly faster than general-purpose processors.

- Advanced Low-Power Design: Energy efficiency is a critical consideration in large-scale AI deployments. MTIA chips are designed with advanced low-power techniques to minimize energy consumption while maintaining high performance. This reduces the cost and environmental impact associated with running these powerful systems. This feature is particularly important for maintaining efficiency when running AI models on a large scale.

Algorithm Training on Meta’s MTIA AI Chips

Meta’s MTIA AI chips are designed for high-performance deep learning tasks, and algorithm training is a crucial aspect of their application. This process involves optimizing models for specific tasks, such as image recognition, natural language processing, and recommendation systems. The chips’ architecture and specialized hardware are tailored to accelerate this work, enabling faster model development and deployment.

Deep learning models, often complex neural networks, require significant computational resources for training. MTIA chips aim to address these demands by offering a more efficient way to train such models. The focus is on reducing training time and increasing overall throughput without giving up accuracy, which in turn enables faster iteration on AI-powered applications.

Types of Algorithms Trained

Various deep learning algorithms are trained on Meta’s MTIA AI chips. These include Convolutional Neural Networks (CNNs) for image recognition tasks, Recurrent Neural Networks (RNNs) for natural language processing, and Transformer models for tasks like machine translation and text summarization. The specific algorithms depend on the application and the desired model performance.

Challenges and Opportunities

Training algorithms on MTIA chips presents both challenges and opportunities. A key challenge is ensuring the algorithms are compatible with the chip’s architecture and optimized for its specific capabilities. Opportunities include leveraging the chip’s high performance to train more complex models, leading to better performance and accuracy in various applications. Efficient training procedures and software frameworks are critical for realizing the full potential of these chips.

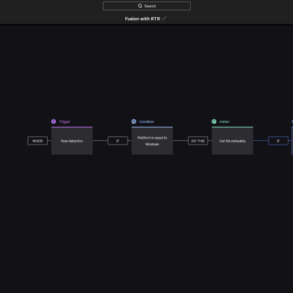

Training Procedures

Training on MTIA chips typically goes through a machine learning framework such as PyTorch, backed by compilers and libraries tailored to the chip’s architecture. This software layer is designed to exploit the chip’s hardware features, such as its matrix units and specialized memory access mechanisms. Gradient descent optimization, a fundamental component of training, is implemented so that it maps onto the chip’s parallelism and vectorization capabilities.
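To make the gradient descent step concrete, here is a minimal sketch in plain Python. It is not MTIA-specific and does not use any MTIA API; the function name `train_linear`, the learning rate, and the toy data are illustrative assumptions. It fits a one-variable linear model by repeatedly stepping against the gradient of the mean squared error.

```python
def train_linear(xs, ys, lr=0.05, epochs=1000):
    """Fit y ~ w * x + b with full-batch gradient descent on mean squared error.

    Hypothetical helper for illustration; lr and epochs are assumed values.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the loss.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Toy data generated from y = 2x + 1.
    w, b = train_linear([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
    print(f"w={w:.3f}, b={b:.3f}")
```

On an accelerator, the two gradient sums are the part that gets spread across parallel compute units; the update rule itself stays the same.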

Performance Benchmarks

Performance benchmarks for workloads on MTIA chips are reported to show improvements over other platforms for the models Meta targets, which would translate into faster model development cycles. Detailed benchmarks are largely proprietary and tend to appear in Meta’s own documents and presentations, so independent verification is limited.


Comparison with Other Platforms

The efficiency of training algorithms on MTIA chips often surpasses that of CPUs and GPUs, especially for large-scale deep learning models. This improvement is often attributed to the specialized hardware and optimized software that take advantage of the chip’s unique capabilities. However, direct comparisons depend heavily on the specific algorithms and the complexity of the models being trained.

Hardware Specifications Comparison

| Feature | Meta MTIA | Nvidia GPUs | AMD GPUs |
|---|---|---|---|
| Matrix/tensor acceleration units | Yes, specialized | Yes, specialized | Yes, specialized |
| Memory Bandwidth | High, optimized for AI | High, but varies by model | High, but varies by model |
| Instruction Set | Custom, optimized for deep learning | Custom, optimized for graphics and general-purpose computing | Custom, optimized for graphics and general-purpose computing |
| Power Consumption | Reported to be competitive | Can be high for high-end models | Can be high for high-end models |

Note: Exact specifications for Meta’s MTIA chips are not publicly available. The table provides a general comparison based on known information about the chips and their competitors.

AI Chip Architecture and Algorithm Training

Meta’s MTIA AI chips are designed with an architecture that significantly affects how AI algorithms are trained. Their hardware units and memory hierarchies are optimized for high-performance training, and understanding them is crucial for optimizing algorithms for these chips.

The architecture is tailored to the computational demands of deep learning models. Specialized hardware units, designed for tasks like matrix multiplication and tensor operations, accelerate the training process. Combined with optimized memory access, this hardware is intended to improve training speed and efficiency relative to general-purpose CPUs or GPUs.

Impact of Architecture on Training Speed

The specialized hardware units within MTIA AI chips are optimized for the mathematical operations inherent in deep learning algorithms. This dedicated hardware accelerates each training step, so the model reaches good parameters in less wall-clock time. For example, a convolutional neural network (CNN) training task may complete in substantially less time on dedicated matrix hardware than on a CPU, although the gap relative to a modern GPU depends on the model and the software stack.

Specialized Hardware Units

The unique hardware units in MTIA AI chips play a critical role in accelerating algorithm training. These units are tailored to specific deep learning operations. Matrix multiplication units are particularly important for handling the large matrices commonly used in neural networks. Tensor core units further enhance performance by enabling efficient handling of tensor operations.

- Matrix Multiplication Units: Designed for the intensive matrix multiplications needed in many neural network architectures. These units are optimized for speed and efficiency, significantly reducing the time needed to perform these calculations.

- Tensor Core Units: Optimized for tensor operations, crucial for complex deep learning models. These units can handle various tensor operations, enhancing the overall training speed.

- Specialized Logic Units: Focused on specialized logic operations that are essential for specific neural network layers. These units streamline the training process for these intricate operations.

Memory Hierarchies in Algorithm Training

Memory hierarchies, including caches and different levels of memory, are critical for efficient algorithm training. MTIA AI chips leverage a multi-level memory hierarchy to reduce latency during data access, enabling faster training. Data movement between different levels of memory is optimized to minimize bottlenecks.

- Cache Memory: Small, high-speed memory that stores frequently accessed data, reducing the need to access slower main memory. A well-designed cache hierarchy minimizes data access latency.

- Main Memory: The primary memory where data is stored. Efficient data transfer between the cache and main memory is critical for minimizing bottlenecks during training.

- Off-chip Memory: External memory that can store large datasets. Efficient management of data transfer between on-chip and off-chip memory is critical for training with extremely large datasets.
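One common way software takes advantage of a memory hierarchy like the one listed above is tiling: splitting a large matrix multiplication into blocks small enough to stay in fast memory while they are reused. The sketch below shows the loop structure in plain Python. The function name `matmul_tiled` and the tile size are illustrative assumptions, not MTIA parameters, and pure Python will not show the speed benefit; the point is only the access pattern.

```python
def matmul_tiled(a, b, tile=2):
    """Multiply a (n x k) by b (k x m) one tile at a time.

    Hypothetical helper; `tile` stands in for a block size chosen to fit
    the fastest level of memory on real hardware.
    """
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for p0 in range(0, k, tile):
                # Inside this block, only a tile of a, b, and c is touched,
                # so the same values are reused before moving on.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


if __name__ == "__main__":
    print(matmul_tiled([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]]))
```

The result is identical to an untiled multiplication; only the order in which elements are visited changes.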

Optimizing Algorithms for MTIA Chip Architecture

Optimizing algorithms for MTIA AI chips requires understanding the architecture’s strengths and weaknesses. This includes restructuring the algorithms to leverage specialized hardware units and memory hierarchies. Efficient data movement and minimizing memory access latency are key factors in optimizing performance.

- Algorithm Restructuring: Algorithms can be restructured to match the architecture of the MTIA chip. This includes modifying the order of operations to optimize data flow and minimize memory access.

- Data Layout Optimization: The arrangement of data in memory significantly affects access times. Optimizing data layout to match the memory hierarchy improves efficiency.

- Quantization Techniques: Reducing the precision of numerical values can reduce memory usage and improve computational speed. Applied carefully, quantization can improve performance with little loss of accuracy.
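As a rough illustration of the quantization idea in the list above, the following sketch maps floating-point values to 8-bit integers using a single symmetric scale and then maps them back. This is one simple scheme among many and is not claimed to be what MTIA or its software stack uses; `quantize_int8` and `dequantize` are hypothetical names.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127].

    Returns the integer codes and the scale needed to recover approximate floats.
    """
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero input, where any scale works.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    print(codes, [round(r, 4) for r in restored])
```

Each restored value differs from the original by at most about half the scale, which is the accuracy cost traded for a 4x reduction in storage relative to 32-bit floats.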

Architectural Components of MTIA AI Chips

| Component | Description |
|---|---|
| Custom Matrix Multiplication Units | Optimized for high-throughput matrix multiplication, a core operation in deep learning. |
| Tensor Cores | Enable efficient tensor operations, essential for advanced neural network models. |
| High-Bandwidth Memory | Provides fast access to data, reducing latency in algorithm training. |
| Specialized Logic Units | Handle complex logic operations needed for specific neural network layers. |
| Multi-Level Cache Hierarchy | Reduces latency in data access by storing frequently used data in high-speed cache memory. |

Training Data and Datasets for Algorithms

Training data fuels any AI algorithm, including those running on Meta’s MTIA AI chips. It is the foundation that shapes the algorithm’s understanding and predictive capabilities, and its quality, quantity, and preparation directly affect the performance and reliability of the resulting models.

The training data for algorithms on MTIA chips is often complex and large-scale, demanding specialized processing and storage solutions. It spans a wide range of formats and types, reflecting the diverse tasks the algorithms are designed to perform. Preprocessing ensures that the data is consistent, accurate, and ready for efficient processing by the MTIA chips.


Types of Training Data

The types of training data used for algorithms trained on MTIA chips are diverse, reflecting the multifaceted nature of the tasks these algorithms are designed to perform. These include structured data (e.g., tabular data), unstructured data (e.g., images, text, audio), and semi-structured data (e.g., data with a mix of structured and unstructured elements). The specific data type varies significantly based on the application, whether it’s image recognition, natural language processing, or other tasks.

Volume and Complexity of Datasets

The datasets used for training algorithms on MTIA chips can reach petabytes in size, which necessitates advanced storage and management techniques. Their complexity ranges from simple labeled datasets to multi-dimensional datasets with intricate relationships between data points, and this demands sophisticated data processing tools and methodologies. For example, training a model to recognize different types of medical images might involve millions of images with varying resolutions and complexities.

Data Preprocessing and Preparation Techniques

Robust data preprocessing is crucial for the success of algorithm training on MTIA chips. Techniques such as data cleaning, normalization, feature scaling, and dimensionality reduction are commonly employed. Data cleaning involves identifying and handling missing values, outliers, and inconsistencies within the data. Normalization ensures that data points from different features are on a comparable scale, while feature scaling adjusts the range of feature values to improve the performance of certain algorithms. Dimensionality reduction techniques, like Principal Component Analysis (PCA), reduce the number of variables while retaining important information, leading to more efficient training.
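The scaling steps described above can be sketched with the Python standard library alone. The two helpers below, `min_max_scale` and `standardize`, are hypothetical names for two common choices: rescaling a feature to the range 0 to 1, and rescaling it to zero mean and unit variance. Which one a given pipeline uses is an assumption that depends on the model.

```python
import statistics


def min_max_scale(values):
    """Rescale a feature so its smallest value is 0.0 and its largest is 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Rescale a feature to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


if __name__ == "__main__":
    ages = [10, 20, 30]
    print(min_max_scale(ages))
    print(standardize(ages))
```

In practice the minimum, maximum, mean, and standard deviation are computed on the training split only and then reused for validation and test data, so that no information leaks from the held-out sets.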

Role of Data Augmentation in Algorithm Training

Data augmentation plays a vital role in enhancing the robustness and generalization ability of algorithms trained on MTIA chips. It involves creating synthetic variations of the existing training data, for instance by rotating, cropping, or flipping images, or by generating variations of text data. This lets the model learn from a broader range of inputs and avoid overfitting to the specific training examples. For example, in image recognition, augmenting images by adding noise or changing lighting conditions helps the model recognize objects under different conditions.
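A minimal sketch of two of the augmentations mentioned above, flipping and adding noise, is shown here for a grayscale image stored as a nested list of pixel values between 0 and 1. The representation, the function names, and the noise level `sigma` are all assumptions made for illustration; real pipelines normally use an image library.

```python
import random


def horizontal_flip(image):
    """Mirror each row of the image left to right."""
    return [list(reversed(row)) for row in image]


def add_noise(image, sigma=0.05, seed=None):
    """Add Gaussian noise to every pixel and clamp the result to [0, 1]."""
    rng = random.Random(seed)
    return [
        [min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
        for row in image
    ]


def augment(image, seed=0):
    """Return the original image plus a flipped and a noisy variant."""
    return [image, horizontal_flip(image), add_noise(image, seed=seed)]


if __name__ == "__main__":
    img = [[0.1, 0.2], [0.3, 0.4]]
    for variant in augment(img):
        print(variant)
```

Each variant keeps the original label, so one labeled image yields several training examples.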

Dataset Formats for Training

| Dataset Format | Description |
|---|---|
| CSV (Comma Separated Values) | A simple text-based format for tabular data, often used for structured datasets. |
| JSON (JavaScript Object Notation) | A lightweight data-interchange format, commonly used for representing structured data with key-value pairs and arrays. |
| Parquet | A columnar storage format optimized for analytical queries and data warehousing, efficient for large-scale datasets. |
| TFRecord | A binary format designed for machine learning tasks, offering efficient storage and retrieval of data used for training models. |

These formats, among others, are used to organize and store the training data, facilitating the efficient transfer and processing of information on MTIA chips.
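To show how the choice of format affects what a training pipeline receives, the sketch below round-trips the same records through CSV and JSON using only the Python standard library. The records and the helper names `to_csv` and `from_csv` are made up for illustration. Parquet and TFRecord are left out because they need third-party libraries.

```python
import csv
import io
import json

# Hypothetical labeled examples, invented for this sketch.
RECORDS = [
    {"id": 1, "text": "great product", "label": 1},
    {"id": 2, "text": "arrived broken", "label": 0},
]


def to_csv(records):
    """Serialize a list of flat dictionaries to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def from_csv(text):
    """Parse CSV text back into dictionaries; every value comes back as a string."""
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


if __name__ == "__main__":
    print(from_csv(to_csv(RECORDS)))
    print(json.loads(json.dumps(RECORDS)))
```

The JSON round trip preserves integer types, while the CSV round trip returns strings, so a loader reading CSV has to convert labels and ids back to numbers before training.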


Metrics and Evaluation of Algorithm Training

Evaluating the performance of algorithms trained on Meta’s MTIA AI chips is crucial for understanding their effectiveness and efficiency. This involves a multifaceted approach, with metrics that capture different aspects of the training process and of the resulting algorithm’s capabilities. Precise measurement and analysis of these metrics are vital for optimizing chip design, algorithm development, and ultimately the performance of AI applications.

A robust evaluation framework for MTIA chip-trained algorithms considers not only accuracy but also training time, resource utilization, and energy consumption. These metrics provide a comprehensive understanding of the trade-offs inherent in different training strategies and algorithm designs. A balanced approach to evaluation ensures that the best possible algorithms are selected for deployment.

Performance Metrics for Algorithm Training

Several key metrics are used to assess the effectiveness of algorithms trained on MTIA AI chips. These metrics are crucial for determining the optimal configuration and training strategy for specific AI tasks. The choice of metrics depends heavily on the intended use case.

- Accuracy: This fundamental metric quantifies the correctness of predictions made by the trained algorithm. For classification tasks, accuracy measures the proportion of correctly classified instances. Regression tasks use an error measure instead, such as the Root Mean Squared Error (RMSE), where lower is better. High accuracy (or low error) indicates an algorithm that consistently produces correct outputs.

- Training Time: This metric assesses the duration required to train the algorithm on a given dataset. Faster training times are highly desirable, as they directly impact the development cycle and the overall efficiency of the AI system. Training time is influenced by factors such as the size of the dataset, the complexity of the algorithm, and the hardware capabilities of the MTIA chip.

- Resource Utilization: This metric measures the amount of computational resources consumed during algorithm training. MTIA chips’ performance is evaluated in terms of memory bandwidth, processing units utilization, and other resources. Efficient utilization of resources leads to reduced energy consumption and faster training times.

- Energy Consumption: Energy efficiency is a crucial factor, especially in deployment scenarios where the AI model is running continuously. MTIA chip design often emphasizes low power consumption, which is measured in terms of joules per operation (J/op). Lower energy consumption directly translates to lower operational costs and improved sustainability.

- Inference Time: This metric evaluates the time taken for the trained algorithm to generate predictions on new, unseen data. Fast inference times are crucial for real-time applications, where rapid response is necessary. This metric reflects the latency introduced by the trained model.
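Several of the metrics listed above reduce to short formulas. The sketch below gives plain-Python versions of accuracy, RMSE, wall-clock timing, and energy per operation. All function names are hypothetical, and the power and operation counts in the usage example are invented numbers rather than MTIA measurements.

```python
import math
import time


def accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error for regression outputs; lower is better."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed seconds of wall-clock time)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def joules_per_op(avg_power_watts, seconds, num_ops):
    """Energy per operation: average power times duration, divided by operation count."""
    return avg_power_watts * seconds / num_ops


if __name__ == "__main__":
    print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))
    print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
    # Invented figures: 25 W average power for 2 s over 1e12 operations.
    print(joules_per_op(25.0, 2.0, 1e12))
```

The `timed` helper works for both training time and inference time; what changes is the function being measured.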

Analysis of Evaluation Results

A structured approach to analyzing the results is essential. This involves comparing different algorithm configurations, datasets, and training parameters. Visualizations, such as graphs and charts, help to effectively communicate the trends and patterns observed.

- Comparison Across Models: Compare the performance metrics of different algorithms trained on the MTIA chip to identify the most effective model for a specific task.

- Correlation Analysis: Identify correlations between different metrics, such as training time and accuracy. This can reveal relationships between different parameters of the training process.

- Statistical Significance: Use statistical methods to determine whether observed differences in performance metrics are statistically significant or simply due to random variation.
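One simple way to check statistical significance when comparing two training configurations is a permutation test on their per-run scores. The sketch below is a standard-library version; the function name `permutation_test`, the number of resamples, and the accuracy values in the usage example are assumptions chosen for illustration, not measured results.

```python
import random
import statistics


def permutation_test(a, b, n_resamples=2000, seed=0):
    """Two-sided permutation test for a difference in means.

    Returns an approximate p-value: the share of random relabelings whose
    mean difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(statistics.fmean(a) - statistics.fmean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        left, right = pooled[: len(a)], pooled[len(a):]
        if abs(statistics.fmean(left) - statistics.fmean(right)) >= observed:
            extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly zero.
    return (extreme + 1) / (n_resamples + 1)


if __name__ == "__main__":
    # Invented accuracy scores from five runs of two hypothetical configurations.
    config_a = [0.90, 0.91, 0.92, 0.93, 0.94]
    config_b = [0.80, 0.81, 0.82, 0.83, 0.84]
    print(permutation_test(config_a, config_b))
```

A small p-value suggests the gap between configurations is unlikely to be random run-to-run variation; with only a handful of runs, the test has limited power, so more runs give a more trustworthy answer.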

Key Performance Indicators (KPIs) for Algorithm Training

This table summarizes the key performance indicators for algorithm training on MTIA chips. These KPIs are used to benchmark and track the progress of algorithm development and to ensure optimal performance.

| KPI | Description | Unit |
|---|---|---|
| Accuracy | Proportion of correct predictions | Percentage |
| Training Time | Duration to train the algorithm | Seconds (per epoch or in total) |
| Resource Utilization | Percentage of resources used during training | Percentage |
| Energy Consumption | Energy consumed during training | Joules |
| Inference Time | Time to generate predictions | Milliseconds |

Future Trends and Innovations

The landscape of AI chip design and algorithm training is constantly evolving, with new trends emerging rapidly. Meta’s MTIA AI chips, positioned at the forefront of this innovation, must adapt to these shifts to maintain their competitive edge and continue driving advancements in large language model (LLM) training. This section delves into emerging trends, their potential impact on MTIA chips, and future research directions.

Emerging Trends in AI Chip Design

The quest for greater efficiency and performance in AI chips is driving several key trends. One is the increasing adoption of specialized hardware accelerators tailored for specific AI tasks, such as tensor processing units (TPUs) and neural processing units (NPUs). Another is a push towards heterogeneous architectures, which combine different processing elements so that each task runs on the unit best suited to it, allowing more flexible and efficient resource allocation. Moreover, advancements in memory technologies, like high-bandwidth memory (HBM), are crucial for supporting the massive datasets needed for training sophisticated models.

Impact on Meta’s MTIA Chips

The adoption of these trends directly impacts the design and functionality of Meta’s MTIA chips. For example, integrating specialized accelerators within the MTIA architecture could significantly improve performance in specific areas like transformer-based language modeling, a key aspect of large language model training. Furthermore, a heterogeneous approach to chip design could lead to better utilization of resources, potentially enabling MTIA to handle more complex and data-intensive tasks. The integration of advanced memory technologies, like HBM, would be crucial for supporting the increasing data demands of LLM training.

Potential Research Directions in Algorithm Training

Research into algorithm training on specialized AI chips is focused on optimizing training processes for improved efficiency and accuracy. This includes developing algorithms that are specifically designed to leverage the unique capabilities of these specialized hardware components. Another significant area is the investigation of more efficient methods, such as model quantization and pruning, that reduce the computational resources required without significantly compromising accuracy. Finally, research into more robust and generalizable algorithms will play a crucial role in handling diverse and complex data.
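Pruning, mentioned above, can be illustrated with its simplest variant: magnitude pruning, which zeroes the weights with the smallest absolute values. The sketch below is a plain-Python illustration under that assumption; `magnitude_prune` is a hypothetical name, and production systems usually prune structured groups of weights and fine-tune afterwards.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude.

    `sparsity` must be between 0.0 (keep everything) and 1.0 (zero everything).
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    print(magnitude_prune([0.5, -0.1, 0.05, -0.8], sparsity=0.5))
```

The zeros only save time or memory if the hardware and software can skip them, which is why pruning research is closely tied to the capabilities of the target chip.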

Future Directions and Challenges for MTIA AI Chips in Training Large Language Models

The development of MTIA AI chips for training large language models (LLMs) faces several challenges. One key challenge lies in scaling the training process to accommodate the ever-increasing size and complexity of LLM architectures. Another involves optimizing training algorithms for maximum efficiency on specialized hardware. Efficient memory management is also crucial to handle the massive datasets required for LLM training. Finally, LLM designs keep evolving, so the chip architecture needs ongoing refinement to keep up with new models.

Summary of Potential Future Directions

| AI Chip Feature | Potential Future Direction |
|---|---|
| Architecture | Heterogeneous integration of specialized accelerators, optimized memory hierarchies |
| Algorithm Training | Development of algorithms leveraging specialized hardware, model quantization, efficient training methods |
| Data Management | Scalable memory management, advanced data compression techniques |
| Performance | Increased throughput, reduced latency |
| Power Efficiency | Optimized power management for high-performance tasks |

Real-World Applications of MTIA Chips

Meta’s MTIA AI chips, coupled with sophisticated training algorithms, illustrate how custom AI hardware could reshape numerous sectors. Designed for high-performance inference and training, they are deployed in Meta’s own infrastructure, and the same class of accelerated workloads is relevant in areas ranging from healthcare and finance to entertainment.

Examples of Real-World Applications

Algorithms of the kind trained on MTIA chips have applications across diverse sectors. One prominent example is image recognition: such algorithms can analyze vast quantities of medical images, potentially aiding in early disease detection and personalized treatment plans. Similarly, in the financial sector, they can process enormous datasets to identify fraudulent activity and assess investment risks with greater accuracy and speed.

Impact on Various Sectors

The impact of MTIA chips on various sectors is substantial. In healthcare, the ability to process medical images rapidly and accurately leads to faster diagnoses and potentially better patient outcomes. Financial institutions benefit from enhanced fraud detection capabilities, leading to reduced losses and improved security. Entertainment companies can utilize these chips for more realistic and immersive experiences, such as creating photorealistic virtual worlds or generating highly personalized content recommendations.

Societal Implications of Advancements

The societal implications of these advancements are significant. Improved healthcare diagnostics and treatments have the potential to save lives and improve overall well-being. Enhanced financial security systems can reduce fraud and protect consumers. However, it’s crucial to address potential biases in the algorithms and ensure equitable access to these technologies. Careful consideration must be given to the ethical implications and responsible implementation of these powerful tools.

Algorithm Training in Different Applications

The training algorithms on MTIA chips are tailored to the specific needs of each application. For example, algorithms designed for medical image analysis might be trained on large datasets of X-rays, CT scans, and MRIs, focusing on the identification of specific pathologies. In contrast, algorithms for fraud detection in finance might be trained on historical transaction data, focusing on patterns and anomalies indicative of fraudulent activity. In each case, the design of the algorithm is critical to its effectiveness.

Table Illustrating Impact on Sectors

| Sector | Specific Application | Impact |
|---|---|---|
| Healthcare | Early disease detection, personalized treatment plans, faster diagnostics | Improved patient outcomes, potentially saving lives, reduced healthcare costs |
| Finance | Fraud detection, risk assessment, algorithmic trading | Reduced financial losses, improved security, potentially increased investment returns |
| Entertainment | Realistic virtual worlds, personalized content recommendations, interactive experiences | Enhanced user engagement, creation of immersive experiences, new revenue streams |
| Manufacturing | Predictive maintenance, quality control, optimization of production processes | Reduced downtime, increased efficiency, improved product quality |
| Transportation | Autonomous vehicle navigation, traffic optimization, logistics management | Improved safety, reduced congestion, increased efficiency |

Final Conclusion

In conclusion, Meta’s MTIA AI chips are a significant advancement in algorithm training, offering exceptional performance and potential for groundbreaking applications. The combination of specialized hardware, optimized algorithms, and robust training procedures positions these chips as leaders in the field. As we explore future trends and innovations, we see enormous potential for Meta’s MTIA chips to continue driving progress in AI.