Adversarial images, the ObjectNet dataset, and robustness research from MIT are reshaping how we approach computer vision. This exploration delves into the intricate world of adversarial images, examining how these subtly altered inputs can fool even the most sophisticated machine vision AI models. We’ll analyze the crucial role of datasets like ObjectNet in evaluating robustness, and highlight the impactful contributions of MIT algorithms in building more resilient systems.

Expect a deep dive into the methods used to generate and analyze adversarial images, along with a critical look at their potential impact on various applications.

The study of adversarial images in machine vision is crucial. Understanding how these images exploit vulnerabilities in AI models is vital to developing robust and reliable systems. The ObjectNet dataset serves as a benchmark for how well these models cope with unusual but realistic inputs, and MIT algorithms play a significant role in tackling these challenges. We’ll examine the key characteristics of adversarial image datasets, the types of attacks used to generate adversarial examples, and the effectiveness of different machine vision AI models in resisting these attacks.

Furthermore, we’ll explore how these findings translate into practical applications and the future of this rapidly evolving field.

Introduction to Machine Vision AI Adversarial Images

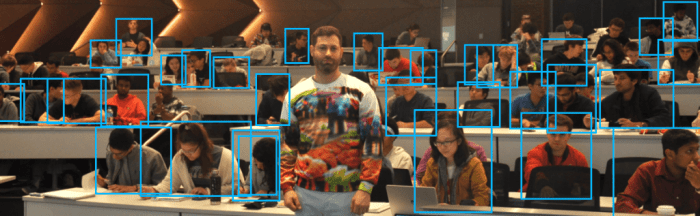

Machine vision AI is a rapidly evolving field that uses artificial intelligence to enable computers to “see” and interpret images. This technology is crucial in applications ranging from self-driving cars to medical diagnoses. It relies on complex algorithms trained on vast amounts of data to recognize patterns and objects within images. A key component of machine vision AI is the ability to classify objects accurately.

Adversarial images, in the context of machine vision AI, are normal images altered with meticulously crafted perturbations.


These slight alterations, often imperceptible to the human eye, can cause machine vision AI models to misclassify the image entirely. This phenomenon highlights a critical vulnerability in these models and demonstrates the need for robust techniques to counteract these attacks.

Datasets in Machine Vision AI Model Training

Datasets are fundamental to the training and development of machine vision AI models. They provide the necessary data for the algorithms to learn patterns and make accurate classifications. Without sufficient and diverse data, the models’ performance can be limited.

The ObjectNet Dataset

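The ObjectNet dataset is a significant benchmark for evaluating the robustness of machine vision AI models. Developed by researchers at MIT and IBM, it is a test-only collection of roughly 50,000 photographs of everyday objects, captured with deliberate controls on object rotation, background, and viewpoint. It is designed to assess how well models perform under the kind of variation found in real-world scenarios. Unlike perturbation-based adversarial examples, ObjectNet images are ordinary photographs rather than digitally manipulated ones. Its significance stems from its ability to expose how much models rely on the regularities of their training data: its authors reported that object recognition models lose roughly 40 to 45 percentage points of accuracy on ObjectNet compared with ImageNet.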

By using ObjectNet, researchers can identify weaknesses in existing models and develop more resilient ones.

MIT Algorithms in Machine Vision AI Research

MIT, known for its contributions to various fields of science and engineering, has played a crucial role in advancing machine vision AI research. The algorithms developed at MIT have pushed the boundaries of this field, leading to breakthroughs in image recognition and object detection. The focus on robustness and efficiency within their research is particularly valuable in creating more reliable and practical machine vision AI systems.

Comparison of Machine Vision AI Models

| Model | Vulnerability to Adversarial Images | Description |
|---|---|---|
| Convolutional Neural Networks (CNNs) | High | CNNs, a prevalent type of model, are often susceptible to adversarial attacks due to their reliance on local features. Small perturbations can lead to misclassifications. |
| Recurrent Neural Networks (RNNs) | Medium | RNNs, used for sequential data, can be affected by adversarial attacks, although potentially less severely than CNNs in image recognition tasks. |
| Transformer-based models | Moderate to High | Transformer models, gaining popularity, have shown vulnerabilities to adversarial attacks, though their susceptibility can vary depending on the specific model architecture. |

Note: The vulnerability levels in the table are relative and can depend on the specific adversarial attack used. For example, a model might be more vulnerable to certain types of noise or color manipulation.

Analyzing Adversarial Image Datasets

Challenge datasets like ObjectNet are crucial for evaluating the robustness of machine vision AI models. Their images are chosen or captured specifically to be hard for models, revealing vulnerabilities and highlighting areas for improvement. Understanding the characteristics of these datasets, the attack methods used to generate adversarial examples, and the models’ responses is vital for building more resilient AI systems.

ObjectNet and similar datasets serve as a testing ground for identifying weaknesses in AI models. Together with perturbation-based adversarial examples, they allow researchers to understand how these models react to changes in images, from unusual viewpoints to subtle, often imperceptible, pixel-level alterations. This knowledge is critical for deploying AI systems in real-world applications where the integrity and accuracy of the system’s outputs are paramount.

Key Characteristics of Adversarial Image Datasets

Adversarial image collections draw on two kinds of hard input. Perturbation-based adversarial examples are visually indistinguishable from normal images yet cause misclassifications in machine vision models; their alterations are subtle, often undetectable to the human eye, but significantly change the model’s output. ObjectNet takes a different route: its photographs show objects in odd orientations, backgrounds, and viewpoints, so they look unusual but remain easy for people to recognize. Both cases highlight the need for robust validation techniques. Another key aspect is the controlled nature of the modifications, which allows researchers to study the specific impact of each source of difficulty.

Types of Adversarial Attacks

Numerous methods exist for generating adversarial images. One common approach is to add small, imperceptible perturbations to the original image. The perturbation is usually not random: gradient-based methods compute the gradient of the model’s loss with respect to the input pixels and move the image in the direction that increases the loss, maximizing the chance of misclassification. These methods often exploit the specific architecture of the model and the way it processes visual information.
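To make the gradient idea concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM). It assumes a toy logistic-regression "model" with random weights standing in for a real vision network, and an 8x8 "image" flattened to 64 values; the names `fgsm`, `w`, `b`, and `eps` are illustrative and not taken from any particular library.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, eps):
    """One-step FGSM against a logistic-regression model p(y=1|x) = sigmoid(w.x + b).

    x: input with values in [0, 1]; y: true label (0 or 1); eps: L-infinity budget.
    """
    p = sigmoid(w @ x + b)
    grad_x = (p - y) * w                   # gradient of the cross-entropy loss w.r.t. the input
    x_adv = x + eps * np.sign(grad_x)      # step of size eps in the loss-increasing direction
    return np.clip(x_adv, 0.0, 1.0)        # keep values in the valid pixel range

rng = np.random.default_rng(0)
w = rng.normal(size=64)                    # hypothetical weights, not a trained model
b = 0.0
x = rng.uniform(0.2, 0.8, size=64)         # hypothetical flattened 8x8 image
y = 1 if sigmoid(w @ x + b) > 0.5 else 0   # treat the model's own prediction as the label

x_adv = fgsm(x, y, w, b, eps=0.05)
print("clean score:      ", sigmoid(w @ x + b))
print("adversarial score:", sigmoid(w @ x_adv + b))
print("max pixel change: ", np.abs(x_adv - x).max())
```

Every pixel moves by at most `eps`, yet the model’s score is pushed away from the original label. With a deep network the gradient would come from automatic differentiation rather than the closed-form expression used here.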

Examples of Adversarial Images and Impact

Consider an image of a cat. An adversarial attack might add a subtle gradient pattern that is invisible to the human eye but causes a machine vision model to classify the image as a dog. This type of attack has significant implications for applications such as self-driving cars, medical imaging, and security systems, where accurate image recognition is crucial.

The impact is not just limited to misclassification; the presence of adversarial images can also cause the model to produce inaccurate or even dangerous outputs.


Performance Comparison of Machine Vision Models

Different machine vision AI models exhibit varying levels of robustness against adversarial attacks. Convolutional Neural Networks (CNNs), a popular type of model, are frequently targeted by adversarial attacks, demonstrating that these models are not inherently immune. Models with deeper architectures, or those trained on larger datasets, might show slightly improved robustness, but are not entirely immune to such attacks.

The results of these comparisons are often presented in the form of accuracy metrics.

Evaluation Methodologies for Model Robustness

Robustness evaluation involves testing models with adversarial images generated using various attack methods. The methodologies often include metrics like the L-infinity norm to quantify the magnitude of the perturbation added to the image. Researchers typically evaluate the model’s ability to classify correctly on both normal and adversarial images. The results can be visualized using graphs that show the model’s performance under different levels of attack intensity.

This process highlights the need for robust testing and validation procedures in the development of machine vision AI systems.
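As a rough illustration of such an evaluation, the sketch below measures accuracy at several L-infinity budgets. It assumes synthetic two-class data and a fixed linear classifier (for a linear model the worst-case L-infinity perturbation has a closed form); the features are generic numbers rather than pixels, and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 500, 20
mu = 0.5 * np.ones(d)

# Synthetic data: class 0 centred at -mu, class 1 centred at +mu.
X = np.vstack([rng.normal(-mu, 1.0, size=(n, d)),
               rng.normal(mu, 1.0, size=(n, d))])
y = np.concatenate([np.zeros(n), np.ones(n)])

w, b = 2 * mu, 0.0   # fixed linear classifier (assumed, not trained here)

def predict(X):
    return (X @ w + b > 0).astype(float)

def worst_case_linf(X, y, eps):
    # For a linear model, moving every feature by eps against the true class
    # is the strongest perturbation within the L-infinity ball.
    direction = np.where(y[:, None] == 1, -1.0, 1.0) * np.sign(w)
    return X + eps * direction

def linf_norm(delta):
    # Largest absolute change to any single feature, per example.
    return np.abs(delta).max(axis=1)

epsilons = [0.0, 0.1, 0.2, 0.4, 0.8]
robust_acc = []
for eps in epsilons:
    X_adv = worst_case_linf(X, y, eps)
    assert np.all(linf_norm(X_adv - X) <= eps + 1e-12)   # perturbation stays within budget
    robust_acc.append(float((predict(X_adv) == y).mean()))

for eps, acc in zip(epsilons, robust_acc):
    print(f"eps={eps:.1f}  accuracy={acc:.3f}")
```

Plotting `robust_acc` against `epsilons` gives the kind of accuracy-versus-attack-intensity curve described above.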

Sources of Variation in ObjectNet

| Controlled Factor | What Varies |
|---|---|
| Object rotation | Objects are photographed in orientations other than their usual upright pose. |
| Background | Objects appear in rooms and settings where they are not typically found. |
| Viewpoint | The camera angle is varied instead of being left to the photographer’s habit. |

ObjectNet does not contain FGSM, PGD, or other gradient-based adversarial examples, so a breakdown of the dataset by attack type does not apply. Its difficulty comes from the capture controls listed in the table. Perturbation-based adversarial examples are generated separately, by running an attack such as FGSM or PGD against a specific model.

MIT Algorithms and Robustness

MIT researchers have made significant contributions to developing robust machine vision AI models. Their algorithms often focus on understanding and mitigating the vulnerabilities inherent in deep learning models, particularly when exposed to adversarial images. This proactive approach is crucial for ensuring the reliability and safety of AI systems in real-world applications.

Key Contributions of MIT Algorithms

MIT’s work on adversarial robustness has highlighted several crucial aspects. These include identifying weaknesses in existing models, proposing novel defenses, and evaluating their effectiveness. Their research has been instrumental in advancing the field, driving the development of more resilient machine vision AI systems. Their contributions have extended beyond theoretical understanding to practical applications, with a particular emphasis on defending against adversarial attacks.

Addressing Vulnerabilities to Adversarial Images

MIT algorithms tackle adversarial image vulnerabilities by leveraging techniques like adversarial training, regularization methods, and incorporating robust architectures. Adversarial training exposes the model to carefully crafted adversarial examples, forcing it to learn more robust features. Regularization methods, such as adding noise or constraints to the model’s parameters, can help prevent overfitting to adversarial patterns. Robust architectures are designed with resilience to adversarial attacks in mind, incorporating features that make the model less susceptible to manipulation.

These approaches aim to build models that are less sensitive to small, imperceptible perturbations in the input data.
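A minimal sketch of adversarial training, assuming a logistic-regression model trained by gradient descent on synthetic data, with FGSM used to craft the training-time adversarial examples. The function names and hyperparameters (`eps`, `lr`, `epochs`) are illustrative choices, not a reference implementation of any published method.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-np.clip(z, -30, 30)))

def fgsm_batch(X, y, w, b, eps):
    # Gradient of the cross-entropy loss w.r.t. each input row, then a signed step.
    p = sigmoid(X @ w + b)
    grad_X = (p - y)[:, None] * w[None, :]
    return X + eps * np.sign(grad_X)

def train(X, y, eps=0.0, lr=0.1, epochs=200):
    """Gradient descent on logistic loss; if eps > 0, train on FGSM examples instead."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        # Adversarial training: regenerate adversarial inputs against the current model.
        X_batch = fgsm_batch(X, y, w, b, eps) if eps > 0 else X
        p = sigmoid(X_batch @ w + b)
        w -= lr * (X_batch.T @ (p - y)) / len(y)
        b -= lr * float(np.mean(p - y))
    return w, b

def accuracy(X, y, w, b):
    return float(((X @ w + b > 0) == (y == 1)).mean())

rng = np.random.default_rng(0)
n, d = 200, 10
X = np.vstack([rng.normal(-1.0, 1.0, size=(n, d)),
               rng.normal(1.0, 1.0, size=(n, d))])
y = np.concatenate([np.zeros(n), np.ones(n)])

eps = 0.3
w_std, b_std = train(X, y)            # standard training
w_adv, b_adv = train(X, y, eps=eps)   # adversarial training

for name, (w, b) in {"standard": (w_std, b_std), "adversarial": (w_adv, b_adv)}.items():
    X_attacked = fgsm_batch(X, y, w, b, eps)
    print(name, "clean:", accuracy(X, y, w, b), "attacked:", accuracy(X_attacked, y, w, b))
```

The key design point is that adversarial examples are regenerated against the current weights at every step, which is also why the method is expensive for large networks.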

Comparison of MIT Algorithms

Research groups at MIT and elsewhere have proposed a range of methods related to adversarial images. Some, like Projected Gradient Descent (PGD), are attacks focused on generating adversarial examples, while others, like adversarial training, use these examples to enhance the model’s robustness; PGD-based adversarial training was popularized by Madry and colleagues at MIT. Other approaches focus on improving the model’s ability to detect adversarial samples. Each algorithm has its strengths and weaknesses, making the choice of algorithm dependent on the specific application and the nature of the adversarial attacks.
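For reference, PGD can be sketched as repeated small signed-gradient steps, each followed by a projection back into the allowed perturbation range. The example below again assumes a toy logistic-regression model with random weights; `step`, `n_steps`, and the random start are common choices for this attack, and the specific values are arbitrary.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(x, y, w, b):
    # Numerically stable cross-entropy for a logistic model.
    margin = (2 * y - 1) * (w @ x + b)
    return float(np.logaddexp(0.0, -margin))

def pgd(x, y, w, b, eps, step, n_steps, rng):
    """Iterative L-infinity attack: signed-gradient steps plus projection."""
    x_adv = np.clip(x + rng.uniform(-eps, eps, size=x.shape), 0.0, 1.0)  # random start
    for _ in range(n_steps):
        p = sigmoid(w @ x_adv + b)
        grad = (p - y) * w                          # loss gradient w.r.t. the input
        x_adv = x_adv + step * np.sign(grad)        # ascend the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)    # project onto the eps-ball around x
        x_adv = np.clip(x_adv, 0.0, 1.0)            # stay in the valid pixel range
    return x_adv

rng = np.random.default_rng(0)
w = rng.normal(size=64)               # hypothetical weights, not a trained model
b = 0.0
x = rng.uniform(0.2, 0.8, size=64)    # hypothetical flattened image
y = 1

x_adv = pgd(x, y, w, b, eps=0.05, step=0.01, n_steps=20, rng=rng)
print("loss before:", loss(x, y, w, b))
print("loss after: ", loss(x_adv, y, w, b))
print("max change: ", np.abs(x_adv - x).max())
```

Compared with single-step FGSM, the iterations let the attack follow the loss surface of a nonlinear model more closely, which is why PGD is generally considered the stronger of the two.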


Evaluation Procedure for MIT Algorithms

Evaluating the effectiveness of an MIT algorithm requires a standardized procedure. First, prepare the evaluation data: a clean test set, plus a harder set made of either ObjectNet images (for robustness to real-world variation) or adversarial examples generated from the clean set with a fixed attack and perturbation budget. Next, apply the algorithm to a machine vision AI model. Then measure the model’s accuracy on the original, unperturbed images and on the harder set. A key metric is the accuracy drop observed between the two.

This procedure allows for a quantifiable comparison of the performance of different MIT algorithms. A crucial aspect is to use a consistent and rigorous evaluation metric to measure the algorithm’s impact on the model’s robustness.
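The procedure can be sketched as a small helper that reports clean accuracy, accuracy on the harder set, and the drop between them. The `predict` function and the four-point dataset below are toy stand-ins, assumed purely for illustration.

```python
import numpy as np

def robustness_report(predict, X_clean, X_hard, y):
    """Compare accuracy on clean inputs with accuracy on a harder version of them."""
    clean_acc = float(np.mean(predict(X_clean) == y))
    hard_acc = float(np.mean(predict(X_hard) == y))
    return {
        "clean_accuracy": clean_acc,
        "hard_accuracy": hard_acc,
        "accuracy_drop": clean_acc - hard_acc,
    }

def predict(X):
    # Toy classifier: threshold on the first feature.
    return (X[:, 0] > 0.5).astype(int)

X_clean = np.array([[0.1], [0.4], [0.6], [0.9]])
y = np.array([0, 0, 1, 1])

# Push every example 0.15 toward the decision boundary (a stand-in for an attack).
shift = np.where(y == 1, -0.15, 0.15)[:, None]
X_hard = X_clean + shift

report = robustness_report(predict, X_clean, X_hard, y)
print(report)
```

Running the same helper with the same harder set across several defended models gives the like-for-like comparison the procedure calls for.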

Improving Robustness of Machine Vision AI Models

MIT algorithms can enhance the robustness of machine vision AI models. For example, a model trained with adversarial training is typically less susceptible to the attacks it was trained against than one trained without it, although this often comes at some cost in accuracy on clean images. This translates into improved reliability when processing images in real-world scenarios. Consider a self-driving car relying on a machine vision system. By applying these algorithms, the system’s ability to correctly identify pedestrians and obstacles, even when presented with intentionally misleading images, can be improved, enhancing the safety and reliability of the vehicle.

Strengths and Weaknesses of MIT Algorithms

| Algorithm | Strengths | Weaknesses |
|---|---|---|
| Adversarial Training | Enhanced robustness against the attack types used during training; among the most reliable empirical defenses. | Computationally expensive, since adversarial examples are regenerated throughout training; often lowers accuracy on clean images. |
| Defensive Distillation | Trains a student model on the softened output probabilities of a teacher model, which smooths the student’s decision surface. | Performance depends heavily on the quality of the teacher model; later, stronger attacks have been shown to bypass it. |
| Fast Gradient Sign Method (FGSM) | An attack, not a defense: simple to implement, cheap, and effective for generating adversarial examples. | Single-step, so weaker than iterative attacks such as PGD; robustness to FGSM alone can overstate a model’s true robustness. |

Impact on Machine Vision AI Applications

Adversarial images, crafted to deceive machine vision AI systems, pose a significant threat to the reliability and trustworthiness of various applications. These meticulously designed perturbations, often imperceptible to the human eye, can lead to misclassifications and flawed decisions in critical tasks. Understanding the vulnerabilities and potential impacts of these attacks is crucial for developing robust and secure machine vision systems.

The impact of adversarial images extends far beyond theoretical concerns, affecting real-world applications where machine vision plays a critical role.

These attacks could have serious consequences in scenarios where accurate visual interpretation is essential for safety and decision-making.

Vulnerabilities in Different Applications

Machine vision AI is employed in a wide range of applications, each with varying degrees of susceptibility to adversarial attacks. The accuracy and robustness of the underlying models are key factors in determining the potential impact of adversarial examples.

Real-World Scenarios

Autonomous vehicles are particularly vulnerable. Adversarial images could be used to manipulate the vehicle’s perception of its surroundings, potentially leading to catastrophic errors in navigation, object recognition, and obstacle avoidance. Similarly, security systems relying on facial recognition or object detection could be compromised, potentially enabling unauthorized access or misidentification of individuals. Medical imaging systems using AI for disease diagnosis or treatment planning could also be susceptible, potentially leading to incorrect diagnoses and inappropriate treatment decisions.

Malicious Usage Examples

Adversarial images could be used to manipulate security systems, potentially bypassing facial recognition or object detection mechanisms. In autonomous driving, malicious actors could create images to trick the system, causing it to misinterpret the road or surrounding environment, potentially leading to accidents. In medical imaging, adversarial examples could potentially lead to incorrect diagnoses, causing harm to patients.

Mitigation Methods

Robust machine vision AI systems require a multi-faceted approach to mitigate adversarial attacks. Defense mechanisms should be integrated into the model training and deployment process.

- Adversarial Training: Training models on adversarial examples can help them become more resilient to future attacks. By exposing the models to a wider range of perturbations, they can learn to generalize better and resist subtle manipulation.

- Defensive Distillation: This method involves training a separate “teacher” model and using it to guide the training of the “student” model. The teacher model can provide more robust predictions, making the student model less susceptible to adversarial attacks.

- Adversarial Robustness Evaluation: Evaluating models against various adversarial attacks is essential for identifying weaknesses and developing strategies to strengthen their resilience.

- Input Preprocessing: Techniques like noise reduction or data augmentation can help to improve the model’s resistance to adversarial perturbations.
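As one concrete form of input preprocessing, the sketch below reduces an image’s bit depth so that perturbations smaller than half a quantization step are rounded away. This is a swapped-in illustration of the general idea and not a method named in this article; `reduce_bit_depth` is a hypothetical helper, and the example places the clean pixels exactly on the quantization grid, which real images will not do.

```python
import numpy as np

def reduce_bit_depth(image, bits):
    """Quantize pixel values in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    image = np.clip(image, 0.0, 1.0)
    return np.round(image * levels) / levels

levels = 2 ** 3 - 1
# Toy 2x3 "image" whose pixels sit exactly on the 3-bit grid.
clean = np.array([[0, 2, 5], [7, 3, 1]]) / levels

# Small perturbation of +/- 0.01, well below half a quantization step (about 0.07).
perturbed = clean + 0.01 * np.array([[1, -1, 1], [-1, 1, -1]])

squeezed_clean = reduce_bit_depth(clean, 3)
squeezed_perturbed = reduce_bit_depth(perturbed, 3)

print("perturbation removed:", np.allclose(squeezed_clean, squeezed_perturbed))
```

On real images some pixels lie near a rounding boundary, so a small perturbation can still change the quantized value. Preprocessing of this kind is best treated as one layer among several, since attacks that account for the preprocessing step have been shown to get around it.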

Importance of Robust Models

Developing robust machine vision AI models is critical for ensuring the safety and reliability of applications. Robust models can effectively resist adversarial attacks and provide accurate results even in the presence of manipulated inputs. This is essential for maintaining trust in these systems, particularly in safety-critical applications.

Table: Susceptibility to Adversarial Attacks

| Application | Susceptibility | Potential Impact |
|---|---|---|
| Autonomous Vehicles | High | Accidents, loss of control |
| Security Systems (Facial Recognition) | Medium-High | Unauthorized access, misidentification |
| Medical Imaging (Diagnosis) | Medium | Incorrect diagnoses, inappropriate treatment |
| Retail (Object Detection) | Low-Medium | Inventory discrepancies, inaccurate sales data |
| Industrial Automation | Medium-High | Equipment malfunction, safety hazards |

Future Directions and Research

Adversarial machine vision is a rapidly evolving field, pushing the boundaries of both research and practical application. As machine vision systems become more integrated into critical infrastructure and daily life, the need for robust defenses against adversarial attacks becomes paramount. This necessitates a proactive and forward-thinking approach to research, focusing on the development of more resilient algorithms, improved datasets, and new evaluation metrics.

The constant threat of adversarial attacks necessitates a dynamic and forward-looking research agenda.

Understanding how adversarial images deceive machine vision systems is critical for developing effective countermeasures. The future of machine vision AI hinges on its ability to withstand these increasingly sophisticated attacks.

Emerging Research Areas

Machine learning research is actively exploring several avenues to improve the robustness of machine vision systems. These include:

- Developing novel architectures: New neural network architectures are being designed with built-in defenses against adversarial attacks. These architectures are often combined with adversarial training, feature regularization, and prior knowledge about the data to improve robustness. For example, some architectures incorporate layers that explicitly detect and mitigate adversarial perturbations.

- Improving adversarial training techniques: Adversarial training methods are constantly being refined to enhance the robustness of machine vision models. Research is focused on strategies that better generalize to unseen adversarial examples, such as using more diverse and challenging datasets for training. Another approach is to combine different adversarial training techniques to enhance robustness.

- Exploring transfer learning for robustness: Transfer learning, where models trained on one dataset are adapted to another, shows promise in improving robustness. This approach can leverage pre-trained models, enhancing the efficiency and effectiveness of adversarial training on smaller datasets.

Need for New Datasets and Evaluation Metrics

Current datasets often lack the diversity and complexity required to thoroughly evaluate the robustness of machine vision models.

- Creating diverse and challenging datasets: New datasets need to encompass a wider range of adversarial attacks, image types, and real-world scenarios. This includes scenarios where adversarial images are more realistic and difficult to detect. For instance, datasets might include images generated by more advanced generative adversarial networks (GANs).

- Developing novel evaluation metrics: Current metrics for evaluating adversarial robustness often have limitations. Researchers are working on metrics that better capture the subtle nuances of adversarial examples, including their effectiveness across various image types and applications. This includes metrics that measure the robustness of the model across different types of attacks, and also incorporate human judgment of the perceived naturalness of the adversarial image.

Future of Machine Vision AI in the Face of Adversarial Attacks

The future of machine vision AI depends heavily on its ability to adapt and overcome the challenge of adversarial attacks.

- Integration of defenses into real-world applications: Integrating robust machine vision systems into real-world applications is crucial. For example, autonomous vehicles and medical imaging systems need to be able to withstand adversarial attacks without compromising safety and efficacy. This necessitates ongoing research into how to deploy these robust systems into existing infrastructures and products.

Potential for New Algorithm Development

The development of new algorithms plays a critical role in the field of machine vision AI.

- Developing algorithms that detect adversarial images: Robust algorithms that can identify and classify adversarial images are needed to protect machine vision systems from these attacks. Such algorithms might leverage techniques like anomaly detection or comparing the image to a database of known adversarial examples.

Importance of Understanding Adversarial Image Behavior

A deeper understanding of how adversarial images manipulate machine vision systems is critical.

- Analysis of adversarial image characteristics: Researchers need to analyze the characteristics of adversarial images to identify patterns and develop countermeasures. This includes understanding how the adversarial perturbations affect the decision-making process of the machine vision algorithm.

Visual Representation of Potential Growth Areas

A simple table representing the potential growth areas in adversarial machine vision:

| Category | Description |
|---|---|
| Novel Architectures | Development of neural network architectures with built-in defenses against adversarial attacks. |
| Adversarial Training Techniques | Refined strategies to enhance robustness against unseen adversarial examples. |
| Transfer Learning for Robustness | Leveraging pre-trained models to improve robustness. |
| New Datasets and Evaluation Metrics | Creating datasets with greater diversity and complexity to assess robustness effectively. |

Closing Notes

In conclusion, machine vision AI’s vulnerability to adversarial images underscores the need for robust models and effective defense mechanisms. The ObjectNet dataset and MIT algorithms offer valuable tools for evaluating and improving the robustness of these systems. This analysis highlights the ongoing importance of research in this area, emphasizing the need for new datasets, evaluation metrics, and innovative algorithms to safeguard against adversarial attacks.

The future of machine vision AI lies in its ability to withstand these sophisticated attacks, paving the way for more reliable and trustworthy applications in diverse fields.