Lip sync AI deepfake WAV2LIP code how to unlock a world of possibilities, from crafting realistic lip movements to pushing the boundaries of digital creativity. This comprehensive guide delves into the technical intricacies of creating AI-powered lip-syncing deepfakes, using WAV2LIP code as a core component. We’ll explore various techniques, from pre-trained models to custom training, and discuss the practical applications, ethical considerations, and future trends in this rapidly evolving field.

We’ll cover everything from understanding the fundamental purpose of WAV2LIP code and its core algorithms to a practical step-by-step guide on implementation. The guide will also include crucial information on software and hardware requirements, various methods for high-quality lip sync, potential pitfalls and troubleshooting steps. Expect a thorough examination of different lip sync AI methods, their strengths and weaknesses, and a comparison of their accuracy and efficiency.

Finally, we’ll peer into the future of lip sync AI, anticipating potential advancements and their impact on various industries.

Introduction to Lip Sync AI Deepfakes

Lip sync AI deepfakes are a fascinating, and somewhat unsettling, application of artificial intelligence. They utilize sophisticated algorithms to seamlessly synchronize a person’s mouth movements with an audio track, effectively creating a video where the audio and visuals appear perfectly matched. This technology relies on complex machine learning models to analyze both the audio and video input and then generate a realistic lip movement output.

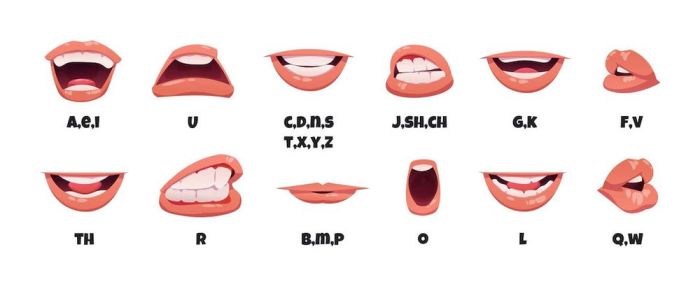

The resulting videos can be convincingly realistic, blurring the lines between authenticity and deception.The process involves several key steps. First, the input audio is processed to extract the audio waveform and identify the timing of syllables and phonemes. This audio analysis provides the temporal information for the lip movements. Next, the video input, typically a sequence of images, is analyzed to identify the speaker’s facial features, particularly the mouth region.

Deep learning models, trained on extensive datasets of audio-visual pairs, are then employed to generate the corresponding lip movements for the input audio. These models learn complex mappings between the audio features and the corresponding facial expressions, enabling the synthesis of realistic lip movements.

Types of Lip Sync AI Deepfakes

Lip sync AI deepfakes can be categorized into different approaches based on the method used for creating the lip movements. Pre-trained models, trained on massive datasets of videos and audio, offer a readily available solution for creating lip sync deepfakes. These models require minimal additional training for specific use cases. Conversely, custom training involves fine-tuning pre-trained models or developing entirely new models specifically for the target speaker or video.

This method allows for greater control and potentially better results, but it demands considerable computational resources and expertise. Both methods have their strengths and weaknesses depending on the desired level of realism and the availability of resources.

Components of Lip Sync AI Deepfakes

| Component | Description | Example |

|---|---|---|

| Audio Input | The audio track that the lip movements will be synchronized with. This can be anything from a song to a speech recording. | A recording of a person singing a song. |

| Video Input | A video recording of a person’s face, typically used as a reference for the lip movements. | A video clip of a person speaking. |

| Audio Analysis | The process of extracting the temporal information from the audio, including the timing of syllables and phonemes. | Identifying the precise moment when each syllable is spoken. |

| Facial Feature Extraction | The process of identifying and tracking the speaker’s facial features, especially the mouth region, from the video input. | Tracking the position of the lips, jaw, and other facial features in each frame of the video. |

| Deep Learning Model | A machine learning model trained on massive datasets of audio-visual pairs, that maps audio features to corresponding facial expressions. | A neural network model trained on millions of videos and corresponding audio tracks. |

| Lip Movement Synthesis | The process of generating the lip movements that correspond to the input audio. | Creating realistic lip movements that perfectly match the audio input. |

| Output Video | The final video where the audio and visual components are perfectly synchronized. | A video of a person singing a song with realistic lip movements. |

WAV2LIP Code Exploration

The WAV2LIP code, a cornerstone of Lip Sync AI deepfakes, meticulously maps audio waveforms to corresponding lip movements. This process, often complex, is crucial for generating realistic and believable lip-syncing effects. Understanding the code’s inner workings allows one to appreciate the intricate process of transforming audio into visual lip movements.

Fundamental Purpose of WAV2LIP Code, Lip sync ai deepfake wav2lip code how to

The core function of WAV2LIP code is to establish a direct correlation between audio input (a sound file) and corresponding lip movements. This translation process is achieved by learning the complex mapping between the temporal characteristics of the audio signal and the corresponding articulatory movements of the lips. This allows for the creation of convincing lip-syncing animations from virtually any audio source.

Core Functions and Algorithms in WAV2LIP Code

Several key algorithms and functions contribute to the efficacy of WAV2LIP code. These include: frame-by-frame analysis of audio and video, deep learning models for lip movement prediction, and sophisticated alignment techniques to synchronize the audio and video streams. The specific algorithms employed can vary depending on the particular implementation of WAV2LIP, but generally include recurrent neural networks (RNNs) for temporal modeling and convolutional neural networks (CNNs) for spatial feature extraction.

Code Structure and Components

A typical WAV2LIP implementation usually comprises several interconnected modules. One critical module is the audio preprocessing stage, which involves tasks such as noise reduction, normalization, and feature extraction from the audio signal. The video preprocessing module often handles tasks such as lip region detection and feature extraction from the video. The core model, which typically employs deep learning architectures, is trained on a vast dataset of audio-video pairs.

Finally, the output module generates the lip-synced video frames.

Code Libraries Used in WAV2LIP Projects

| Library | Description | Pros | Cons |

|---|---|---|---|

| TensorFlow | An open-source machine learning framework developed by Google. | Vast community support, extensive documentation, and wide range of tools for deep learning tasks. | Can be complex to set up for beginners, requires significant computational resources for large models. |

| PyTorch | Another popular open-source machine learning framework, known for its flexibility and ease of use. | Dynamic computation graphs, which can lead to more efficient code for certain tasks. Excellent community and tooling. | Learning curve can be steeper than TensorFlow, especially for those familiar with only one framework. |

| OpenCV | A comprehensive library for computer vision tasks. | Excellent for image and video processing, robust tools for tasks like face detection and feature extraction. | Might not be the most suitable for deep learning models alone, needs integration with other libraries. |

Choosing the right library depends on the specific needs of the project and the developer’s familiarity with different frameworks. For complex deep learning models, TensorFlow or PyTorch are generally preferred, while OpenCV provides vital tools for preprocessing audio and video data.

Deepfake Techniques for Lip Synchronization: Lip Sync Ai Deepfake Wav2lip Code How To

Deepfake technology, fueled by advancements in deep learning, has revolutionized the creation of realistic synthetic media. A crucial component of these deepfakes is lip synchronization, allowing for seamless integration of synthetic speech with a target’s facial movements. This process is not merely about mimicking lip shapes but involves a sophisticated understanding of the intricate relationship between audio and facial expressions.Deep learning models are the backbone of modern lip-sync AI deepfakes.

These models learn complex patterns and relationships within vast datasets, enabling them to predict and generate realistic lip movements corresponding to input audio. This capability has significant implications across various fields, from entertainment and social media to forensic analysis and security.

Deep Learning Models for Lip Sync

Deep learning models excel at learning intricate patterns in data. Various architectures are employed for lip synchronization, each with its strengths and weaknesses. Convolutional Neural Networks (CNNs) are particularly well-suited for processing visual data, enabling them to identify subtle facial features and their correlations with audio. Recurrent Neural Networks (RNNs), especially Long Short-Term Memory (LSTM) networks, are effective at capturing temporal dependencies in audio and video sequences.

Hybrid models, combining CNNs and RNNs, are increasingly popular, leveraging the strengths of both to achieve more accurate and nuanced lip synchronization.

Training Data Requirements

Accurate lip synchronization hinges on the quality and diversity of training data. This data typically comprises paired audio-visual sequences. The videos must be high-resolution and clear to capture subtle facial movements. The audio should be clean and aligned precisely with the corresponding video. A substantial amount of data is crucial for effective training, as the models need to encounter a wide range of expressions, speaking styles, and audio qualities.

For example, a model trained on only a few speakers and specific accents might struggle to generalize and synchronize lips for other individuals and different speech patterns. The dataset needs to encompass different ages, genders, ethnicities, and speaking styles to minimize biases and enhance generalization.

Audio Preprocessing for Improved Accuracy

Audio preprocessing plays a critical role in enhancing the accuracy of lip synchronization. Noisy or poorly recorded audio can significantly hinder the model’s ability to accurately predict lip movements. Techniques such as noise reduction, audio normalization, and speaker diarization (if multiple speakers are present) can significantly improve the quality of the audio input. For instance, background noise removal can prevent the model from misinterpreting ambient sounds as speech, leading to inaccuracies in lip synchronization.

Careful preprocessing steps help to ensure the model focuses on the intended speech signal, leading to more accurate and reliable lip synchronization results.

Practical Applications of Lip Sync AI

Lip Sync AI, a powerful technology leveraging deep learning, has moved beyond the realm of entertainment. Its potential extends significantly into various sectors, offering solutions that enhance communication, education, and accessibility. This exploration delves into the practical applications of this innovative technology, examining its utility in fields beyond entertainment.

Ever wanted to learn how to use lip sync AI deepfake wav2lip code? It’s a fascinating field, but lately, I’ve been more interested in the legal battles between tech giants like eBay and Amazon. There’s a major lawsuit surrounding poaching, racketeering, and fraud involving managers here , which is really highlighting the competitive landscape. Regardless of the legal drama, the lip sync AI deepfake wav2lip code is still a cool technology to explore, though.

Real-World Applications Beyond Entertainment

Lip Sync AI deepfakes are no longer confined to the entertainment industry. Their potential for practical applications is rapidly expanding. In situations where recording or replicating human speech is crucial, this technology can prove invaluable. For instance, in education, lip-synced videos of lectures or demonstrations can greatly improve learning accessibility. In the medical field, lip-synced explanations of complex procedures can aid in patient education and training.

Furthermore, lip-synced video presentations in corporate settings can improve communication clarity and efficiency.

Educational Applications

Lip Sync AI can revolutionize education by providing diverse learning experiences. Students can access lectures or tutorials in multiple languages, with the AI seamlessly synchronizing the audio and visuals. Imagine a video of a professor lecturing in Mandarin, seamlessly lip-synced with English subtitles. This technology can also facilitate interactive learning by creating simulations of real-world scenarios. A student could practice interacting with a patient in a simulated medical environment, observing the AI’s lip movements as they respond to a virtual patient’s questions.

Furthermore, the technology can create customized learning materials tailored to individual learning styles, with different visual presentations or speaking speeds.

Ethical Considerations

The use of lip sync AI deepfakes raises significant ethical concerns. The potential for misuse, such as creating misleading or fraudulent content, is a significant worry. Authenticity and transparency are critical when using this technology. Clear labeling and disclosure of AI-generated content are essential to avoid deception and maintain trust. Furthermore, issues of intellectual property rights and copyright infringement must be carefully considered.

The technology’s impact on employment, particularly in sectors reliant on live presentations, also requires thoughtful consideration.

Comparison of Lip Sync AI in Entertainment and Education

| Feature | Entertainment | Education |

|---|---|---|

| Primary Purpose | Creating engaging and entertaining content, often for entertainment value or social media trends. | Enhancing learning experiences, facilitating accessibility, and promoting knowledge dissemination. |

| Content Focus | Often focuses on visual appeal, narrative, and emotional impact. | Primarily focused on conveying accurate information, promoting comprehension, and enhancing understanding. |

| Ethical Concerns | Potential for misuse in creating misleading content, particularly in the realm of social media and viral trends. | Maintaining accuracy, avoiding misrepresentation, and promoting responsible use to ensure ethical learning experiences. |

| User Interaction | Typically passive consumption of content, often without active engagement. | Potential for interactive learning and engagement, tailoring content to individual learning needs. |

How to Implement Lip Sync AI Deepfakes

Bringing the power of AI to lip synchronization opens up a world of creative possibilities. From Hollywood-style movie production to subtle entertainment edits, the potential for altering and enhancing audio-visual content is immense. This section dives into the practical implementation of lip sync AI deepfakes, detailing the process from data collection to deployment.

Data Collection and Preparation

High-quality data is crucial for successful lip sync deepfakes. This involves capturing audio and video sequences of the target person, ensuring clear audio and video recordings. A synchronized recording is paramount, meaning the audio and video should precisely match each other frame-by-frame. Recording in a controlled environment with minimal background noise is essential to reduce artifacts and maintain clarity in the final product.

Ever wondered how to use lip sync AI deepfake wav2lip code? It’s a fascinating field, but recent news about the Disney Scarlett Johansson lawsuit settlement disney scarlett johansson lawsuit settled highlights the ethical implications of such technology. Ultimately, understanding the code behind lip sync AI deepfakes is crucial for both creative applications and responsible development.

The use of high-resolution cameras and microphones is recommended to capture details effectively. Multiple takes of the same segment are highly recommended to provide robustness in the training data.

Model Selection and Training

Numerous AI models excel at lip synchronization. Choosing the right model depends on the desired outcome, and the availability of resources. Models like those based on the Wav2lip framework are popular due to their effectiveness and ease of use. These models learn the mapping between audio and lip movements, enabling the seamless synchronization. The training process usually involves feeding the model with the collected data, allowing it to identify patterns and correlations between the audio and lip movements.

This process typically requires considerable computational resources and time. Significant computing power is usually needed, potentially involving dedicated hardware like GPUs.

Software and Hardware Requirements

Implementing lip sync AI deepfakes demands a robust setup. Powerful hardware is critical for training and running complex models. A high-end graphics processing unit (GPU) is highly recommended for handling the computational demands of model training and inference. A workstation with sufficient RAM is essential for efficient operation. Software-wise, programming languages like Python, along with libraries like TensorFlow or PyTorch, are commonly used for model development and training.

Frameworks like Wav2lip provide pre-built tools for lip synchronization tasks. Specific tools for audio editing, video processing, and data manipulation are also essential. A stable internet connection is necessary for accessing online resources and cloud-based services for training and inference.

Generating High-Quality Lip Sync Deepfakes

Several methods exist for generating high-quality lip sync deepfakes. Utilizing a pre-trained model, like those available from Wav2lip, allows for quick results, though customization might be limited. Customizing existing models can enhance realism and fine-tune lip synchronization to specific targets, but requires greater technical expertise and computational resources.

Installation and Running Wav2Lip Code

A step-by-step guide to installing and running Wav2Lip code is essential for practical implementation. First, ensure Python and the necessary libraries (like TensorFlow or PyTorch) are installed. Download the Wav2Lip code from its repository. Next, configure the environment, ensuring correct library dependencies are met. Prepare the audio and video data, making sure they align properly.

Run the model using the prepared data. Troubleshooting steps include verifying library compatibility, checking for errors in the data preparation process, and adjusting model parameters as needed.

Potential Pitfalls and Troubleshooting

Misaligned audio and video files are a common pitfall. Incorrect model configurations can lead to poor lip synchronization results. Inadequate computational resources can hinder the training process. Insufficient data quality can impact the accuracy of the lip sync results. Troubleshooting involves verifying data alignment, checking model parameters, increasing computational resources, and improving data quality.

The use of dedicated hardware like GPUs can alleviate the computational strain, leading to more efficient and accurate results.

Comparison of Different Lip Sync AI Methods

Lip synchronization using AI has rapidly evolved, offering various approaches with varying degrees of accuracy and efficiency. Understanding these different methods is crucial for choosing the right technique for a specific application. This section delves into the comparison of prominent lip sync AI methods, examining their strengths, weaknesses, and practical implications.Different AI methods employ varying techniques for mapping audio to lip movements, leading to distinct performance characteristics.

This comparison will illuminate the trade-offs between accuracy, complexity, and resource requirements for each method.

Accuracy and Efficiency of Different Approaches

Various algorithms achieve lip synchronization, each with unique strengths and weaknesses. Some methods rely heavily on deep learning models, while others leverage simpler, rule-based approaches. Deep learning models, trained on massive datasets, often achieve higher accuracy in complex scenarios. However, these models can be computationally intensive, requiring significant processing power and time.Rule-based approaches, on the other hand, are generally faster and less resource-intensive.

Figuring out lip sync AI deepfake wav2lip code can be tricky, but if you’re looking for some cool home upgrades, check out these amazing Amazon deals! 3 amazon home deals you shouldnt miss might just have the perfect items to enhance your space. Once you’ve got your home sorted, you can always dive back into the fascinating world of lip sync AI deepfake wav2lip code.

They may be suitable for simpler tasks or scenarios where real-time performance is paramount. The accuracy of rule-based systems, however, can be lower than deep learning models, especially in cases with complex or nuanced lip movements.

Types of Datasets Used to Train Lip Sync AI Models

The quality and quantity of training data significantly impact the performance of lip sync AI models. Large datasets comprising diverse audio and video recordings are crucial for effective model training. These datasets typically contain various speakers, accents, and types of audio, reflecting the real-world variability. For example, a dataset for training a lip sync model for a specific language might include a range of speakers from different regions within that language, ensuring that the model can handle a wider range of pronunciation variations.

Comparison of Lip Sync AI Methods

| Method | Accuracy | Complexity | Resources Needed |

|---|---|---|---|

| Deep Learning (Convolutional Neural Networks) | High | High | High (GPU, large datasets) |

| Rule-Based Systems (e.g., template matching) | Moderate | Low | Low (CPU, small datasets) |

| Hybrid Methods (combining deep learning and rule-based approaches) | High-Moderate | Moderate | Moderate |

Note: Accuracy is measured in terms of the percentage of correctly synchronized lip movements, while complexity refers to the computational resources needed for training and running the model. Resources needed include processing power (CPU or GPU), memory, and dataset size.This table provides a general comparison; specific implementations can vary, impacting the final accuracy, complexity, and resource requirements. For instance, a sophisticated deep learning model might achieve higher accuracy than a simpler rule-based system but at the cost of significantly higher computational demands.

Choosing the appropriate method depends heavily on the specific use case and available resources.

Future Trends in Lip Sync AI

Lip sync AI technology, currently a powerful tool for various applications, is poised for significant advancements. These improvements will likely reshape industries relying on video creation, entertainment, and even more specialized fields. The rapid pace of development in machine learning and deep learning fuels the potential for more realistic, seamless, and sophisticated lip-sync capabilities.

Potential Advancements in Real-Time Capabilities

Real-time lip-sync is crucial for applications like live streaming and video conferencing. Current systems often struggle with the speed and precision needed for smooth playback in real time. Future developments are expected to address these challenges. Faster processing speeds and more efficient algorithms will allow for real-time lip-synching without noticeable lag or artifacts.

Enhanced Accuracy and Naturalness

The current state of lip-sync AI frequently produces results that, while technically accurate, lack a natural, human-like quality. Future improvements in training data and neural network architectures are predicted to create more lifelike lip movements. This will involve more nuanced and complex models that can better adapt to individual speaker characteristics and dialects, leading to more authentic lip-sync performances.

Personalized Lip-Sync Models

A key future direction is the creation of personalized lip-sync models. These models will adapt to the specific nuances of individual speakers, including their unique speech patterns, vocal qualities, and even their facial features. This personalized approach will lead to far more realistic and natural-sounding lip-sync results, removing the current generalizability limitations of existing models.

Integration with Other AI Technologies

Future trends suggest an increased integration of lip-sync AI with other AI technologies. This might include integration with facial recognition, emotion analysis, or even text-to-speech systems. For example, imagine a video conferencing platform that automatically detects speaker emotions and adjusts the lip-sync to better reflect those emotions, enhancing communication and empathy.

Expansion into Novel Applications

The potential applications of lip-sync AI extend beyond the entertainment industry. Future developments might include creating realistic animated characters in movies or video games. The creation of realistic virtual avatars for social interactions, education, and business will become more commonplace. Furthermore, advanced lip-sync technology could be used to help people with speech impairments to communicate more effectively.

Improved Handling of Diverse Speakers and Languages

The current models often struggle with speakers from different ethnic backgrounds or with distinct accents. Future development in this area is crucial. The ability to accurately lip-sync for diverse languages and dialects will broaden the accessibility and utility of this technology.

Final Conclusion

In conclusion, this guide provides a robust foundation for understanding and implementing lip sync AI deepfakes using WAV2LIP code. By exploring the technical aspects, practical applications, and ethical considerations, we aim to equip you with the knowledge and tools to navigate this exciting frontier. The future of lip sync AI is brimming with potential, and this guide serves as a springboard for your own exploration and innovation in this transformative technology.