Facebook ads targeted by political preference are a powerful tool that lets campaigns reach specific demographics and interest groups. This in-depth look examines how these ads function, how they affect user behavior, what ethical questions they raise, and how Facebook’s policies have evolved.

We’ll explore the methods campaigns use to reach specific groups, from demographic and behavioral targeting to emotional appeals and issue-based arguments, along with the potential for misinformation and the strategies behind successful campaigns.

Facebook Political Advertising Targeting Methods

Facebook’s advertising platform lets political campaigns target their messages to specific demographics, interests, and behaviors. This granular targeting can be effective at reaching the voters most likely to be receptive to a candidate’s message, potentially swaying opinions and influencing election outcomes. However, the platform’s ad delivery algorithm also affects how well a campaign performs, and the ethical questions raised by targeted advertising must be acknowledged.

Understanding Facebook’s targeting options is crucial for campaigns that want to maximize their reach and impact. Different campaign types, from local elections to national races, call for different approaches and careful consideration of the target audience.

Targeting Options for Political Ads

Facebook offers a wide range of targeting options, allowing campaigns to create highly specific audiences. These options encompass demographics, interests, behaviors, and more, enabling a tailored approach to voter engagement.

- Demographic Targeting: This involves identifying and targeting voters based on their age, location, gender, relationship status, education level, and other demographic factors. For example, a campaign targeting young voters might focus on individuals between the ages of 18 and 25, or concentrate on a particular state or region.

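- Interest-Based Targeting: Campaigns can identify and target voters based on their interests, including social issues, causes, or hobbies. A campaign promoting environmental policies, for instance, might target users who have expressed interest in environmental causes or climate change. Targeting by political affiliation itself has become more limited: in early 2022, Meta removed many detailed targeting options tied to sensitive topics, including political beliefs.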

- Behavioral Targeting: This involves identifying and targeting voters based on their online behavior, such as pages visited, groups joined, posts interacted with, and purchase history. A candidate campaigning on economic issues might target users who frequently engage with articles and discussions related to the economy.

- Custom Audiences: This allows campaigns to upload lists of existing contacts, such as email addresses or phone numbers, to create a custom audience for targeting. This can be valuable for reaching supporters or potential supporters who have already shown interest.

- Lookalike Audiences: This sophisticated technique allows campaigns to identify new users who share similar characteristics with their existing audience. This can be used to expand reach and identify potential voters with similar interests or behaviors.
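To make the Custom Audiences item above more concrete: ad platforms generally expect uploaded contact lists to be normalized and hashed (typically with SHA-256) rather than sent as plain text. The following is a minimal sketch of that preparation step, not Facebook’s own tooling. The function names are hypothetical, and the exact normalization rules should be taken from the platform’s documentation.

```python
import hashlib


def normalize_email(email: str) -> str:
    """Trim surrounding whitespace and lowercase the address."""
    return email.strip().lower()


def hash_identifier(value: str) -> str:
    """Return the SHA-256 hex digest of an already-normalized identifier."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def prepare_custom_audience(emails: list[str]) -> list[str]:
    """Normalize, de-duplicate, and hash a supporter email list.

    Hypothetical helper: it only prepares the data and uploads nothing.
    """
    seen: set[str] = set()
    hashed: list[str] = []
    for raw in emails:
        normalized = normalize_email(raw)
        # Skip empty entries and addresses we have already processed.
        if not normalized or normalized in seen:
            continue
        seen.add(normalized)
        hashed.append(hash_identifier(normalized))
    return hashed


# Example with made-up addresses: the first two normalize to the same value.
audience = prepare_custom_audience([" Voter@Example.com ", "voter@example.com", ""])
```

Hashing before upload means the raw contact list never leaves the campaign in readable form, although the hashed list is still personal data and raises the consent questions discussed later in this article.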

Targeting for Different Campaign Types

The targeting strategies for local and national campaigns often differ significantly.

- Local Elections: Local campaigns tend to focus on specific geographic areas and often leverage demographic targeting to reach voters within particular neighborhoods or communities. Interest-based targeting might include targeting voters associated with local issues or organizations.

- National Campaigns: National campaigns often need to reach a broader range of voters across the country. They utilize a combination of demographic, interest, and behavioral targeting strategies to reach voters with varying backgrounds and beliefs. This frequently involves micro-targeting specific regions to address regional concerns or interests.

Impact of Facebook’s Algorithm

Facebook’s algorithm plays a crucial role in determining the effectiveness of political advertising targeting. The algorithm prioritizes content that aligns with user interests and preferences, potentially influencing the visibility of a campaign’s ads. The algorithm also considers factors like ad relevance and engagement rates.
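As a rough illustration of how relevance and engagement can outweigh budget, the sketch below ranks ads with a toy score that combines an advertiser’s bid, a predicted engagement rate, and a quality adjustment. This is a simplified model for intuition only, not Facebook’s actual delivery algorithm. The class, field names, and numbers are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdCandidate:
    name: str
    bid: float                    # what the advertiser will pay per desired action
    estimated_action_rate: float  # predicted chance the user engages (0 to 1)
    quality: float                # relevance/quality adjustment (hypothetical scale)


def total_value(ad: AdCandidate) -> float:
    """Toy score: bid weighted by predicted engagement, plus a quality term."""
    return ad.bid * ad.estimated_action_rate + ad.quality


def rank_ads(ads: list[AdCandidate]) -> list[AdCandidate]:
    """Order candidates from highest to lowest toy score."""
    return sorted(ads, key=total_value, reverse=True)


# Hypothetical candidates competing for one impression.
candidates = [
    AdCandidate("high_bid_low_relevance", bid=10.0, estimated_action_rate=0.01, quality=0.0),
    AdCandidate("low_bid_high_relevance", bid=4.0, estimated_action_rate=0.05, quality=0.1),
]
winner = rank_ads(candidates)[0]
```

In this toy setup the lower bid wins the impression because its predicted engagement and quality are higher, which is one way a well-targeted message can outperform a better-funded but poorly matched one.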

Targeting Method Comparison

| Targeting Category | Example | Description |
|---|---|---|
| Demographics | Age | Specify age ranges for targeting, e.g., 18-24 |
| Interests | Political Affiliation | Target users with specific political views, e.g., Democrats |
| Behaviors | Online Activity | Target users based on their online activity patterns, e.g., frequent engagement with political news |
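The categories in the table can be thought of as filters that are combined into a single audience definition. The sketch below models that idea with two hypothetical classes, `UserProfile` and `AudienceSpec`. It is an illustration of the concept under simplified assumptions, not the structure Facebook’s ad tools actually use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserProfile:
    age: int
    region: str
    interests: frozenset[str] = frozenset()
    behaviors: frozenset[str] = frozenset()


@dataclass(frozen=True)
class AudienceSpec:
    min_age: int
    max_age: int
    regions: frozenset[str]
    any_interests: frozenset[str] = frozenset()  # empty means "no interest filter"
    any_behaviors: frozenset[str] = frozenset()  # empty means "no behavior filter"

    def matches(self, user: UserProfile) -> bool:
        """Return True if the user passes every configured filter."""
        if not (self.min_age <= user.age <= self.max_age):
            return False
        if user.region not in self.regions:
            return False
        # A non-empty filter requires at least one overlapping value.
        if self.any_interests and not (self.any_interests & user.interests):
            return False
        if self.any_behaviors and not (self.any_behaviors & user.behaviors):
            return False
        return True


# Hypothetical audience: 18-24 year olds in one state who follow climate topics.
spec = AudienceSpec(18, 24, frozenset({"Ohio"}), any_interests=frozenset({"climate change"}))
users = [
    UserProfile(22, "Ohio", frozenset({"climate change", "hiking"})),
    UserProfile(22, "Ohio", frozenset({"cooking"})),
    UserProfile(40, "Ohio", frozenset({"climate change"})),
]
matched = [u for u in users if spec.matches(u)]
```

Only the first user passes all three filters, which shows how quickly stacked criteria narrow an audience.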

Impact of Political Ads on User Behavior

Political advertising on Facebook, like any form of persuasive communication, can significantly influence user behavior. From shaping opinions to affecting voter turnout, the impact of these ads is complex and multifaceted. Understanding the mechanisms behind this influence is crucial both for political strategists and for individuals who want to critically evaluate the information they encounter online. Analyzing successful and unsuccessful campaigns provides valuable insight into the effectiveness of various approaches.

Through targeted strategies, these ads can affect user engagement and decision-making in ways that extend beyond simply informing voters about candidates and policies. The carefully crafted narratives, emotional appeals, and persuasive language used in these ads can subtly steer public opinion, fostering support for specific candidates or policies.

Potential Influence on User Engagement and Decision-Making

Political advertisements can trigger emotional responses, leading to increased engagement and memorability. The use of strong visuals and evocative language can generate enthusiasm or apprehension in viewers. However, the nature of this engagement isn’t always positive. Negative campaigning, for example, can sometimes alienate potential supporters or polarize public discourse.

Impact on Public Opinion and Voter Turnout

Political ads, strategically designed and deployed, can effectively shape public opinion. By emphasizing certain issues and highlighting specific candidate traits, campaigns can create a particular narrative around a candidate. This targeted messaging, if successful, can influence voters to prioritize specific issues or select particular candidates. Additionally, well-executed campaigns can motivate voters, increasing participation in elections. Conversely, poorly targeted or misleading ads can have the opposite effect, undermining public trust or discouraging engagement.

Examples of Successful and Unsuccessful Campaigns

The effectiveness of a political ad campaign hinges on numerous factors, including the target audience, the chosen message, and the overall strategy. A campaign emphasizing a candidate’s experience in addressing specific policy issues, paired with relatable imagery, might resonate with a broader segment of the electorate. Conversely, a campaign relying solely on fear-mongering or unsubstantiated accusations might alienate potential supporters and ultimately fail to achieve its objectives.

Psychological Factors Influencing User Responses

Several psychological factors play a role in shaping how individuals respond to political advertisements. Cognitive biases, such as confirmation bias, can lead individuals to favor information that aligns with their pre-existing beliefs. Emotional appeals, such as patriotism or fear, can sway opinions, especially if the appeals are well-targeted. Furthermore, the framing of information and the use of persuasive rhetoric can subtly influence attitudes.

Comparison of Political Ad Strategies

| Strategy | Target Audience | Objective |
|---|---|---|
| Emotional Appeals | Voters who prioritize social issues | Evoke strong emotional responses, often associated with values like compassion, justice, or security |
| Issue-Based | Voters focused on specific policies | Highlight specific policy details, emphasizing the candidate’s stance on key issues |

Different strategies for political ad campaigns target distinct segments of the population. Emotional appeals often resonate with voters concerned with social issues, while issue-based approaches focus on voters who prioritize specific policies. Understanding the nuances of each strategy is crucial for creating effective campaigns.

Ethical Considerations of Political Advertising

Political advertising, while a crucial component of democratic processes, presents unique ethical challenges, particularly on social media platforms like Facebook. The vast reach and targeting capabilities of these platforms amplify the potential for manipulation and harm, demanding careful consideration of transparency, accountability, and user well-being. Because political persuasion is often emotionally charged and highly contested, the ethical implications of advertising techniques deserve critical examination.

The digital age has transformed political discourse, giving campaigns unprecedented access to potential voters while also opening new avenues for manipulation and misinformation. Robust ethical frameworks and regulations are more important than ever for ensuring fair and equitable political engagement.

Potential Ethical Concerns

Political advertising on Facebook, while enabling broad dissemination of information, can be exploited for harmful purposes. Concerns include the potential for misleading or false information, undue influence through targeted campaigns, and the exploitation of user data for political gain. Furthermore, the amplification of extremist viewpoints through algorithmic filtering and targeted advertising presents a significant ethical challenge, potentially leading to the polarization of society.

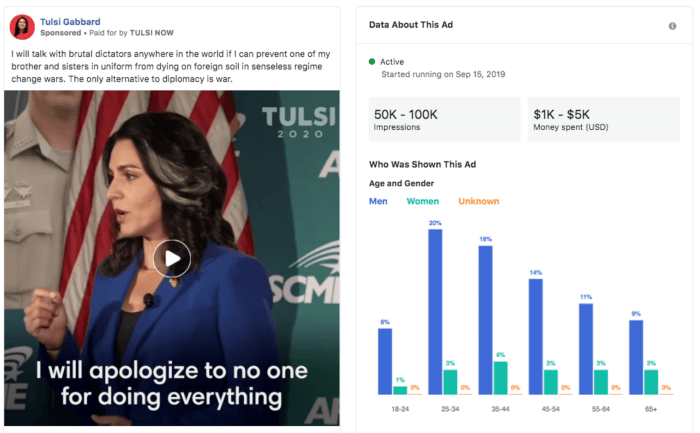

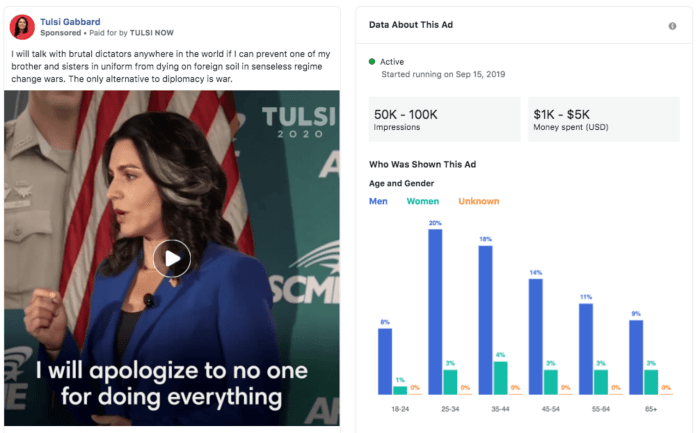

Transparency and Accountability

Transparency in political advertising is paramount. Clear disclosure of sponsorships and funding sources is essential for informed decision-making by users. This includes not only identifying the entities behind the ads but also outlining the specific strategies used to reach targeted audiences. Robust accountability mechanisms, including independent fact-checking initiatives and mechanisms for redressal, are crucial to counter potential misrepresentations and falsehoods.

Accountability mechanisms should allow users to report misleading content and enable platforms to take action.

Biases in Targeting and User Privacy

Targeted advertising on Facebook can potentially introduce biases into the political process. If algorithms prioritize specific demographics or interests, certain viewpoints might be disproportionately amplified, while others are suppressed. This raises significant concerns about user privacy. Data collection practices used to target ads must be transparent and comply with relevant privacy regulations, ensuring that user data is not misused for political manipulation.

The use of sensitive personal data to create highly tailored political messages without user consent raises ethical questions about the limits of political advertising.

Regulating Political Advertising on Social Media

Various approaches exist to regulate political advertising on social media platforms. Some advocate for stricter disclosure requirements, while others propose independent oversight bodies to monitor and evaluate campaigns. Different countries have implemented various approaches to regulate political advertising online, ranging from voluntary codes of conduct to mandatory disclosure requirements and independent regulatory bodies. These regulations often vary depending on the specific political context and legal framework of each country.

Comparative analysis of these approaches can provide valuable insights into the effectiveness of different regulatory models.

Ethical Guidelines for Political Advertising

A comprehensive set of ethical guidelines is essential to ensure responsible political advertising on social media. These guidelines should prioritize transparency, accountability, and respect for user privacy and well-being.

- Transparency in disclosing sponsorships: Clear and prominent disclosure of sponsorships and funding sources is crucial. This should extend to all forms of political advertising, including sponsored content, paid posts, and targeted ads. Examples include prominently displaying the sponsor’s name or organization on the ad and including a clear statement about funding sources in the ad’s description.

- Avoiding misinformation and harmful content: Platforms must actively combat the spread of misinformation and harmful content. This includes implementing robust fact-checking mechanisms and clear guidelines for acceptable content in the political advertising space. Examples include establishing partnerships with independent fact-checking organizations and developing content moderation policies that address the potential spread of hate speech.

- Respecting user privacy and data security: The collection and use of user data for political advertising must be transparent and comply with all relevant privacy regulations. Platforms must ensure that user data is not misused for political manipulation or profiling. Examples include providing users with clear information about how their data is being used for targeted advertising and offering users control over their data.

- Preventing hate speech and discrimination: Political advertising should not promote hate speech, discrimination, or violence. Clear guidelines and robust moderation policies are essential to prevent the spread of such content. Examples include explicitly prohibiting hate speech, discrimination, and incitement to violence in advertising content and establishing clear processes for reporting and addressing such violations.

Evolution of Facebook’s Political Advertising Policies

Facebook’s approach to political advertising has undergone significant transformations, shaped by public pressure, technological advancements, and evolving societal norms. Initially, the platform’s policies were relatively permissive, allowing for a wide range of political content. However, as the platform’s influence grew, so did scrutiny of its role in political discourse. This evolution reflects a broader societal discussion about the responsibility of tech giants in shaping public opinion and ensuring fair elections.

Historical Overview of Facebook’s Policies

Facebook’s initial approach to political advertising was largely hands-off. This stance allowed for a wide array of content, including potentially misleading or inflammatory material. This laissez-faire attitude was challenged as the platform’s user base grew exponentially, and its influence on political campaigns became undeniable. The early days were characterized by a lack of stringent regulations, making it challenging to discern credible information from misinformation.

Evolution of Policies in Response to Societal Norms and Technological Advancements

Facebook’s policies have evolved significantly in response to public pressure and technological advancements. The rise of social media as a primary source of information, coupled with the increasing sophistication of online campaigns, highlighted the need for more stringent regulations. The platform’s increasing influence in political discourse prompted greater scrutiny from regulatory bodies, civil society organizations, and users themselves.

Impact of User Feedback and Public Pressure on Facebook’s Policies

User feedback and public pressure have been pivotal in driving changes to Facebook’s political advertising policies. Public outcry over instances of misinformation and manipulation prompted the company to introduce new guidelines and restrictions. Examples include the introduction of fact-checking partnerships and the implementation of stricter rules regarding the spread of fake news. The public’s concerns regarding the platform’s influence on elections became a significant factor in shaping policy decisions.

Comparison with Other Social Media Platforms

Compared to other social media platforms, Facebook’s approach to political advertising has demonstrated a blend of proactive measures and reactive adjustments. While some platforms have opted for more restrictive approaches, others have maintained a more permissive stance. This diverse landscape reflects the complexities of regulating political discourse online and the ongoing debate about the appropriate level of intervention by social media companies.

The effectiveness and fairness of these policies are subject to ongoing scrutiny and debate.

Timeline of Key Events and Policy Changes

| Year | Event | Description |
|---|---|---|
| 2018 | New Transparency Policy | Facebook introduced a new policy aimed at increasing transparency regarding political advertising. This involved requiring more detailed disclosures about the sources and spending behind these ads. |
| 2020 | COVID-19 Campaign Policies | The COVID-19 pandemic led to adjustments in Facebook’s policies regarding political advertising. This included measures designed to prevent the spread of misinformation related to the pandemic and its impact on elections. |

Misinformation and Disinformation in Political Ads

Political advertising, while a crucial part of democratic discourse, can be a breeding ground for misinformation and disinformation. Facebook, with its vast user base, presents a significant platform for the spread of these harmful narratives. Understanding how these tactics operate and how to combat them is vital for maintaining a healthy and informed electorate.

Mechanisms of Spread

Political advertisements on Facebook can exploit a variety of methods to disseminate false or misleading information. Targeting algorithms can be used to specifically reach individuals susceptible to particular narratives. The platform’s format, with its concise and often emotionally charged posts, can make it difficult for users to critically evaluate the information presented. Furthermore, the rapid dissemination of content through shares and comments can amplify false claims exponentially, creating a cascade effect that spreads misinformation far and wide.

The use of emotionally evocative language and misleading visuals further compounds the problem, making it difficult for viewers to discern truth from falsehood.

Challenges in Identification and Combating

Identifying and combating misinformation in political ads presents a significant challenge. The sheer volume of content on Facebook makes manual fact-checking an impractical endeavor. Automated systems often struggle with nuanced language and complex issues, leading to false positives or missed instances of disinformation. Additionally, the dynamic nature of misinformation, with its constant evolution and adaptation, makes maintaining up-to-date fact-checking resources a constant struggle.

Misinformation frequently exploits existing biases and anxieties, making it particularly difficult to counter effectively.
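The trade-off between false positives and missed disinformation is usually expressed as precision and recall. The short worked example below uses made-up counts to show how the two measures are computed. The function name and the numbers are illustrative assumptions, not measurements from any real moderation system.

```python
def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> tuple[float, float]:
    """Compute precision and recall for an automated misinformation filter.

    precision: share of flagged ads that really were misleading
    recall:    share of misleading ads that the filter actually caught
    """
    flagged = true_pos + false_pos
    actually_misleading = true_pos + false_neg
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actually_misleading if actually_misleading else 0.0
    return precision, recall


# Hypothetical review of a filter's output:
# 80 misleading ads correctly flagged, 20 legitimate ads wrongly flagged,
# and 120 misleading ads that slipped through.
precision, recall = precision_recall(true_pos=80, false_pos=20, false_neg=120)
```

With these counts, precision is 0.8 and recall is 0.4: most flags are correct, yet more than half of the misleading ads go undetected. Tuning a filter to catch more of them typically raises the number of legitimate ads flagged by mistake.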

Fact-Checking and Debunking Strategies

Fact-checking initiatives play a critical role in countering misinformation. Independent fact-checking organizations can play a key role in analyzing claims and providing accurate assessments. Collaboration between fact-checkers, social media platforms, and educational institutions is crucial to combating the spread of false narratives. The development of tools that allow users to readily identify potentially false information is also essential.

This could include incorporating clear labels indicating potentially false content, allowing users to easily access verified information, and providing concise explanations of why certain claims are inaccurate.

Examples of Successful Campaigns

Several organizations and individuals have successfully engaged in campaigns to combat misinformation. These efforts often involve creating clear and concise rebuttals to false claims, using visuals and interactive content to explain the inaccuracies, and highlighting the source of the misinformation. Targeted campaigns to address specific narratives circulating on the platform have demonstrated success in countering disinformation. Social media campaigns highlighting reliable sources and providing educational content on critical thinking skills can also be effective in countering the spread of misinformation.

Common Misinformation and Disinformation Techniques

| Type | Description | Example |
|---|---|---|
| Falsehoods | Statements that are completely fabricated. | Claiming, with no supporting evidence, that a candidate received foreign funding. |
| Half-Truths | Statements that contain a kernel of truth but are misleading. | Exaggerating policy benefits. For instance, claiming a tax cut will lead to a 100% increase in GDP growth, when the actual impact is much smaller and comes with other consequences. |
| Misleading Visuals | Manipulating images or videos to create a false impression. | Using a picture of a candidate with a crowd to suggest widespread support, when the crowd was significantly smaller than implied. |
| Emotional Appeals | Using emotional language and imagery to manipulate viewers into accepting a claim. | Creating an advertisement with exaggerated fear-mongering to suggest a candidate’s policies will lead to disaster. |

Conclusion

Facebook’s political ads represent a complex interplay of targeting strategies, user behavior, ethical concerns, and evolving policies. Their power to influence public opinion is significant, which demands transparency, accountability, and careful attention to the potential for misinformation. As Facebook continues to adapt to the political landscape, it’s crucial to remain vigilant and informed about the implications of these ads.