The reintroduction of the Earn It Act’s Section 230 amendment sets the stage for a debate about online platforms’ responsibilities. It raises crucial questions about how online content moderation will evolve, potentially affecting everything from social media to e-commerce. The proposed changes could shift the digital landscape significantly, and their impact on user behavior and platform operations deserves careful consideration.

This article reviews the history of Section 230, previous attempts to modify it, and the motivations behind this particular reintroduction. It analyzes the potential ramifications for various online platforms, including social media, e-commerce, and news aggregators. It also explores the impact on user behavior and online discourse, as well as potential conflicts with existing laws.

Background of the Amendment

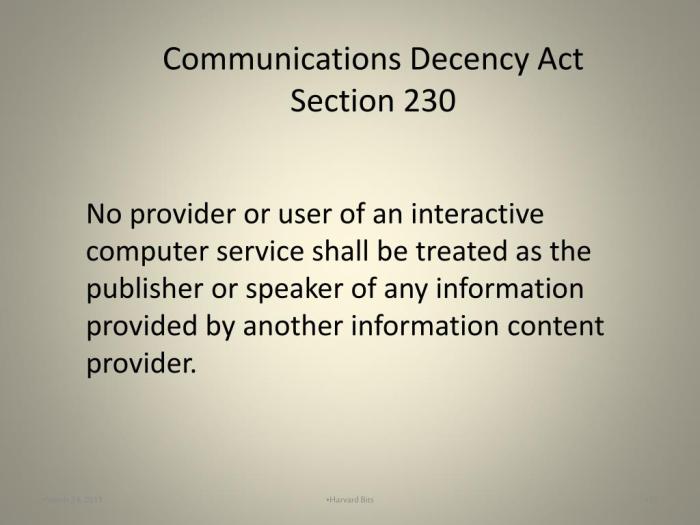

Section 230 of the Communications Decency Act, enacted in 1996, has been a cornerstone of online discourse and platform regulation. It shields online platforms from liability for content generated by users, fostering a free exchange of ideas and encouraging innovation in the digital space. However, this protection has been increasingly debated and challenged in recent years. The legal and political context surrounding Section 230 has been fraught with tension.

The reintroduction of the Earn It Act’s Section 230 amendment is definitely a hot topic right now. It’s interesting to see how this connects to emerging technologies like the Alphabet Wing drone air traffic control app. This app, aiming for safer drone operations, could potentially influence how the amendment shapes the future of online services.

Ultimately, the Earn It Act amendment’s success will depend on a lot of factors and public feedback.

Critics argue that it allows platforms to host harmful content without accountability, while proponents maintain that it is crucial for the development and flourishing of the internet. This ongoing debate highlights the complex interplay between freedom of expression, platform responsibility, and public safety in the digital age. The reintroduction of the amendment stems from a confluence of factors, including concerns about misinformation, hate speech, and the spread of harmful content online.

Proponents believe that Section 230 needs adjustments to hold platforms accountable for content that violates existing laws, while preserving the crucial role of platforms in fostering online discourse.

Proposed Changes to Section 230

The specific changes proposed in the reintroduction aim to refine Section 230’s application in the modern digital environment. These proposals are expected to address the current challenges posed by online content moderation, potentially offering a more balanced approach to platform liability and user safety. This revised approach seeks to clarify and potentially redefine the parameters of platform responsibility while still encouraging the innovation and development of the internet.

History of Section 230

Section 230, initially intended to encourage the growth of online services, has seen its legal interpretation evolve over time. The original intent was to safeguard platforms from being held liable for user-generated content, encouraging their development and the subsequent growth of the internet. Over time, the application of Section 230 has become more complex as the digital landscape has evolved.

Several court cases and legislative debates have shaped the interpretation of the law.

Key Legal and Political Context

The political and legal context surrounding previous attempts to amend Section 230 has often been contentious. Different factions have voiced contrasting perspectives, highlighting the significant social and political implications of any changes to the law. These differing views often revolve around balancing platform responsibilities with user freedoms. The ongoing debates emphasize the delicate balance required to maintain a vibrant online environment while ensuring accountability for harmful content.

Motivations Behind the Reintroduction

Several motivations underlie the reintroduction of this amendment. The bill’s stated focus is online child sexual exploitation; the broader debate it feeds into also covers issues such as the spread of misinformation and hate speech online. The reintroduction is intended to better align the law with the evolving challenges of the digital age. A key motivation is to ensure that platforms are held responsible for content that violates existing laws, while preserving the free exchange of ideas.

Impact on Online Platforms

The reintroduction of the Earn It Act Section 230 amendment promises significant shifts in the landscape of online platforms. This amendment, aiming to redefine the liability protections currently afforded to these platforms, will force them to adapt and potentially reshape their strategies for content moderation, user engagement, and overall business models. The ramifications are far-reaching, impacting not just social media giants but also e-commerce platforms and others relying on user-generated content. This amendment’s implications will vary based on the specific platform’s structure and business model.

For instance, platforms heavily reliant on user-generated content, such as social media sites, will likely be most affected. The amendment’s potential impact on e-commerce platforms, while significant, may manifest differently, focusing more on the verification and authenticity of products and seller information.

Potential Effects on Social Media Platforms

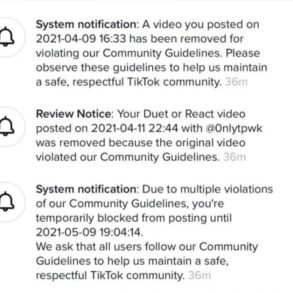

Social media platforms, characterized by their reliance on user-generated content, will experience substantial adjustments. The amendment’s emphasis on platform accountability for harmful content could lead to stricter content moderation policies. This might involve more proactive measures to identify and remove content deemed problematic, potentially affecting the free flow of information and expression. Platforms will likely implement more robust verification and reporting mechanisms to ensure compliance with the new standards.

User-generated content might be subjected to additional scrutiny, potentially impacting user engagement and platform usage patterns. The line between acceptable and unacceptable content could become increasingly blurred, leading to complex legal and operational challenges. Platforms will likely have to develop more comprehensive content moderation guidelines and training programs for their moderators. Increased scrutiny could result in the hiring of more moderators and possibly outsourcing moderation tasks.

Adaptation Strategies for E-commerce Platforms

E-commerce platforms will need to adapt to the amendment’s provisions regarding the verification of sellers and products. This will likely entail a shift toward more rigorous verification procedures for vendors, including mandatory documentation and identity checks. Detailed seller profiles with verification statuses could become a common feature, impacting the ease of doing business on these platforms. Furthermore, platforms may need to develop more robust systems to identify and address fraudulent or misleading product listings, which could necessitate partnerships with regulatory bodies.

Potential Conflicts with Existing Platform Practices

The amendment may create conflicts with existing platform practices. Many platforms currently rely on automated content moderation systems, which may struggle to accurately assess the context and intent behind user-generated content in the light of the new requirements. The amendment’s emphasis on user responsibility might clash with existing policies that encourage user engagement and platform activity.

Impact on Content Moderation Policies

Content moderation policies will undergo a significant transformation. The amendment will likely necessitate more nuanced and comprehensive content moderation policies, moving away from simple filtering towards a more contextual and human-centric approach. This shift could lead to a need for greater transparency in the platform’s content moderation procedures. Furthermore, platforms will need to be prepared for potential legal challenges and scrutiny related to their content moderation decisions.

Training and education for moderators will become crucial to ensuring compliance and mitigating potential legal risks.

Implications for User Behavior

The reintroduction of the Earn It Act Section 230 amendment brings significant implications for user behavior online. Users, accustomed to a certain level of platform freedom and a perceived lack of accountability, are likely to react in diverse ways to the proposed changes. This amendment, aiming to hold platforms more responsible for content, could potentially alter the dynamic between users and the online spaces they inhabit. This shift in the regulatory landscape necessitates a careful consideration of how users will adapt and interact with online platforms.

The anticipated changes in content moderation and platform accountability could lead to noticeable shifts in user behavior, from increased scrutiny of content to alterations in the way users engage with online communities. Understanding these potential shifts is crucial to anticipating the long-term impact of this amendment.

Changes in Content Moderation Practices

The amendment’s focus on platform accountability for content moderation will undoubtedly affect how users interact with the online world. Users may become more vigilant in reporting content they deem harmful or inappropriate. This heightened awareness could lead to a more demanding approach toward content moderation policies, pushing platforms to adopt more stringent guidelines. Conversely, some users might feel their freedom of expression is being curtailed, potentially leading to reduced participation or even the development of alternative, less regulated platforms.

This potential for user-driven changes to content moderation policies is a crucial element to understand.

Platform Accountability and User Trust

The amendment’s impact on platform accountability directly affects user trust. Users are likely to become more discerning about the platforms they choose to utilize. A perceived lack of platform responsibility could result in users migrating to alternative platforms that align better with their expectations. For example, if users feel a particular platform is not adequately addressing harmful content, they may switch to a platform with a more transparent or stringent content moderation policy.

Similarly, increased scrutiny of platform behavior might foster a greater reliance on independent fact-checking and verification tools.

Potential Shifts in Online Discourse

The amendment’s implications for platform accountability will undoubtedly affect online discourse. Users might become more cautious in expressing opinions or engaging in discussions, potentially leading to self-censorship. Conversely, some users might view the amendment as an opportunity to express their views more freely, confident in the increased scrutiny of platforms. This change in the dynamic could also influence the types of content shared and discussed online, leading to a potential shift in the balance of online discourse.

Increased User Scrutiny of Online Platforms

Users are increasingly aware of the role platforms play in shaping their online experience. The amendment’s emphasis on platform accountability will undoubtedly heighten this scrutiny. Users may become more critical of the algorithms, moderation policies, and data practices employed by various platforms. This enhanced awareness will likely prompt a more discerning approach to platform selection and usage, with users becoming more aware of the potential consequences of their online interactions.

The reintroduction of the Earn It Act Section 230 amendment is definitely a hot topic right now. It’s all about platform accountability, and while I’m not an expert on the specifics, I am curious about how these changes might impact mobile app security. For example, WhatsApp’s Android fingerprint unlock feature already prioritizes user security, and I wonder if these legislative changes might encourage more of this kind of proactive security.

Ultimately, the Earn It Act amendment’s success will hinge on how effectively it addresses the evolving needs of online platforms.

This increased scrutiny will likely drive a more active engagement with the policies and practices of online platforms.

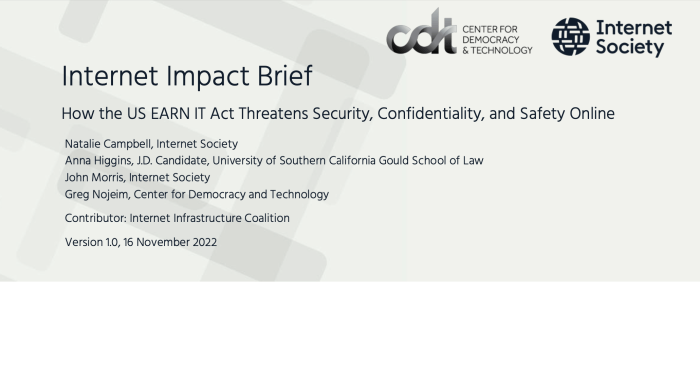

Comparison with Existing Laws and Regulations

The proposed amendment to Section 230 of the Communications Decency Act presents a significant shift in the legal landscape for online platforms. Understanding its impact requires a careful comparison with existing laws and regulations, particularly those governing online speech and platform liability. This comparison highlights areas of alignment and divergence, potential conflicts, and the broader implications for the digital ecosystem. The existing legal framework surrounding online speech is complex and often contested.

Different jurisdictions and legal traditions have established varying standards for regulating online content. This complexity is reflected in the multitude of cases and precedents that have shaped the understanding of platform liability. The amendment’s proposed changes aim to redefine the parameters of this framework, necessitating a detailed comparison with existing laws to anticipate the potential ramifications.

The reintroduction of the “Earn It Act” Section 230 amendment is definitely a hot topic right now. While that’s important, I’ve also been digging into some exciting new Android updates, like Android 13 QPR3 beta 2.1, which is looking pretty promising. It’s fascinating to see how these technical advancements can shape the future, and it makes me think back to the broader implications of the “Earn It Act” amendment and how it could affect digital platforms.

Comparison of the Amendment’s Approach to Existing Legal Precedents

The amendment’s approach to platform liability deviates from some established legal precedents. While existing laws generally protect platforms from liability for user-generated content, the proposed amendment introduces a stricter standard, potentially holding platforms accountable for content that is deemed harmful or illegal. This shift contrasts with the “safe harbor” protections currently afforded to platforms under Section 230, and could significantly alter the balance of power between platforms and users.

Areas of Alignment and Divergence from Existing Legal Frameworks

The amendment displays both alignment and divergence with existing legal frameworks. For example, the proposed language aligns with existing laws regarding the dissemination of illegal content. However, the amendment diverges significantly from existing legal precedents regarding the regulation of harmful but not illegal content. This divergence is a crucial point of contention, as it raises concerns about the potential for overreach and chilling effects on online expression.

Potential Conflicts Between the Amendment and Other Legal Areas

The amendment’s proposed changes could create conflicts with other legal areas, such as intellectual property law and contract law. For instance, the amendment’s focus on platform liability for user-generated content could inadvertently impact the rights of content creators and the terms of service agreements between platforms and users. The potential for conflicting interpretations and applications of different legal principles warrants careful consideration.

Examples of Potential Conflicts

Consider a case where a user posts copyrighted material on a platform without permission. Existing copyright laws would typically address this issue, but the amendment’s proposed changes to Section 230 could potentially place additional liability on the platform. This highlights the potential for conflicts between the amendment and established copyright protections. Similarly, the amendment’s impact on contract law could affect the ability of platforms to enforce their terms of service regarding user-generated content.

Potential Challenges and Opportunities

Navigating the complexities of online content moderation is fraught with difficulties. The proposed amendment to Section 230, while aiming to foster a safer online environment, presents unique challenges in its implementation and potential for legal disputes. Understanding these hurdles, alongside the innovative possibilities it unlocks, is crucial for a productive discussion.

Potential Implementation Challenges

The amendment’s intricacies regarding platform liability and the practical application of its provisions could prove challenging. Different platforms will face varying degrees of difficulty in adjusting to the new requirements. Smaller platforms, particularly those with limited resources, may find it particularly challenging to comply with the new standards, possibly leading to a disproportionate impact on their ability to operate.

Furthermore, establishing clear and consistent standards across diverse content types and user communities is a significant hurdle.

- Defining “harmful” content objectively is a complex task, especially in the face of subjective interpretations and evolving societal values.

- Ensuring that content moderation policies do not stifle free speech or create a chilling effect on legitimate expression is a critical concern.

- Ensuring a fair and consistent enforcement mechanism across platforms is essential to avoid discriminatory practices.

- Scalability and resource allocation for large platforms to implement these measures efficiently and effectively can be a considerable challenge.

Legal Challenges and Disputes

The amendment’s ambiguities and broad language could invite legal challenges. The potential for disputes over the interpretation of “harmful content” and the appropriate level of platform responsibility is significant. Jurisdictional conflicts and varying legal standards across countries could create additional complexity.

- Existing legal precedents and doctrines regarding free speech and the First Amendment could be challenged and reinterpreted.

- The amendment’s potential to affect the balance of power between platforms and users, and the potential for abuse by either party, needs careful consideration.

- The lack of clarity regarding the responsibility of platforms in handling third-party content could lead to litigation, impacting platform operations and user experiences.

- Differences in interpretations of the amendment across different jurisdictions could lead to conflicting legal outcomes, hindering a uniform application of the law.

Opportunities for Improved Online Safety and Accountability

The amendment presents an opportunity to foster a safer online environment while maintaining the benefits of free expression. This includes holding platforms accountable for harmful content while ensuring that the content moderation process respects freedom of speech and expression.

- A greater focus on user reporting mechanisms and platform response times to user concerns can foster greater accountability.

- Clearer guidelines for content moderation practices can help platforms maintain balance and consistency in their responses.

- Mechanisms for user appeals and recourse in cases of perceived unfair or inappropriate content moderation decisions can improve user experience.

Opportunities for Platform Innovation and User Engagement

The amendment offers potential for platform innovation in areas such as content moderation and user engagement. This could result in more engaging and effective online experiences.

- Platforms can develop innovative algorithms and tools for automated content moderation, reducing the workload on human moderators while maintaining accuracy.

- New models for collaborative content moderation involving users, moderators, and potentially AI can lead to more effective and user-centric solutions.

- Platforms can explore ways to provide users with more control over their online experiences and empower them to report inappropriate content.

- Increased transparency in content moderation practices could foster greater trust between platforms and users.

Structure of a Table for Different Types of Impacts

This section delves into the potential effects of the Earn It Act Section 230 amendment on various online platforms. Analyzing these impacts across different platform types is crucial to understanding the nuanced ramifications of this proposed legislation. By categorizing potential positive and negative consequences, and proposing mitigation strategies, we can gain a clearer picture of how this amendment might reshape the digital landscape.

Impact Comparison Across Platform Types

Understanding the potential impacts of the amendment requires considering how different types of online platforms might be affected. This table outlines a comparison of potential positive and negative consequences, along with possible mitigation strategies, for various online platform types.

| Platform Type | Potential Positive Impacts | Potential Negative Impacts | Potential Mitigation Strategies |
|---|---|---|---|
| Social Media | Increased user trust and safety, reduced spread of misinformation, and enhanced accountability of platforms. | Potential for reduced user engagement due to stricter content moderation policies, censorship concerns, and a chilling effect on free expression. Platforms may be forced to invest heavily in new moderation tools and procedures. | Development of clear content moderation guidelines, transparency in moderation practices, and establishment of independent review mechanisms. Platforms should also focus on user education and engagement strategies to offset potential declines in participation. |
| News Aggregators | Greater transparency in content sourcing and verification, increased accountability for spreading false or misleading information, and improved user confidence in the accuracy of news presented. | Potential for biased content moderation policies, challenges in maintaining a diverse range of news sources, and possible limitations on user access to different perspectives. A reduction in the volume of news available to users is another potential negative. | Establishment of clear standards for verifying news sources, implementing algorithms to flag potentially misleading content, and promoting diverse viewpoints. Platforms could also provide resources for users to independently evaluate news articles and fact-check information. |
| Forums and Discussion Boards | Enhanced community safety, reduction in harassment and harmful content, and improved ability for users to engage in productive discussions. | Potential for decreased user participation due to stricter rules on acceptable behavior, concerns about stifling dissenting opinions, and increased difficulty in fostering open and honest dialogue. Moderation might become more time-consuming and costly for platforms. | Development of user-friendly reporting mechanisms, clear community guidelines, and a focus on fostering respectful dialogue. Transparency in moderation decisions and independent review procedures are essential. |
| E-commerce Platforms | Increased consumer confidence and trust in product information, reduction in fraudulent activity, and enhanced consumer protection. | Potential for increased costs associated with verifying product information, difficulties in maintaining a broad selection of products, and possible challenges in balancing freedom of speech with the need to protect consumers. | Establishment of clear guidelines for product listings and reviews, development of tools to detect and prevent fraudulent activity, and clear policies for addressing user complaints. Implementing robust verification processes for sellers and products could also help. |

Illustrative Examples of Platform Adaptation

The Earn It Act Section 230 amendment reintroduction necessitates significant adjustments for online platforms. This adaptation involves a shift from a largely liability-free environment to one where platforms bear a degree of responsibility for the content hosted on their sites. Platforms must now proactively identify and mitigate harmful content, a paradigm shift that will demand substantial changes in their operations.

Platform A: A Social Media Platform

Social media platforms are particularly vulnerable to the ramifications of the new amendment. To comply, Platform A will implement a multi-faceted approach to content moderation. This involves a combination of automated tools and human review processes.

- Automated Content Filtering: Sophisticated algorithms will be trained to identify potentially harmful content based on keywords, sentiment analysis, and image recognition. This will involve continuously updating the algorithm’s training data to account for emerging trends and variations in language and presentation. The platform will implement a system to flag potentially problematic content, allowing human moderators to review the flagged items.

- Enhanced Human Review Process: A dedicated team of human moderators will review flagged content, ensuring accuracy and fairness in the moderation process. These moderators will undergo rigorous training to identify various types of harmful content and understand the nuances of the amendment’s requirements. Training will include specific guidance on identifying hate speech, harassment, and misinformation.

- Transparency and Reporting Mechanisms: The platform will create a transparent system for users to report potentially harmful content and provide detailed explanations of the platform’s content moderation policies. Clear reporting mechanisms will allow users to report specific violations and provide supporting information for review. This will allow for a more efficient and targeted moderation process.
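
The flag-then-review flow described in this list can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not an actual platform implementation: the `FLAG_TERMS` watchlist, the `Post` and `ReviewQueue` names, and the report threshold of 3 are all hypothetical, and plain keyword matching stands in for the sentiment analysis and image recognition a real system would rely on.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical watchlist; a real system would use trained classifiers.
FLAG_TERMS = {"scam", "threat"}


@dataclass
class Post:
    post_id: int
    text: str
    user_reports: int = 0  # number of user reports received so far


@dataclass
class ReviewQueue:
    pending: deque = field(default_factory=deque)

    def triage(self, post: Post, report_threshold: int = 3) -> bool:
        """Queue the post for human review if automation or users flag it."""
        words = {w.strip(".,!?").lower() for w in post.text.split()}
        auto_flag = bool(words & FLAG_TERMS)
        user_flag = post.user_reports >= report_threshold
        if auto_flag or user_flag:
            self.pending.append(post)
            return True
        return False

    def next_for_review(self) -> Optional[Post]:
        """Hand the oldest flagged post to a human moderator, if any."""
        return self.pending.popleft() if self.pending else None


queue = ReviewQueue()
queue.triage(Post(1, "This is a scam!"))             # flagged by keyword
queue.triage(Post(2, "Nice photo"))                  # not flagged
queue.triage(Post(3, "Nice photo", user_reports=5))  # flagged by reports
```

In this sketch automation only queues content; the decision to remove it stays with the human moderator who pulls items from `next_for_review`.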

Impact on User Experience

The changes to Platform A’s content moderation policies may lead to a decrease in the visibility of certain content, especially content that is controversial or potentially harmful. However, this reduction in visibility is intended to create a safer environment for all users. The platform will continue to prioritize user-generated content while working to prevent the proliferation of harmful material.

Adjustments to Platform Algorithms

Platform A’s algorithms will be adjusted to prioritize content that aligns with the principles of the new amendment. This includes modifications to ranking algorithms, which may result in a shift in content visibility. Examples of adjustments include:

- Content Ranking Adjustments: Algorithms will consider factors like the presence of harmful content or hate speech when ranking posts. Content flagged by users or flagged through automated systems will be prioritized for review. This may lead to a temporary reduction in the visibility of content that does not adhere to the new amendment’s standards.

- Content Filtering Improvements: Algorithms will be trained to identify more subtle forms of harmful content, such as hate speech disguised as humor or misinformation presented as opinion. This may necessitate adjustments to the algorithm’s parameters, leading to a more comprehensive filtering process.
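
One way to picture the ranking adjustment is as a penalty applied to a post’s baseline score. The sketch below is illustrative only: `harm_score` is assumed to come from some upstream classifier, and the `harm_weight` and `flag_penalty` values are arbitrary placeholders rather than anything the amendment or any platform specifies.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RankedPost:
    post_id: int
    engagement: float      # baseline ranking signal
    harm_score: float      # 0.0 to 1.0, from a hypothetical classifier
    flagged: bool = False  # set by user reports or automated systems


def adjusted_score(post: RankedPost,
                   harm_weight: float = 0.8,
                   flag_penalty: float = 0.5) -> float:
    """Scale engagement down by estimated harm, and again while flagged."""
    score = post.engagement * (1.0 - harm_weight * post.harm_score)
    if post.flagged:
        # Temporary demotion while the post waits for review.
        score *= flag_penalty
    return score


def rank(posts: List[RankedPost]) -> List[RankedPost]:
    """Order posts from highest to lowest adjusted score."""
    return sorted(posts, key=adjusted_score, reverse=True)


feed = rank([
    RankedPost(1, engagement=100.0, harm_score=0.9),
    RankedPost(2, engagement=60.0, harm_score=0.0),
    RankedPost(3, engagement=80.0, harm_score=0.0, flagged=True),
])
```

Because the flag penalty is a multiplier rather than a removal, clearing the flag after review restores the post’s original position, which fits the temporary reduction in visibility described above.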

Platform B: An E-commerce Platform

E-commerce platforms face unique challenges in adapting to the new amendment, particularly concerning the sale of goods that may be used for harmful purposes. Platform B will adopt a comprehensive approach to comply with the new requirements.

- Product Listing Scrutiny: A review process for product listings will be implemented. This involves human reviewers assessing listings for potential violations. The platform will develop a system to flag products that might be associated with harm, such as weapons or items that facilitate hate crimes. These products will be subject to further review and may be removed from the platform.

- Supplier Verification: Platform B will implement stringent verification procedures for suppliers. This includes background checks, compliance audits, and an assessment of the suppliers’ adherence to the principles of the new amendment. Suppliers who fail to meet these standards will be removed from the platform.

- Customer Reviews and Feedback: A more proactive approach to customer reviews and feedback will be implemented. The platform will encourage users to report any concerns about the use of products for harmful purposes, allowing for further investigation.
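
The listing and supplier checks above could be combined into a single screening step. The following Python sketch assumes a hypothetical `RESTRICTED_CATEGORIES` set and made-up `Supplier` and `Listing` types; the actual checks a platform would need depend on the final text of the amendment and are not specified in this article.

```python
from dataclasses import dataclass

# Hypothetical restricted categories, for illustration only.
RESTRICTED_CATEGORIES = {"weapons"}


@dataclass
class Supplier:
    name: str
    background_check_passed: bool
    audit_passed: bool

    @property
    def verified(self) -> bool:
        """A supplier counts as verified only if every check passed."""
        return self.background_check_passed and self.audit_passed


@dataclass
class Listing:
    title: str
    category: str
    supplier: Supplier


def screen_listing(listing: Listing) -> str:
    """Return 'reject', 'human_review', or 'publish' for a listing."""
    if not listing.supplier.verified:
        return "reject"
    if listing.category.lower() in RESTRICTED_CATEGORIES:
        return "human_review"
    return "publish"


trusted = Supplier("Acme", background_check_passed=True, audit_passed=True)
decision = screen_listing(Listing("Desk lamp", "Home", trusted))
```

Checking the supplier first means listings from unverified sellers never reach the human review stage, which keeps the reviewers’ workload focused on borderline products.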

Legal Arguments For and Against the Amendment

This section delves into the multifaceted legal arguments surrounding the reintroduced Section 230 amendment. Analyzing both sides’ perspectives is crucial for understanding the potential impact on online platforms and user behavior. The arguments often hinge on differing interpretations of free speech principles, the balance between platform responsibility and individual expression, and the practical implications for online content moderation. The amendment’s implications for online speech and the role of platforms are a subject of significant debate.

This scrutiny arises from concerns about the amendment’s impact on the current regulatory framework and its possible effect on online speech and the responsibilities of online platforms. The discussion often centers around the potential chilling effect on free speech, the need for platform accountability, and the practical challenges of implementing the amendment.

Arguments For the Amendment

The proponents of the amendment posit that Section 230, in its current form, grants excessive immunity to online platforms, hindering their accountability for harmful content. They argue that this immunity allows platforms to act as unregulated publishers, potentially exacerbating online harassment, misinformation, and hate speech.

“Current Section 230 protections shield platforms from liability for user-generated content, even when that content is harmful or illegal. This amendment aims to address this imbalance by holding platforms more accountable.”

- Platform Responsibility: Proponents emphasize the need for platforms to take greater responsibility for the content hosted on their sites. They believe that platforms should be held liable for content that is demonstrably harmful, or that the platform actively facilitates illegal activities.

- Combating Harmful Content: Arguments frequently cite the rise of harmful content online, including instances of harassment, misinformation, and hate speech. Proponents suggest that Section 230’s current form impedes the ability to effectively combat these issues.

- Protecting Vulnerable Users: The argument frequently highlights the potential for harm to vulnerable groups, emphasizing the importance of platforms taking active steps to mitigate such harms. They assert that current Section 230 protections are inadequate in this context.

Arguments Against the Amendment

Opponents of the amendment express concerns that the proposed changes could stifle free speech online. They argue that increased liability for platforms could lead to a chilling effect, where platforms censor or remove content to avoid legal repercussions. They also contend that determining liability for user-generated content is a complex and difficult task, potentially leading to inconsistent and unfair outcomes.

“A significant concern is that the amendment may lead to overreach and stifle free expression online by imposing burdensome and unpredictable liabilities on platforms.”

- Chilling Effect on Speech: A primary argument against the amendment is the potential for a “chilling effect” on online speech. Platforms, fearing liability, may be more inclined to censor or remove content, even if it’s not harmful or illegal.

- Burden on Platforms: Opponents highlight the practical difficulties and substantial burdens imposed on platforms to monitor and moderate content. They contend that platforms are already struggling to keep up with the volume of content, and additional responsibilities would be unsustainable.

- Unclear Standards: The amendment’s proposed standards for liability are criticized for being vague and open to interpretation, leading to inconsistent application and potentially unfair outcomes. They argue that determining harmful content and platform responsibility can be challenging.

Concluding Thoughts

In conclusion, the reintroduction of the Earn It Act Section 230 amendment signifies a pivotal moment for online platforms and users. The proposed changes, while promising improved online safety and accountability, also raise important questions about freedom of speech and platform innovation. The potential challenges and opportunities surrounding this amendment are significant and warrant thorough consideration by all stakeholders.