Claims that Twitter shadow bans Trump and conservative accounts in search results raise serious questions about the platform’s impartiality and its potential impact on political discourse. The alleged practice involves suppressing certain accounts or content from search results without a clear explanation, which could reduce the visibility of conservative viewpoints and affect the information users see. We delve into the mechanics, potential motivations, and real-world implications of this practice.

This investigation examines the potential for Twitter to manipulate search results, focusing on the impact on Trump and conservative accounts. We’ll explore various perspectives on this issue, analyzing the algorithms used, potential strategies employed, and the possible consequences for both the platform and the broader political landscape.

Understanding the Phenomenon

A “shadow ban” on Twitter is a suspected, but often unacknowledged, restriction on the visibility of specific accounts or content. It manifests as reduced reach and engagement, often without the user receiving an explicit notification or explanation. This clandestine nature makes it a challenging issue to analyze and prove definitively. Users affected by shadow bans might see their tweets reach fewer people or draw less engagement from others.

The potential impact of a shadow ban on search results is significant. If a user or account is shadow-banned, their content might not appear in relevant search results. This can severely limit their ability to connect with potential followers, engage in discussions, and spread their message. This has profound implications, especially for public figures or organizations relying on Twitter for communication and outreach.

Motivations Behind Potential Shadow Bans

Twitter’s algorithms, designed to surface relevant content, play a crucial role in determining visibility on the platform. These algorithms assess various factors, including engagement metrics, account activity, and user interactions. A key element is the identification of potentially harmful or violating content. This process, however, is often opaque and can lead to subjective interpretations and perceived bias.

Perspectives on Potential Shadow Bans of Trump and Conservative Accounts

Multiple perspectives exist regarding potential shadow banning of Trump and conservative accounts. Some argue that these actions are a result of Twitter’s attempts to maintain a balanced and safe environment. Others believe that there are political motivations behind these actions, aiming to limit the spread of certain viewpoints. Concerns about censorship and freedom of speech are often raised in such contexts.

Mechanisms of Shadow Bans

Twitter employs several mechanisms to potentially implement shadow bans. These mechanisms are not always publicly disclosed, and may include:

- Reduced visibility in search results: Content from accounts subject to shadow bans might appear lower in search results or not at all. This effectively diminishes the reach and impact of the content. For instance, a tweet by a prominent conservative figure might not appear on the first page of a search related to their views.

- Algorithm adjustments: Changes to the Twitter algorithm can influence the ranking of posts. These modifications could selectively reduce the visibility of content from specific accounts or types of accounts. An example would be a change in the algorithm that prioritizes tweets with a higher number of likes, potentially downranking those from accounts with fewer interactions.

- Content filtering: Content deemed inappropriate or harmful might be filtered from users’ feeds, thereby limiting exposure. This is a mechanism that Twitter uses to prevent the spread of misinformation or hateful content. The application of this filter can be applied differently to various accounts or viewpoints.

- Engagement restrictions: Limited engagement, such as fewer likes, retweets, and replies, might indicate a shadow ban. This reduced interaction could be a subtle indicator of restrictions put in place by the platform. For example, a tweet might get fewer likes than anticipated, without an obvious reason.
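
To make the ranking mechanisms in the list above concrete, here is a minimal sketch of how a per-account visibility multiplier could reorder results that are otherwise sorted by likes. It is a toy model, not Twitter’s actual ranking code: the `Tweet` class, the `visibility` field, the `rank` function, and all numbers are assumptions invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Tweet:
    author: str
    likes: int
    # Hypothetical per-account multiplier; 1.0 means no restriction.
    visibility: float = 1.0


def rank(tweets: list[Tweet]) -> list[str]:
    """Order authors by a toy score: likes scaled by the visibility multiplier."""
    ordered = sorted(tweets, key=lambda t: t.likes * t.visibility, reverse=True)
    return [t.author for t in ordered]


tweets = [
    Tweet("account_a", likes=900),
    Tweet("account_b", likes=1200),
    Tweet("account_c", likes=400),
]
baseline = rank(tweets)

# Apply a hypothetical restriction to account_b only, then rank again.
tweets[1].visibility = 0.25
restricted = rank(tweets)

print(baseline)
print(restricted)
```

In this toy model the restricted account keeps all of its likes and none of its content is removed, yet it moves from first to last place. The same sketch also shows why a drop in ranking alone is weak evidence: lowering `likes` instead of `visibility` would produce the same reordering.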

Role of Algorithms in Determining Visibility

Twitter’s algorithms are complex and multifaceted, employing numerous factors to determine content visibility. These factors often include engagement metrics, user interactions, and the content itself. The application of these criteria is often not transparent to users, creating uncertainty about the reasons behind certain content visibility patterns. The application of these algorithms can sometimes lead to the perception of bias or unfairness.

Trump and Conservative Accounts

The recent surge in speculation surrounding shadow bans on social media platforms has intensified the scrutiny surrounding online censorship. This heightened awareness extends beyond general user experiences to include specific demographics and political viewpoints. This analysis focuses on the potential targeting of accounts associated with President Trump and the broader conservative movement. Understanding the potential patterns and motivations behind these actions is crucial for evaluating the fairness and transparency of online platforms.

The possibility of targeted shadow bans on Trump and conservative accounts raises concerns about potential bias and manipulation of information flow. Platforms may be influenced by various factors, including public pressure, political considerations, and algorithm adjustments. The goal here is to investigate potential patterns and provide insights into the potential strategies used to identify and target these accounts.

Common Themes and Characteristics

Trump and conservative accounts often share common themes and characteristics that might be used to identify them by algorithms. These themes frequently include a focus on political issues, particularly those relating to the former President, and often involve strong opinions and critical commentary, which are hallmarks of their online presence. The use of specific keywords, phrases, or hashtags associated with the conservative movement and Trump’s administration could also be factors.

Furthermore, the volume of interactions, such as retweets, likes, and comments, can also play a role in identifying patterns and potentially targeted accounts.

Potential Strategies for Identifying Targeted Accounts

Algorithms may use various strategies to identify and potentially target Trump and conservative accounts for shadow banning. These strategies might involve:

- Keyword filtering: Identifying posts containing specific keywords or phrases associated with the conservative movement or President Trump. The frequency and context of these keywords within a post or account may be a critical factor.

- Interaction analysis: Evaluating the volume and nature of interactions, such as likes, retweets, and comments, to detect patterns of engagement that deviate from typical behavior. A significant increase or decrease in interaction might be a sign.

- Network analysis: Examining the connections and relationships between accounts to identify clusters or groups with similar political viewpoints. This could potentially reveal networks deemed problematic by the platform.

- Sentiment analysis: Determining the emotional tone or sentiment expressed in posts to identify accounts that frequently express negative or critical opinions on specific topics or individuals.
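
As a rough illustration of the first strategy in the list above, keyword filtering can be reduced to counting how often watch-list terms appear in a post. This is a minimal sketch under stated assumptions: the `WATCHLIST` terms and the `keyword_hits` and `keyword_density` functions are hypothetical, and nothing here is known to reflect how any platform actually classifies accounts.

```python
import re

# Hypothetical watch-list; real systems, if they exist, are not public.
WATCHLIST = {"election", "border", "tariffs"}


def tokenize(text: str) -> list:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def keyword_hits(text: str, watchlist=WATCHLIST) -> int:
    """Count tokens in the text that appear in the watch-list."""
    return sum(1 for word in tokenize(text) if word in watchlist)


def keyword_density(text: str, watchlist=WATCHLIST) -> float:
    """Return watch-list hits as a fraction of all tokens (0.0 for empty text)."""
    words = tokenize(text)
    if not words:
        return 0.0
    return keyword_hits(text, watchlist) / len(words)


sample = "The election and the border: election night"
print(keyword_hits(sample), round(keyword_density(sample), 3))
```

A frequency count like this ignores context entirely, which is one reason keyword-based approaches are prone to false positives: a post criticizing a viewpoint and a post endorsing it score the same.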

Comparison and Contrast of Treatment

A crucial aspect is the comparison of how Trump and conservative accounts are treated versus other accounts. A thorough examination of interaction metrics, visibility, and algorithmic responses across different user groups is essential. If a pattern of disproportionate treatment is found, it raises serious questions about fairness and impartiality. Data transparency and independent verification are vital to assess potential bias in platform algorithms.
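
One way to test for disproportionate treatment is a permutation test on a visibility metric for two groups of accounts. The sketch below assumes you already have comparable measurements (for example, impressions per post) for each group; the `permutation_p_value` function and the sample figures are made up for illustration and are not real platform data.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_iter=2000, seed=0):
    """Estimate how often a random relabeling of accounts produces a gap in
    group means at least as large as the observed one."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    k = len(group_a)
    extreme = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:k]) - mean(pooled[k:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_iter + 1)


# Made-up impressions-per-post figures for two hypothetical account groups.
group_a = [100, 110, 95, 105, 98, 102]
group_b = [40, 35, 50, 45, 38, 42]
print(permutation_p_value(group_a, group_b))
```

A small p-value only says the gap is unlikely to come from random labeling. It does not identify the cause, so the groups still need to be matched on follower count, posting frequency, and topic before drawing conclusions about bias.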

Prominent Trump and Conservative Figures Potentially Affected

- Donald Trump

- Various prominent conservative influencers on social media

- News outlets associated with the conservative viewpoint

- Government officials and political figures known for conservative stances

Timeline of Events Potentially Linked to Shadow Bans

- 2020 Presidential Election Cycle: A potential period for increased monitoring and analysis of accounts with political views.

- Post-Election Period: Accounts exhibiting criticism or dissent regarding the election results may be a target of algorithm adjustments.

- Recent Controversies: Events such as statements or actions by prominent figures may lead to shifts in platform algorithms.

Impact on Search Results

A shadow ban, while not explicitly removing content, can significantly impact the visibility of certain accounts or posts in search results. This subtle suppression can effectively diminish the reach of targeted content, potentially influencing public perception and discussion on particular topics. This is especially concerning for topics that are already politically charged.

The impact of a shadow ban on search results is multifaceted. It affects not only the organic visibility of accounts but also the likelihood of those accounts appearing in search results for relevant terms. This can lead to a distorted view of the availability and prevalence of information. It can also affect the distribution of differing perspectives and hinder the free flow of information, potentially stifling open debate.

How Shadow Bans Affect Search Result Visibility

Shadow banning can reduce the visibility of specific accounts and posts in search results for relevant terms. This means that even if the content isn’t deleted, it may not appear as prominently in response to a user’s query. Users searching for information related to Trump and conservative viewpoints may not encounter content from these accounts as frequently. This can effectively limit the range of perspectives available to the searcher.

The algorithm, possibly influenced by internal biases, might prioritize other results, leading to a skewed view of the available information.

Hypothetical Scenario

Imagine a user searches for “Trump’s economic policies.” Without a shadow ban, several accounts associated with conservative viewpoints might appear in the top search results, along with news articles and analyses from various sources. However, if these accounts are shadow banned, their content may appear lower down in the search results, potentially pushed down to pages 2 or 3.

News outlets or accounts sympathetic to the opposing viewpoint might appear more prominently.

Search Result Differences Before and After Shadow Ban

| Search Term | Before Shadow Ban (Example Results) | After Shadow Ban (Example Results) |
|---|---|---|
| Trump’s economic policies | Conservative think tank articles, tweets by Trump supporters, articles from Fox News | Mainstream news articles, analysis from economists not associated with conservative viewpoints, tweets by political commentators |
| Conservative views on immigration | Posts from conservative commentators, articles from conservative publications, videos from conservative speakers | Academic articles on immigration policy, news articles from various outlets with differing opinions, commentary from non-conservative voices |
| Trump’s foreign policy | Articles from conservative news outlets, tweets from Trump supporters, videos of Trump speeches | Articles from major news outlets, commentary from foreign policy experts, reports from international organizations |

This table illustrates a potential difference in the search results. The “Before” column shows the variety of results, including those associated with conservative views, while the “After” column shows a potential shift towards a less diverse range of sources. This difference is not a complete suppression, but a subtle bias that could significantly influence a user’s perception of the available information.

Potential Consequences

The shadow banning of Trump and conservative accounts on Twitter raises significant concerns about the platform’s impartiality and its potential impact on the democratic process. This practice, if widespread, could stifle diverse viewpoints and create an uneven playing field for political discourse, potentially leading to a less informed public. The consequences extend beyond the immediate impact on specific users, affecting the overall health of online political engagement.

The limited visibility of certain voices in search results can have a profound effect on public perception. If a significant segment of the population is effectively silenced or marginalized online, it can distort the public narrative and influence public opinion in ways that aren’t transparent or easily verifiable. This could potentially lead to a loss of trust in the platform and its ability to serve as a neutral space for public discussion.

Effects on Public Discourse and Political Engagement

The suppression of certain viewpoints can lead to a homogenization of online discussions, making it difficult for alternative perspectives to gain traction. This lack of diverse viewpoints can hinder constructive debate and the free exchange of ideas, which are fundamental to a healthy democracy. When certain voices are silenced or marginalized, it can lead to a chilling effect on political engagement, as individuals may be hesitant to express their opinions if they fear censorship or suppression.

Consequences of Limited Visibility on Twitter Search Results

Reduced visibility in Twitter search results can significantly impact the reach and influence of specific accounts. This can limit the ability of these accounts to engage with the public, share information, and build support for their viewpoints. The result is a narrowed range of perspectives presented in search results, which could shape public perception and limit access to critical information.

Potential Consequences for the Political Landscape

The differential visibility of political viewpoints on Twitter can have substantial effects on the political landscape. It can exacerbate existing political divisions and create an environment where certain viewpoints are amplified while others are marginalized. This could result in a skewed representation of public opinion and potentially influence election outcomes. Historical examples demonstrate how control over information dissemination can shift public sentiment.

Examples of Similar Actions in Other Contexts

Historical precedents exist where censorship or restrictions on information dissemination have had detrimental effects on public opinion and social stability. The suppression of dissenting voices in authoritarian regimes often leads to a climate of fear and mistrust, while the manipulation of information during wartime can influence public support for a particular cause.

Potential Repercussions for Twitter’s Platform Credibility and Trustworthiness

The perception of bias or manipulation in search results can damage Twitter’s credibility and trustworthiness as a platform for public discourse. If users perceive the platform as favoring certain viewpoints, it can lead to a loss of trust in the platform’s impartiality and ability to provide a fair and open forum for diverse opinions. This erosion of trust can have long-term implications for the platform’s user base and its future.

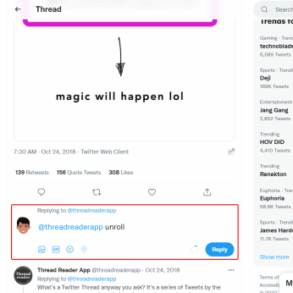

Methods for Determining Shadow Bans

Uncovering a shadow ban, a subtle form of censorship on social media platforms, requires a keen eye and a methodical approach. It’s not always a straightforward process, as platforms don’t explicitly announce these actions. Identifying patterns and analyzing changes in visibility are key to detecting this often-hidden suppression. This section dives into the practical methods for determining if your account or content is subject to a shadow ban.

Potential Indicators of Shadow Banning in Search Results

Determining if search results are manipulated due to a shadow ban involves observing significant changes in visibility and reach. One common indicator is a sudden drop in the visibility of your content in search results, even when using keywords associated with your work. This decrease can be gradual or abrupt, making it crucial to track search results over time.

Another sign is the reduced number of impressions, clicks, or engagements on your posts. This is particularly noticeable when compared to past performance.
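
Comparing against past performance can be made less subjective by measuring how far a recent figure falls from your own baseline. The sketch below uses a simple z-score; the `drop_z_score` function and the impression counts are hypothetical, and the threshold you treat as "unusual" is a judgment call rather than an established standard.

```python
from statistics import mean, stdev


def drop_z_score(history, recent):
    """Return how many standard deviations `recent` sits from the mean of
    `history`. Negative values mean the recent figure is below baseline."""
    if len(history) < 2:
        raise ValueError("need at least two historical values")
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        raise ValueError("history has no variation; z-score is undefined")
    return (recent - mu) / sigma


# Made-up weekly impression counts followed by a much lower recent week.
history = [1000, 1100, 900, 1050, 950]
z = drop_z_score(history, recent=300)
print(round(z, 2))
```

A strongly negative score flags a change worth investigating, but it cannot distinguish a shadow ban from ordinary causes such as a slow news week, a change in posting schedule, or seasonal dips.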

Analyzing Search Results to Assess Shadow Banning

A critical step in assessing potential shadow bans is to meticulously track your account’s visibility in search results. This involves regularly searching for terms relevant to your content and noting the position and prominence of your posts in the search results. Tools for tracking ranking are available, and these should be utilized to compare current rankings with previous performance.

Furthermore, comparing your search result rankings with those of similar accounts is helpful. This comparative analysis can highlight significant differences that suggest manipulation.

Comparing Different Methods of Detecting Shadow Bans

Several methods can be used to detect shadow bans, each with its own strengths and weaknesses. Manual monitoring of search results is a basic approach but can be time-consuming. Utilizing third-party tools for tracking rankings offers a more systematic and detailed analysis, allowing for comparison and trend identification. Using analytics dashboards provided by social media platforms can also provide valuable insights into changes in visibility.

The best approach often combines these methods for a comprehensive understanding of the situation.

Table of Indicators of Shadow Bans

This table outlines various indicators that might suggest a shadow ban, highlighting potential changes in visibility and reach.

| Indicator | Description |
|---|---|
| Sudden drop in search result rankings | A significant decrease in the position of your content in search results for relevant keywords. |
| Reduced impressions and clicks | A noticeable decline in the number of times your posts are displayed and the number of clicks received. |
| Decreased engagement on posts | A reduction in likes, comments, shares, or other forms of interaction with your content. |
| Content appearing lower or missing in search results | Your content might not be appearing in search results, even when searching with appropriate keywords. |
| Lower visibility in related communities | Posts that previously appeared prominently in related communities now have reduced visibility or are absent. |

Tracking Changes in Account Visibility

Regularly monitoring your account’s visibility in search results is crucial for detecting any potential shadow ban. This involves consistently searching for keywords related to your content and noting any changes in the ranking or presence of your posts. Maintaining a log of these results, comparing them over time, and analyzing trends is essential. Comparing your search results with those of similar accounts is a vital technique for assessing if a shadow ban is affecting you.

A detailed log will provide evidence of any manipulation.
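
A log of this kind can be as simple as a list of dated observations per search query. The following sketch compares the average position in the earliest and latest observations; the `Observation` class, the `position_shift` function, and the `MISSING_RANK` penalty for posts that do not appear at all are assumptions made for this example, and the positions would have to be recorded by hand or with a tool of your choice.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Optional

# Hypothetical rank assigned when the post is absent from the results.
MISSING_RANK = 100


@dataclass
class Observation:
    day: date
    query: str
    position: Optional[int]  # 1 = top result; None = not found


def average_position(observations):
    """Average rank, counting missing results as MISSING_RANK."""
    return mean(
        o.position if o.position is not None else MISSING_RANK
        for o in observations
    )


def position_shift(log, query, window=3):
    """Latest-window average minus earliest-window average for one query.
    A positive value means the content now appears lower in the results."""
    rows = sorted((o for o in log if o.query == query), key=lambda o: o.day)
    if len(rows) < 2 * window:
        raise ValueError("not enough observations for two windows")
    return average_position(rows[-window:]) - average_position(rows[:window])


# Made-up log entries for a single query.
positions = [2, 3, 1, 20, None, 35]
log = [
    Observation(date(2024, 1, i + 1), "example query", p)
    for i, p in enumerate(positions)
]
print(round(position_shift(log, "example query"), 2))
```

Running the same calculation on logs kept for comparable accounts gives the peer comparison described above: a shift that appears only in your log is more notable than one shared by every account you track.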

Alternative Explanations

Reduced visibility in search results for Trump and conservative accounts isn’t solely attributable to shadow bans. Other factors can significantly influence online presence. These alternative explanations merit consideration alongside the shadow ban theory. Understanding these competing hypotheses is crucial to forming a complete picture of the phenomenon.

A multifaceted approach is necessary to analyze the decrease in search visibility. The mere absence of a direct shadow ban doesn’t necessarily invalidate the existence of such a practice. Other factors, often subtle and intertwined, can produce similar outcomes. Delving into these possibilities provides a more comprehensive perspective.

Search Algorithm Updates

Search engine algorithms are constantly evolving, incorporating new ranking factors. These updates can significantly impact the visibility of certain content. Changes in the weighting of factors like recency, relevance, and user engagement can affect search rankings for specific keywords.

Competition

Increased competition for specific keywords can lead to a decrease in visibility. As more content is published around certain search terms, existing content might be pushed further down the results pages. This competitive environment can impact the ranking of any content, regardless of its ideological leaning.

Content Quality and Relevance

The quality and relevance of the content itself play a pivotal role in search results. If content is deemed low-quality, irrelevant, or spammy by the algorithm, it might be ranked lower or excluded entirely from results. This is not necessarily indicative of a shadow ban but rather a reflection of the algorithm’s assessment of content value. Content quality, free of misinformation, is essential for maintaining a high search ranking.

User Engagement and Interaction

Search engines also consider user engagement and interaction with search results. Factors such as click-through rates, time spent on a page, and social shares influence the ranking of web pages. A decline in these metrics can negatively impact the visibility of content, regardless of the content’s ideology. Content that doesn’t resonate with users will see a decline in engagement and interaction.

Geopolitical Events and Trends

Significant geopolitical events or shifts in public interest can impact search results. If a particular topic becomes less relevant or trending, the search results for related content will also experience a decrease in visibility. Search trends shift based on current events and public discourse.

Data Privacy Regulations and Policies

Data privacy regulations, particularly those affecting the collection and use of user data, can influence search results. Changes in these regulations may affect how search engines prioritize and display content. Privacy concerns and data protection regulations can impact the visibility of search results.

Potential Impact of Content Moderation Policies

Content moderation policies can influence visibility by affecting the content deemed suitable for display in search results. Search engines, to maintain a balanced platform, may modify search results based on content moderation policies. Content moderation policies, while intended to maintain platform integrity, can indirectly influence visibility.

Illustrative Examples

Shadow banning on Twitter, especially concerning conservative accounts and those associated with Donald Trump, raises concerns about the platform’s fairness and transparency. This manipulation of search results can significantly impact the visibility of these voices, potentially affecting public discourse and the dissemination of information. Understanding how this might manifest is crucial to evaluating the potential harm.

Search results manipulation, when used strategically, can be an effective tool to control the flow of information and limit exposure to certain viewpoints. This can have a substantial impact on individuals and communities. This section will provide a concrete example of how such a manipulation might appear on Twitter, focusing on a search query related to Trump and conservative viewpoints.

Example Search Query and Altered Results

A user searches for “Trump conservative policies.” This is a fairly common and often politically charged query.

Initial Search Results:

A wide range of articles, news pieces, and social media posts are displayed, featuring various perspectives on Trump’s policies, from supportive articles to those critical of them. The results are diverse, presenting a mix of opinions.

Altered Search Results (After Shadow Ban):

A similar number of results are presented, but the supportive articles and posts are significantly fewer or downranked, and critical or opposing viewpoints are more prominent. The presentation prioritizes results that challenge or critique Trump’s conservative policies.

The alteration in search results subtly, but significantly, shifts the narrative presented to the user. The initial search offered a balanced representation, but the altered results present a skewed perspective, potentially influencing the user’s understanding of the topic.

Impact on Potential Users

The change in search results can significantly affect users’ access to information. By prioritizing certain viewpoints, Twitter’s algorithm, consciously or unconsciously, can limit exposure to differing opinions. This can lead to echo chambers, where users are primarily exposed to information that reinforces their existing beliefs, potentially hindering a comprehensive understanding of the topic. The user might only see viewpoints critical of Trump and conservative policies, without encountering balanced perspectives.

This can influence opinions, and potentially, future actions, based on limited information. The platform’s role in shaping the user’s understanding of the topic is undeniable.

Conclusion

The potential for Twitter shadow bans to manipulate search results for Trump and conservative accounts warrants further investigation. This analysis of the topic reveals a complex interplay of algorithms, political motivations, and public discourse. The implications extend beyond the immediate participants, impacting the integrity of online information and potentially distorting public perception.