TikTok restricts accounts that post problematic topics and limits how that content appears in the For You feed. This article explores the reasons behind account restrictions on TikTok, the types of content that get flagged, the impact on users, and the moderation techniques the platform employs. We’ll compare TikTok’s approach to that of other major social media sites and consider potential solutions to user concerns.

TikTok’s content moderation system aims to maintain a safe and positive environment for its users. However, the process isn’t without its complexities. This article investigates the potential for bias and subjectivity in content moderation, and examines the diverse experiences of users facing restrictions. It’s a critical look at how restrictions impact the user experience, potentially influencing content discovery and user growth.

TikTok Account Restrictions

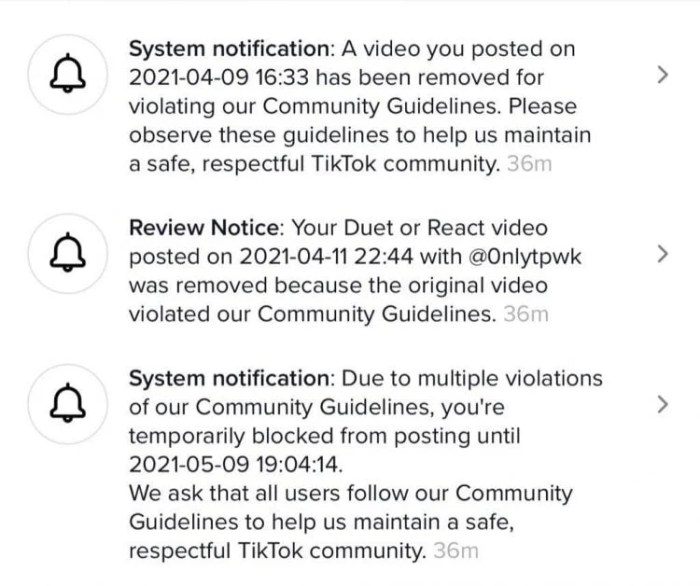

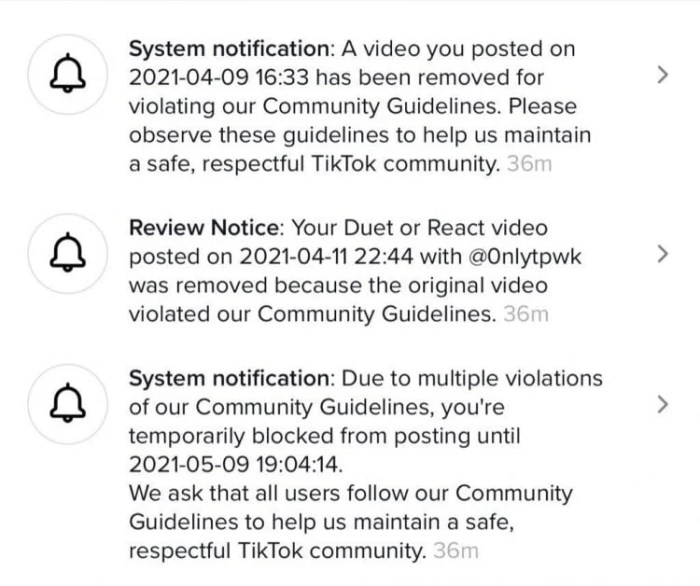

TikTok, a hugely popular short-form video platform, employs account restrictions as a means of maintaining a safe and positive user experience. These restrictions, which may be temporary or permanent, are a response to violations of community guidelines and affect both creators and viewers. The platform’s approach to managing problematic content is a constant balancing act, aiming to deter harmful behavior while allowing for diverse expression.

Account restrictions on TikTok, like those on other social media platforms, are frequently applied to content deemed inappropriate, content that violates community guidelines, or content that infringes on user rights.


These actions can have significant consequences for creators, impacting their ability to engage with their audience and generate income. Understanding the nature of these restrictions and the reasons behind them is crucial for maintaining a productive and safe online presence.

Nature of TikTok Account Restrictions

Account restrictions on TikTok, as on other social media platforms, are a tool to address content that breaches community guidelines. These restrictions vary in severity, from temporary suspensions to permanent bans. They can stem from a multitude of factors, often related to content violations.

Potential Reasons for Account Restrictions Related to Content

Account restrictions on TikTok are frequently implemented for content that violates the platform’s community guidelines. These violations can range from hate speech and harassment to content promoting dangerous activities or misinformation. Copyright infringement, the use of unauthorized material, and inappropriate content (nudity, graphic violence, etc.) can also trigger restrictions. The platform’s aim is to protect its users from harm and maintain a positive environment.

Examples of Content Types Leading to Restrictions

Several types of content can lead to account restrictions. Content that promotes violence, hate speech, or discrimination is frequently flagged, and content that incites hatred or violence against specific groups or individuals is a major concern. Content that is sexually suggestive or that exploits, abuses, or endangers children is strictly prohibited. Content that spreads false or misleading information can also result in restrictions.

Content that violates copyright laws, plagiarizes work, or uses copyrighted material without permission is also a potential cause. Content that is deemed spam or intended to mislead or deceive users is also subject to restrictions.

Impact of Restrictions on Creators and Users

Restrictions can have a significant impact on creators and users. For creators, restrictions can result in lost income, a diminished audience, and damage to their reputation. Users might experience the removal of content they enjoyed or find it difficult to interact with creators. The platform aims to strike a balance between allowing diverse expression and protecting users from harmful content.

Comparison of TikTok’s Approach to Other Social Media Platforms

TikTok’s approach to handling problematic content differs slightly from other social media platforms like Instagram and YouTube. While all aim to maintain a safe environment, the specific criteria for content restrictions and the enforcement mechanisms may vary. For instance, TikTok’s focus on short-form video content might lead to unique challenges in moderation compared to platforms with longer-form content.

Categorization of Restricted Content

| Content Type | Reason for Restriction |
|---|---|
| Hate speech | Violation of community guidelines prohibiting hate speech and discrimination. |
| Harassment | Violation of community guidelines prohibiting harassment and bullying. |
| Dangerous activities | Promotion of dangerous activities or content that could incite harm. |
| Misinformation | Spread of false or misleading information. |
| Copyright infringement | Violation of copyright laws. |
| Inappropriate content | Content that is sexually suggestive, graphic, or exploits, abuses, or endangers children. |

Comparison of Restriction Policies

| Content Type | TikTok | YouTube | |
|---|---|---|---|
| Hate Speech | Prohibited | Prohibited | Prohibited |
| Harassment | Prohibited | Prohibited | Prohibited |
| Misinformation | Prohibited | Prohibited | Prohibited |
| Copyright Infringement | Prohibited | Prohibited | Prohibited |
| Inappropriate Content | Prohibited | Prohibited | Prohibited |

Identifying Problematic Content

TikTok’s content moderation system aims to maintain a safe and positive environment for its users. However, the process of identifying and classifying problematic content is complex and often involves nuanced judgments. This necessitates understanding the criteria TikTok uses, the types of content frequently flagged, and the potential challenges and biases inherent in the moderation process.

The platform’s algorithms and human reviewers work together to flag content that violates community guidelines. These guidelines, while striving for clarity, can be open to interpretation, leading to instances of subjectivity and potential biases. A crucial aspect of understanding problematic content on TikTok involves recognizing the dynamic nature of societal norms and evolving expectations.

Criteria Used to Flag Content as Problematic

TikTok employs a multifaceted approach to identifying problematic content. This includes automated systems that scan content based on keywords, visual patterns, and user reports. Human reviewers play a crucial role in examining flagged content and making judgments based on community guidelines. The guidelines are regularly updated to address emerging issues and concerns.
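The flagging step described above can be illustrated with a minimal sketch. It is not TikTok’s actual system: the `WATCHLIST` terms, the `REPORT_THRESHOLD` value, and the `Post` structure are all hypothetical, and a real pipeline would also use visual analysis and far richer signals. The sketch only shows how keyword matches and user reports could each queue a post for human review.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical watchlist and threshold, chosen only for illustration.
WATCHLIST = {"scam", "fake cure"}
REPORT_THRESHOLD = 3


@dataclass
class Post:
    post_id: str
    caption: str
    report_count: int = 0


def flag_reasons(post: Post) -> List[str]:
    """Return the reasons a post should be queued for human review."""
    reasons = []
    caption = post.caption.lower()
    # Case-insensitive substring match against the watchlist.
    matched = sorted(term for term in WATCHLIST if term in caption)
    if matched:
        reasons.append("keyword match: " + ", ".join(matched))
    # Enough user reports also trigger a review, even without a keyword hit.
    if post.report_count >= REPORT_THRESHOLD:
        reasons.append(f"user reports: {post.report_count}")
    return reasons


posts = [
    Post("a1", "My morning routine"),
    Post("b2", "This FAKE CURE works overnight", report_count=5),
]
for post in posts:
    reasons = flag_reasons(post)
    status = "needs human review" if reasons else "no flag"
    print(post.post_id, status, reasons)
```

Note that the function only flags content; the decision itself is left to a human reviewer, in line with the division of labor described in this section.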

Types of Problematic Content

Several categories of content are frequently flagged as problematic. These include hate speech, harassment, misinformation, and content promoting dangerous or illegal activities. Content that exploits, abuses, or endangers children is also strictly prohibited. The line between acceptable and unacceptable content can be blurry in some cases. The platform continually strives to refine its approach to address these challenges.

Restrictions on certain topics in your feed can be frustrating when you are trying to discover new things, particularly where the safety and accuracy of health information is concerned. Ultimately, these restrictions likely stem from a desire to keep the platform safe and user-friendly, even if it means some content gets filtered out.

Potential Biases and Subjectivity in Content Moderation

The content moderation process is not without potential biases. Cultural differences, varying interpretations of community guidelines, and even the demographics of the review team can all contribute to subjectivity. This means that different users might experience varying responses to similar content. Furthermore, the constant evolution of online trends and slang can make it difficult for algorithms to keep up, leading to instances where content may be misinterpreted.

Potential Challenges in Defining Problematic Content

Defining “problematic” content is challenging due to the constantly evolving nature of online discourse and cultural norms. What is considered offensive or harmful in one community may not be viewed similarly in another. This makes establishing universal criteria a difficult task. Furthermore, the rapid pace of online communication can make it challenging for moderators to keep up with emerging trends and slang.

Comparison and Contrast of Problematic Content Examples

Hate speech, for example, often involves explicit targeting of individuals or groups based on attributes like race, religion, or gender. Harassment, on the other hand, focuses on aggressive or threatening behavior directed at specific individuals. Misinformation typically involves the dissemination of false or misleading information, often with the intent to deceive or cause harm. These examples, while distinct, often overlap in practice, requiring nuanced judgments from moderators.

Categories of Problematic Content

| Category | Common Characteristics |
|---|---|
| Hate Speech | Includes derogatory language, insults, and threats targeting individuals or groups based on protected characteristics. |
| Harassment | Involves aggressive or threatening behavior directed at specific individuals, often through repetitive or escalating actions. |
| Misinformation | Dissemination of false or misleading information, frequently intended to deceive or cause harm, often through manipulation of facts. |
| Content Promoting Dangerous or Illegal Activities | Includes content that encourages or facilitates harmful or unlawful behaviors, ranging from self-harm to illegal activities. |

Varied Responses to Problematic Content

| Type of Content | Potential Responses |
|---|---|
| Hate Speech | Removal of the content, account restrictions, or even permanent account bans. |
| Harassment | Removal of the content, blocking of the perpetrator, and potential account restrictions. |
| Misinformation | Content labeling, warnings, or removal depending on the severity and potential impact. |
| Content Promoting Dangerous or Illegal Activities | Immediate removal, account restrictions, and potential legal action in extreme cases. |
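The idea that responses escalate with severity can be sketched in a few lines. The ladders below loosely follow the table above, but the category keys, the ordering, and the integer severity scale are assumptions made for illustration, not a description of how any platform actually decides.

```python
# Hypothetical escalation ladders, ordered from mildest to most severe.
RESPONSES = {
    "hate_speech": ["remove content", "restrict account", "ban account"],
    "harassment": ["remove content", "restrict account"],
    "misinformation": ["label content", "show warning", "remove content"],
    "dangerous_activity": ["remove content", "restrict account"],
}


def choose_response(category: str, severity: int) -> str:
    """Pick a response for a category; severity starts at 0 (mildest)."""
    ladder = RESPONSES[category]
    # Clamp severity so out-of-range values map to the nearest rung.
    index = max(0, min(severity, len(ladder) - 1))
    return ladder[index]


print(choose_response("misinformation", 0))
print(choose_response("hate_speech", 2))
```

Keeping the ladders in a plain mapping makes the policy easy to read and audit, which matters for the transparency concerns raised later in the article.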

Impact on User Experience

TikTok’s content restrictions, while aiming to maintain a positive environment, can significantly impact user experience. These restrictions, whether temporary or permanent, can alter how users engage with the platform, discover content, and interact with the community. Understanding these effects is crucial for evaluating the overall health and satisfaction of the TikTok user base.

Content restrictions, by their nature, often lead to a reduction in user engagement. When users encounter content that has been flagged or removed, it can disrupt their viewing habits and the flow of their experience. This can lead to a decrease in time spent on the platform, as users may find less engaging content or feel that their interests are not being served. Furthermore, the restrictions themselves can be frustrating and confusing for users, especially if they are unclear about the reasoning behind the removal.

Effect on User Engagement

Restrictions can lead to decreased user engagement due to a lack of relevant content, potentially impacting user satisfaction and overall platform usage. Users might find their feeds less interesting or discover fewer videos that align with their interests. This decrease in engagement could also lead to a decline in the overall number of views, comments, and shares, impacting the platform’s algorithm and further diminishing the discoverability of suitable content.

Impact on Content Discoverability

Content restrictions can significantly impact the discoverability of content, especially if the algorithm deprioritizes content in restricted categories. Users may miss out on relevant and engaging content, leading to a less diverse and personalized experience. This impact can be particularly detrimental to creators whose content falls within the restricted categories, as their reach and visibility may be reduced, potentially affecting their ability to grow their audience and earn income.

Examples of Interaction Limitations

Restrictions can affect users’ ability to interact with content in various ways. For instance, users might be unable to comment on or share specific videos, potentially limiting their ability to express their opinions or engage with the creator. This limitation can create a sense of disconnect or frustration for users, especially if they feel that their interaction is being unfairly curtailed.

Consequences on User Growth

Content restrictions can have negative consequences on user growth. Users might become disillusioned with the platform, leading them to seek out alternative platforms that better suit their needs and interests. This outflow of users can significantly impact the platform’s overall user base and potential for growth, particularly for creators whose content falls within the restricted categories. Furthermore, restricted content can create a negative perception of the platform, discouraging new users from joining.

Comparison of User Reactions

User reactions to content restrictions can vary significantly. Younger users might be more susceptible to frustration due to the limited content options, while older users might be more tolerant, as they may be less reliant on TikTok for daily engagement. Users who heavily rely on the platform for entertainment or information might be more negatively affected compared to those who use it less frequently.

These diverse reactions highlight the importance of considering the various demographics and their experiences with restrictions.

Table: Varying User Experiences

| User Demographic | Potential Experience with Restrictions |
|---|---|
| Younger Users (13-24) | Increased frustration, reduced engagement, search for alternative platforms |
| Middle-Aged Users (25-44) | Potential for decreased engagement, but potentially more tolerant due to alternative entertainment options |
| Older Users (45+) | Potential for decreased engagement, but possibly less reliant on TikTok for daily needs |

Table: Impact of Restrictions Across Demographics

| User Demographic | Impact on Content Consumption | Impact on Content Creation | Impact on User Engagement |
|---|---|---|---|
| Younger Users | Reduced variety in feed, decreased discovery of content, possible frustration | Reduced reach and visibility, potential for decreased income | Lower platform usage, increased likelihood of seeking alternative platforms |
| Middle-Aged Users | Potential for reduced consumption if restrictions target their interests | Potential for reduced reach and visibility, potentially impacted income | Potential for decreased engagement if restrictions affect preferred content |
| Older Users | Limited impact if restrictions don’t affect their preferred content | Limited impact if restrictions don’t affect their preferred content | Limited impact if restrictions don’t affect their preferred content |

Content Moderation Techniques

TikTok’s content moderation is a complex process aimed at maintaining a safe and positive environment for its users. It involves a multifaceted approach combining sophisticated algorithms with human oversight to identify and address potentially harmful or inappropriate content. The platform strives to balance user freedom of expression with the need to protect its community from harmful content.

TikTok’s Content Moderation Strategies

TikTok employs a multi-layered approach to content moderation, integrating various techniques to identify and address problematic content. This strategy involves a combination of automated systems and human review. The platform prioritizes user safety and community well-being.

Identifying and Addressing Problematic Content

TikTok uses a combination of automated systems and human review to identify and address problematic content. The automated systems analyze content based on keywords, image recognition, and user reports, flagging potential violations of community guidelines. Human moderators review flagged content and make final determinations on whether the content violates community standards. The platform constantly refines its algorithms and guidelines to improve its ability to detect and address problematic content.

Role of Human Moderators and AI

Human moderators play a crucial role in content moderation, particularly in nuanced cases where automated systems may struggle. They assess context, intent, and potential harm, ensuring a more comprehensive understanding of the content. AI tools aid human moderators by rapidly identifying and categorizing flagged content, freeing up human resources to focus on more complex issues. The combination of human judgment and AI capabilities allows TikTok to handle a vast volume of content effectively.
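One way to picture how AI tooling could free moderators to focus on harder cases is a priority queue that surfaces the highest-risk items first. The sketch below is an assumption-laden illustration: the `ReviewQueue` class, the `risk_score` values, and the category names are hypothetical, and nothing here reflects TikTok’s internal tooling.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class ReviewItem:
    # Only priority takes part in ordering; the other fields are payload.
    priority: float
    post_id: str = field(compare=False)
    category: str = field(compare=False)


class ReviewQueue:
    """Queue of flagged posts, served to moderators by descending risk."""

    def __init__(self):
        self._heap = []

    def add(self, post_id: str, category: str, risk_score: float) -> None:
        # heapq is a min-heap, so negate the score to pop the riskiest first.
        heapq.heappush(self._heap, ReviewItem(-risk_score, post_id, category))

    def next_for_review(self):
        item = heapq.heappop(self._heap)
        return item.post_id, item.category, -item.priority

    def __len__(self) -> int:
        return len(self._heap)


queue = ReviewQueue()
queue.add("p1", "spam", 0.35)
queue.add("p2", "harassment", 0.92)
queue.add("p3", "misinformation", 0.60)

while len(queue):
    print(queue.next_for_review())
```

In this arrangement the automated score only decides the order of review; the judgment about context and intent still belongs to the human moderator.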

Effectiveness of Current Content Moderation Strategies

TikTok’s content moderation strategies have evolved over time, but their effectiveness remains a subject of ongoing evaluation and refinement. Challenges remain in identifying and addressing harmful content in real time, especially given the rapid evolution of online trends and the creative use of technology.

Comparison of Content Moderation Techniques Across Social Media Platforms

Different social media platforms employ various content moderation techniques. Some rely heavily on automated systems, while others prioritize human oversight. The effectiveness of each approach varies, depending on the platform’s specific community guidelines and the types of content it hosts. Factors like scale, resources, and the nature of the platform’s content significantly influence the techniques utilized.

TikTok’s Content Moderation Methods

| Method | Description |
|---|---|
| Automated Filtering | Algorithms identify potentially problematic content based on predefined keywords. |
| Image Recognition | AI systems analyze images and videos to detect harmful imagery. |
| User Reporting | Users can flag content they believe violates community guidelines. |
| Human Review | Trained moderators review flagged content, considering context and intent. |
| Community Guidelines Enforcement | Content is assessed against established community guidelines. |

Comparison of Social Media Content Moderation Policies

| Platform | Primary Content Moderation Approach | Human Oversight Level | Examples of Problematic Content Targeted |
|---|---|---|---|
| TikTok | Combination of AI and human review | High | Hate speech, harassment, violence, misinformation, self-harm content. |
| | Combination of AI and human review | High | Hate speech, misinformation, violence, incitement to violence, harassment. |
| | Combination of AI and human review | Moderate | Hate speech, harassment, violence, misinformation, impersonation. |
| YouTube | Combination of AI and human review | High | Hate speech, harassment, violence, misinformation, copyright infringement. |

User Perspective on Restrictions

TikTok’s content restriction policies have a significant impact on user experience, and understanding user perspectives is crucial for effective moderation. Users often feel strongly about the content they see and the restrictions imposed. This section delves into how users perceive these restrictions, examining opinions on fairness, feedback examples, and the impact of subjectivity.

User perception of content restrictions is often complex and multifaceted.

Restrictions on certain account topics in your feed can limit exposure to diverse perspectives. This is not only about entertainment; it also affects the flow of information. Overly broad restrictions risk stifling important discussions and hindering a well-rounded feed experience.

Users react to restrictions based on their personal values, the specific content restricted, and the perceived rationale behind the restriction. A variety of factors influence user sentiment, from feeling empowered by a system that addresses harmful content to feeling frustrated by perceived censorship or unfairness.

User Opinions on Fairness of Restrictions

User opinions on the fairness of restrictions vary widely. Some users believe restrictions are necessary to maintain a safe and positive community environment, while others argue that the restrictions are too broad or overly subjective. Their opinions are often tied to specific experiences and the perceived impact of those restrictions on their ability to freely express themselves and engage with content.

Examples of User Feedback about Restrictions

Direct feedback from users reveals a spectrum of reactions. Some users praise TikTok’s efforts to address harmful content, while others express concern about the potential for misuse or misapplication of restriction policies.

- Some users express gratitude for the removal of harmful content, highlighting the importance of platforms addressing inappropriate material.

- Conversely, some users feel that certain restrictions are disproportionate or unwarranted, leading to feelings of censorship or unfair treatment.

- Many users point out the challenges in defining what constitutes “harmful” content, leading to concerns about the subjectivity of the restriction process.

User Concerns Regarding Subjectivity of Restrictions

A recurring concern among users is the subjectivity inherent in content moderation. The lack of clear guidelines and transparent criteria can lead to inconsistent enforcement, creating a sense of uncertainty and potentially unfair treatment. Users often highlight the difficulties in defining and categorizing problematic content without precise standards.

Examples of User Reactions to Content Restrictions

User reactions to content restrictions range from acceptance and understanding to frustration and anger. These reactions are often public and visible on the platform, illustrating the significance of user feedback in shaping content moderation strategies.

- Some users accept restrictions as a necessary measure to protect the platform’s community.

- Others express dissatisfaction with restrictions they deem unfair or overly broad.

- User comments and discussions often highlight the perceived subjectivity of restrictions, leading to concerns about bias and potential discrimination.

User Feedback Regarding Content Restrictions

User feedback on content restrictions is crucial for platforms to improve their policies. This table provides a snapshot of user sentiment and the variety of concerns voiced.

| Category | User Feedback |
|---|---|
| Positive | Appreciates platform’s effort to address harmful content |
| Negative | Feels restrictions are too broad or subjective |
| Neutral | Expresses concern about the subjectivity of restrictions and inconsistent application |

User Complaints Regarding Fairness of Restrictions

User complaints regarding the fairness of restrictions highlight the need for more transparent and consistent content moderation policies. This table summarizes some of the frequent complaints.

| Complaint Type | Example |
|---|---|
| Unfair Targeting | Feeling targeted for content that others haven’t been restricted for |
| Lack of Transparency | Inability to understand the reason behind a restriction |
| Subjectivity in Enforcement | Feeling that restrictions are applied inconsistently |

Potential Solutions

TikTok’s content moderation system, while crucial for maintaining a safe and positive platform, faces challenges in balancing user freedom with community well-being. Improving this system requires a multi-faceted approach, focusing on transparency, consistency, and user experience. This necessitates a comprehensive review of existing policies and procedures, along with a willingness to adapt to evolving societal norms and user expectations.

Addressing user concerns and improving the content moderation process requires a nuanced approach. The current system needs enhancements to avoid overly broad restrictions and ensure fairness in the application of policies. This involves creating a more user-friendly and transparent feedback mechanism, enabling users to understand and challenge restrictions more effectively.

Improving Content Moderation Process

TikTok’s content moderation process can be enhanced through several key improvements. These improvements should focus on preventing problematic content from surfacing in the first place, while also ensuring fairness and accuracy in the enforcement of existing policies. This involves a careful consideration of the various factors involved in content creation, distribution, and user interaction. Robust training for moderators, coupled with ongoing evaluation and feedback mechanisms, is crucial to maintain consistency and prevent bias in decisions.

- Automated Filtering with Enhanced AI: Implementing advanced AI algorithms that can detect and flag problematic content in real time can significantly improve the efficiency of the moderation process. This technology should be constantly refined and updated to address emerging trends in harmful content. For example, AI could be trained to recognize subtle cues and patterns in text and images that indicate potentially problematic behavior, such as hate speech or cyberbullying. This would help to identify and remove harmful content before it reaches a wider audience. Automated filtering would also free up human moderators to focus on more complex cases and reduce the risk that harmful content is flagged and taken down too late.

- Human Review with Specialized Training: While AI is invaluable, human oversight remains crucial. Content moderators should undergo specialized training to recognize and address complex issues, such as nuanced hate speech, misinformation, or incitement. Training programs should focus on empathy, cultural sensitivity, and ethical considerations to ensure responsible moderation practices. This includes detailed training on the platform’s policies, legal frameworks, and ethical considerations regarding content moderation.

Designing Transparent and Consistent Restriction Policies

Transparency in restriction policies is essential for building trust. Users should have clear, accessible guidelines on what constitutes problematic content, and the rationale behind specific restrictions. This involves developing a more accessible FAQ section, creating clear and concise policy documents, and providing user-friendly explanations of the platform’s content guidelines.

- Detailed Policy Explanations: Creating a comprehensive and user-friendly document outlining TikTok’s content guidelines is crucial. This should include clear definitions of problematic content categories (hate speech, misinformation, harassment, etc.) and specific examples. This document should be regularly reviewed and updated to reflect evolving societal norms and technological advancements. This would give users a clearer understanding of what is and isn’t allowed on the platform.

- Appeals Process Enhancements: Users should have a clear and efficient appeals process for content restrictions. The appeals process should be transparent, allowing users to understand the reasoning behind a restriction and providing opportunities for dialogue with the platform. The appeals process should be easily accessible and understandable, minimizing bureaucratic hurdles and maximizing user satisfaction.

Addressing User Concerns Regarding Restrictions

A crucial aspect of improving the user experience is directly addressing concerns about content restrictions. Creating a platform for feedback, incorporating user input into policy revisions, and actively monitoring and responding to user complaints can significantly improve user satisfaction and trust.

- Dedicated Feedback Channels: Establishing dedicated channels for users to provide feedback on restrictions and content guidelines can be instrumental. These channels could include dedicated email addresses, online forums, or in-app feedback mechanisms. Users should feel comfortable expressing their concerns and perspectives without fear of reprisal. This could be a dedicated section within the TikTok app or a dedicated website for user feedback.

- Transparency in Enforcement: Providing clear explanations of the reasons behind content restrictions, even when automated, is essential. Users should be informed of the specific policies violated and the rationale behind the action. This transparency fosters trust and helps users understand the platform’s decision-making process.

Improving User Experience Regarding Content Restrictions

User experience with content restrictions is crucial for maintaining user engagement and trust. Strategies to improve this experience should focus on clarity, efficiency, and user control.

- Simplified Restriction Notifications: Notifications regarding content restrictions should be clear, concise, and easy to understand. Users should receive timely notifications about restrictions, including a clear explanation of the violation. This could be implemented using a notification system within the TikTok app, along with helpful links to relevant policies.

- User Control Mechanisms: Providing users with tools to manage their content and interactions can enhance the overall user experience. This could include options for blocking users or reporting content, enabling users to more actively participate in the platform’s content moderation process. For example, users should be able to easily report content they find problematic and be notified if their report is successful or not, and why.
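To make the notification idea concrete, here is a minimal sketch of a restriction notice that states the policy violated, gives a plain-language reason, links to the policy, and mentions the appeal option. The `RestrictionNotice` fields, the wording, and the `example.com` URL are all hypothetical placeholders, not an existing TikTok format.

```python
from dataclasses import dataclass


@dataclass
class RestrictionNotice:
    content_id: str
    policy: str
    explanation: str
    policy_url: str  # placeholder link, not a real policy page
    can_appeal: bool = True


def render_notice(notice: RestrictionNotice) -> str:
    """Build a short, plain-language message for the affected user."""
    lines = [
        f"Your content {notice.content_id} was restricted.",
        f"Policy: {notice.policy}",
        f"Why: {notice.explanation}",
        f"Read the policy: {notice.policy_url}",
    ]
    if notice.can_appeal:
        lines.append("You can appeal this decision.")
    return "\n".join(lines)


notice = RestrictionNotice(
    content_id="v123",
    policy="Harassment",
    explanation="The caption targets a named individual with insults.",
    policy_url="https://example.com/policies/harassment",
)
print(render_notice(notice))
```

Carrying the policy name and reason as required fields means a notice cannot be built without an explanation, which is one simple way to enforce the transparency goals described above.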

Potential Solutions Table

| Solution | Description | Impact on Algorithm | Impact on Policies |
|---|---|---|---|
| Automated Filtering | AI-powered detection and flagging of problematic content | May alter content discovery by removing problematic posts | Reinforces existing policies, potentially prompting updates |
| Specialized Training | Training moderators on complex content issues | May not directly impact algorithm, but improves content moderation | Enhances policy enforcement, potentially leading to stricter standards |
| Detailed Policy Explanations | Comprehensive, user-friendly policy document | May slightly affect content creation based on clear guidelines | Increases transparency, enabling user understanding |
| Appeals Process Enhancements | Improved appeals process with clear explanation | May lead to adjustments in how restrictions are handled | Demonstrates commitment to fairness and accountability |

Concluding Thoughts

In conclusion, TikTok’s approach to restricting accounts for problematic content is a complex issue with significant implications for users and creators. While aiming to maintain a safe platform, the system faces challenges related to bias, transparency, and user experience. Addressing these issues requires a multifaceted approach, involving improvements to content moderation processes, clearer guidelines, and a more consistent application of policies.

Ultimately, a balance between safety and freedom of expression is crucial for a healthy and thriving platform.