Voice technology and speech analysis for mental health is a rapidly evolving field, raising critical questions about risk, privacy, and how we use voice data to understand and support mental well-being. From mood tracking to risk assessment, voice analysis has considerable potential, but it also raises serious ethical and privacy concerns. This article examines the relationship between voice technology, speech patterns, mental health, and the need for robust privacy protections.

This article will delve into the technical aspects of voice-activated devices, examining their capabilities in monitoring emotional states and identifying potential risks. We’ll also explore the ethical implications, including consent procedures and data security protocols, to ensure responsible use of this powerful technology. Finally, we’ll analyze the potential societal impacts, considering both benefits and drawbacks, and highlighting the importance of mitigating biases within voice recognition systems.

Voice Technology and Mental Health

Voice-activated devices are increasingly integrated into our daily lives, offering convenience and accessibility. Beyond simple tasks, these technologies have the potential to play a crucial role in mental health monitoring, offering early detection and intervention. This section looks at the possibilities and ethical considerations surrounding the use of voice data for assessing emotional states.

Voice-activated devices can analyze vocal patterns to detect subtle changes indicative of emotional states. This analysis, when combined with other factors, can potentially serve as a powerful tool in mental health care. For example, changes in vocal pitch, volume, or speed can reflect underlying emotional shifts.

Voice technology’s ability to analyze speech patterns raises concerns about mental health risks and privacy. As with any software that handles sensitive data, voice analysis tools need robust safeguards to protect security and data integrity. These technologies hold great promise, but their ethical implications need careful consideration to ensure responsible use.

Methods for Tracking Mood and Emotional States

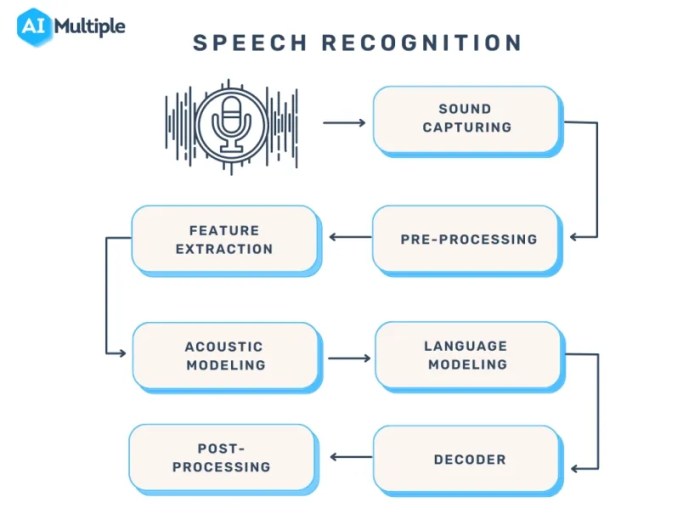

Voice-activated devices can track mood and emotional states by analyzing various vocal parameters. Sophisticated algorithms analyze speech characteristics like pitch, intensity, tempo, and pauses. These parameters are then correlated with pre-existing emotional profiles to identify patterns associated with specific emotional states.
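As a rough illustration of the parameters mentioned above, the sketch below computes frame-level intensity, a pause ratio, and a crude pitch estimate from raw audio samples. It uses a synthetic tone instead of real speech, and the function names, the silence threshold, and the zero-crossing pitch heuristic are all assumptions made for this example; production systems typically rely on more robust pitch trackers.

```python
import math

def frame_rms(samples, frame_len):
    """Root-mean-square energy of each non-overlapping frame."""
    frames = [samples[i:i + frame_len]
              for i in range(0, len(samples) - frame_len + 1, frame_len)]
    return [math.sqrt(sum(x * x for x in f) / frame_len) for f in frames]

def pause_ratio(rms_values, silence_threshold):
    """Fraction of frames whose energy falls below an assumed silence threshold."""
    if not rms_values:
        return 0.0
    silent = sum(1 for r in rms_values if r < silence_threshold)
    return silent / len(rms_values)

def zero_crossing_pitch(samples, sample_rate):
    """Very rough pitch estimate: a periodic signal crosses zero twice per cycle."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    duration = len(samples) / sample_rate
    return crossings / (2 * duration)

# Synthetic input: 0.5 s of a 200 Hz tone followed by 0.5 s of silence.
SAMPLE_RATE = 8000
tone = [0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE)
        for n in range(SAMPLE_RATE // 2)]
silence = [0.0] * (SAMPLE_RATE // 2)
signal = tone + silence

rms = frame_rms(signal, frame_len=400)
features = {
    "mean_intensity": sum(rms) / len(rms),
    "pause_ratio": pause_ratio(rms, silence_threshold=0.01),
    "pitch_hz": zero_crossing_pitch(tone, SAMPLE_RATE),
}
print(features)
```

Features like these would only be inputs to a later model; on their own they say nothing about a person's emotional state.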

Examples of Applications Monitoring Vocal Patterns

Numerous applications leverage voice data to monitor potential distress signals. For instance, some apps use voice analysis to identify potential signs of depression, anxiety, or stress. These apps can analyze vocal patterns and provide users with feedback or recommendations to manage their emotional well-being. Another example is in crisis hotlines, where voice analysis tools can assist in identifying individuals experiencing heightened emotional distress.

This helps in quickly directing them to appropriate support resources.

Ethical Implications of Using Voice Data

The use of voice data for mental health assessments raises significant ethical considerations. Privacy concerns are paramount, and rigorous data security measures are essential. Furthermore, the potential for misinterpretation or bias in the algorithms needs careful consideration. The accuracy and reliability of voice-based assessments must be established, and the potential for stigmatization or discrimination must be addressed.

Ensuring User Consent and Data Privacy

Protecting user consent and data privacy is crucial in any application involving personal data. Transparency about how voice data will be used, along with explicit consent, must be mandatory. Data encryption and secure storage protocols are essential to prevent unauthorized access. Clear guidelines on data retention and disposal are necessary to ensure privacy.

Comparative Analysis of Voice-Based Mental Health Monitoring Systems

| System | Strengths | Weaknesses |
|---|---|---|
| System A | Utilizes advanced machine learning algorithms for accurate vocal pattern recognition. Offers detailed reports on emotional states. | Requires extensive data training for accurate results; potential for bias if training data isn’t diverse enough. High cost of implementation. |
| System B | Relatively low-cost implementation; user-friendly interface. Provides immediate feedback on emotional states. | Accuracy may be lower compared to more advanced systems. May not capture the full spectrum of emotional nuances. |
| System C | Integrates with existing mental health platforms; allows for seamless data sharing with healthcare providers. Promotes user engagement. | Interoperability with different platforms may be challenging. Potential for data silos if not properly managed. |

This table provides a comparative overview of different voice-based mental health monitoring systems. Each system has its own strengths and weaknesses, and the optimal choice depends on specific needs and resources.

Speech Analysis and Risk Assessment

Using speech analysis to identify individuals at risk of mental health conditions is a rapidly evolving field. This method offers a non-invasive, accessible, and potentially early detection approach, complementing traditional diagnostic methods. By analyzing subtle acoustic and linguistic patterns in speech, researchers aim to develop predictive models capable of identifying individuals at risk of emotional distress or cognitive decline.

The hope is to intervene early, enabling preventative measures and improving overall well-being.

Potential of Speech Analysis for Risk Identification

Speech analysis holds significant promise for identifying individuals at risk of various mental health conditions. Acoustic features, such as pitch, intonation, and speech rate, can reflect emotional states and cognitive processes. For example, decreased speech rate and increased pauses may indicate anxiety or depression, while a heightened pitch and rapid speech could signal agitation or mania. This approach allows for continuous monitoring and assessment, offering a unique opportunity for early intervention.

Identifying Linguistic Patterns

Linguistic patterns indicative of emotional distress or cognitive decline are complex and multifaceted. Researchers are exploring various linguistic features, including sentence structure, word choice, and grammatical errors. For instance, individuals experiencing anxiety may exhibit increased use of negative emotional words, while those experiencing cognitive decline might display difficulty with complex sentence structures and word-finding problems. Recognizing these subtle nuances through sophisticated algorithms is key to developing accurate predictive models.
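To make the idea of linguistic features concrete, here is a minimal sketch that derives three simple measures from a transcript: the share of negative emotional words, lexical variety (type-token ratio), and mean sentence length. The word list is a hypothetical, deliberately tiny lexicon invented for this example, and the function name is an assumption; real research would use validated lexicons and far richer features.

```python
import re

# Hypothetical, deliberately tiny lexicon; a real study would use a validated one.
NEGATIVE_WORDS = {"afraid", "worried", "hopeless", "tired", "alone", "scared"}

def linguistic_features(transcript):
    """Compute a few simple word-level and sentence-level measures."""
    sentences = [s for s in re.split(r"[.!?]+", transcript) if s.strip()]
    words = re.findall(r"[a-z']+", transcript.lower())
    if not words:
        return {"negative_ratio": 0.0, "type_token_ratio": 0.0,
                "mean_sentence_length": 0.0}
    negative = sum(1 for w in words if w in NEGATIVE_WORDS)
    return {
        "negative_ratio": negative / len(words),         # share of negative words
        "type_token_ratio": len(set(words)) / len(words),  # lexical variety
        "mean_sentence_length": len(words) / len(sentences),
    }

sample = "I am worried. I am tired and I feel alone."
result = linguistic_features(sample)
print(result)
```

Counts like these are sensitive to topic, dialect, and language proficiency, so they would need the contextual checks discussed later in this article before being used in any assessment.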

Building Predictive Models

Constructing predictive models from speech patterns involves several key steps. First, large datasets of speech samples from individuals with and without mental health conditions are gathered. These samples are then carefully annotated to identify specific linguistic features associated with each condition. Next, machine learning algorithms are employed to analyze these features and learn patterns indicative of risk.

This process involves model training, validation, and testing to ensure accuracy and reliability. Examples of models include Support Vector Machines (SVM) and Recurrent Neural Networks (RNNs). The final step involves refining the model and ensuring it can generalize well to new, unseen data.
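The train, validate, and test workflow described above can be sketched in a few lines. To keep the example dependency-free, it swaps in a nearest-centroid classifier instead of an SVM or RNN, and it runs on synthetic feature values with made-up class separation. The feature names, labels, and split ratios are assumptions for illustration only.

```python
import random

def split_dataset(rows, train=0.6, val=0.2, seed=0):
    """Shuffle and split rows into train, validation, and test partitions."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    n_train = int(len(rows) * train)
    n_val = int(len(rows) * val)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]

def fit_centroids(rows):
    """Average the feature vectors of each label."""
    sums, counts = {}, {}
    for features, label in rows:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: dist(centroids[label]))

def accuracy(centroids, rows):
    """Share of rows whose predicted label matches the annotated label."""
    return sum(predict(centroids, f) == y for f, y in rows) / len(rows)

# Synthetic data: (speech_rate, pause_ratio) pairs with invented class separation.
rng = random.Random(42)
data = [([rng.gauss(4.5, 0.3), rng.gauss(0.15, 0.03)], "control") for _ in range(50)]
data += [([rng.gauss(3.0, 0.3), rng.gauss(0.35, 0.03)], "at_risk") for _ in range(50)]

train_rows, val_rows, test_rows = split_dataset(data)
model = fit_centroids(train_rows)           # training
val_acc = accuracy(model, val_rows)         # validation, used for tuning choices
test_acc = accuracy(model, test_rows)       # final check on unseen data
print(len(train_rows), len(val_rows), len(test_rows), val_acc, test_acc)
```

Real speech data is far less cleanly separated than this synthetic set, which is why the held-out test partition matters: it is the only estimate of how the model behaves on speakers it has never seen.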

Comparison of Speech Analysis Techniques

Different speech analysis techniques offer varying degrees of effectiveness. Acoustic analysis, focusing on measurable features like pitch and intensity, can be relatively straightforward to implement. However, it might not capture the nuanced complexities of human language. Linguistic analysis, on the other hand, considers the structure and content of speech, offering a more comprehensive view. Hybrid approaches combining acoustic and linguistic features often yield the best results.

The optimal technique will depend on the specific mental health condition being assessed.

Factors Influencing Speech Patterns

- Emotional State: Anxiety, depression, and other emotional states can significantly impact speech patterns. Increased hesitation, altered pitch, and changes in speaking rate are common indicators.

- Cognitive Function: Cognitive decline, including dementia and Alzheimer’s disease, often manifests in speech patterns. Difficulty with word retrieval, impaired sentence structure, and reduced fluency are frequently observed.

- Cultural Background: Cultural norms and communication styles can influence speech patterns. Careful consideration of cultural factors is crucial to avoid misinterpretations and ensure accurate risk assessment.

- Medical Conditions: Physical conditions like Parkinson’s disease and stroke can also impact speech. Distinguishing between these factors and mental health conditions requires careful consideration.

- Language Proficiency: Individuals with varying levels of language proficiency may exhibit different linguistic patterns. These differences need to be accounted for in the analysis to avoid misinterpretations.

| Factor | Potential Impact on Risk Assessment |
|---|---|
| Emotional State | Increased hesitation, altered pitch, changes in speaking rate can indicate emotional distress |
| Cognitive Function | Difficulty with word retrieval, impaired sentence structure, reduced fluency may indicate cognitive decline |
| Cultural Background | Varied communication styles can lead to misinterpretations if not considered |
| Medical Conditions | Distinguishing between physical and mental health conditions is critical for accurate assessment |
| Language Proficiency | Differences in language proficiency can affect linguistic patterns and require careful analysis |

Mental Health and Privacy Concerns

Voice technology, particularly when applied to mental health, presents a delicate balance between potentially life-saving interventions and the safeguarding of personal privacy. The intimate nature of voice data raises crucial questions about the ethical and legal frameworks necessary to ensure responsible use and protect vulnerable individuals. This necessitates a comprehensive understanding of the risks to privacy, the types of sensitive information that can be gleaned from voice data, and the measures required to safeguard it.

The analysis of voice data for mental health purposes holds significant promise for early detection and intervention. However, the potential for misuse and breaches of personal privacy demands robust safeguards. Protecting user confidentiality is paramount to ensuring the trust and widespread adoption of this technology. This includes establishing clear ethical guidelines and implementing strong data security protocols.

Potential Risks to Personal Privacy

The inherent sensitivity of voice data, especially when reflecting emotional states, creates a significant risk to personal privacy. Voice recordings can reveal a wealth of information about an individual’s mental well-being, including their mood, stress levels, anxiety, and even suicidal ideation. Furthermore, voice data can be linked to other personal information, potentially creating a detailed and sensitive profile of the user.

This raises concerns about potential misuse by unauthorized individuals or institutions. Examples of such misuse include discriminatory practices, targeted advertising, or even blackmail.

Sensitive Information Revealed Through Voice Data

Voice data can expose a variety of sensitive information that could potentially harm individuals if mishandled. These include:

- Emotional state: Vocal tone, pitch, and speed can reflect underlying emotional states, ranging from happiness to sadness, anxiety, and even anger. This can provide insights into an individual’s emotional well-being, but also raises concerns about the potential for misinterpretation or misuse.

- Mental health conditions: Specific verbal patterns, speech impediments, and the content of conversations can hint at the presence of mental health conditions like depression, anxiety, or PTSD. However, these signals are not a definitive diagnosis, and caution is crucial to avoid misinterpretations.

- Personal details: Voice data can reveal personal details such as location, relationships, and lifestyle choices, potentially exposing an individual to unwanted attention or discrimination.

- Suicidal ideation: The content of conversations can sometimes reveal suicidal thoughts or intentions. The responsible use of voice data in mental health contexts necessitates careful attention to these indicators and immediate action if necessary.

Privacy Protection Measures for Voice Data

Several measures are necessary to protect user privacy when analyzing voice data for mental health purposes. These include:

- Data anonymization and pseudonymization: Transforming identifiable information into non-identifiable data, or replacing real names with unique identifiers, to protect individual privacy. This is a crucial step in making it harder to link voice data to personal identities.

- Robust data encryption: Using strong encryption protocols to protect voice data from unauthorized access and ensure confidentiality during storage and transmission. This includes using industry-standard encryption algorithms.

- Secure storage systems: Employing secure data storage systems that limit access to authorized personnel only. This should include multiple layers of security to prevent breaches and data leaks.

- User consent and control: Obtaining informed consent from users before collecting and analyzing their voice data. Users should have the ability to control the use of their data and access or correct any inaccuracies.

Data Encryption and Security Protocols

Protecting voice data necessitates the implementation of strong encryption and security protocols. The following table illustrates some commonly used methods:

| Protocol | Description | Security Considerations |
|---|---|---|
| Advanced Encryption Standard (AES) | A widely used symmetric encryption algorithm. | Strong encryption but requires secure key management. |
| Rivest-Shamir-Adleman (RSA) | An asymmetric encryption algorithm. | Secure key exchange but computationally more intensive. |
| Transport Layer Security (TLS) | A protocol for secure communication over networks. | Ensures confidentiality and integrity of data transmission. |
| Hashing algorithms (e.g., SHA-256) | Used to create unique fingerprints of data. | Crucial for data integrity and verification. |

Ethical Guidelines for Using Voice Data in Mental Health Research

Adhering to ethical guidelines is essential to ensure the responsible use of voice data in mental health research. These include:

- Informed consent: Users must be fully informed about how their voice data will be collected, used, and stored, and they must explicitly consent to its use.

- Data minimization: Only collect and analyze the minimum amount of voice data necessary for the research purpose.

- Confidentiality and anonymity: Protecting the confidentiality and anonymity of participants is crucial.

- Data security: Implementing robust security measures to protect voice data from unauthorized access, use, or disclosure.

- Transparency: Being transparent about the research process and its potential implications.

Voice Technology and Societal Impact

Voice technology is rapidly integrating into various aspects of our lives, and its influence on mental health is a burgeoning area of concern and opportunity. From virtual assistants to telehealth platforms, voice-based interactions are becoming increasingly commonplace. Understanding the potential ramifications of this technology on mental health trends, accessibility, and societal impact is crucial for responsible development and deployment.

Voice technology presents both exciting possibilities and potential pitfalls for mental health support. Its ability to facilitate easier access to resources, coupled with the potential for bias in assessment, requires careful consideration. Addressing these challenges will be vital in ensuring that this powerful technology is used to promote, not hinder, mental well-being.

Impact on Mental Health Trends and Support Systems

Voice-based interaction with mental health support systems could significantly reshape the landscape of care. Increased accessibility through mobile applications or voice-activated platforms could reach individuals who are geographically isolated, financially constrained, or hesitant to seek in-person help. This broadened access could lead to earlier intervention and potentially mitigate the impact of mental health crises. However, the potential for increased reliance on automated systems could also create a dependence that undermines the importance of human interaction and professional guidance.

Role of Voice Technology in Fostering Greater Accessibility

Voice technology holds the promise of revolutionizing mental health accessibility. Individuals with physical limitations, speech impediments, or those who prefer not to interact face-to-face could benefit greatly from voice-activated support systems. Furthermore, geographically remote communities could gain access to professionals and resources they previously lacked. This broadened reach could result in more rapid diagnoses, early interventions, and reduced stigmas associated with mental health issues.

Telehealth platforms, driven by voice technology, could make mental health professionals available to a wider range of people, reducing wait times and increasing accessibility.

Potential Biases in Voice Recognition Systems

Voice recognition systems, while increasingly sophisticated, are not immune to bias. These systems are trained on vast datasets, and if those datasets reflect existing societal prejudices, the systems will perpetuate them. This could lead to inaccurate assessments of mental health conditions or the misdiagnosis of individuals from underrepresented groups. For example, a voice recognition system trained primarily on data from one demographic might misinterpret the speech patterns of another, leading to skewed results and unequal treatment.

Strategies for Mitigating Biases in Voice Recognition Systems

Addressing biases in voice recognition systems requires a multi-faceted approach. First, the datasets used for training these systems must be diverse and representative of the population they aim to serve. Second, the algorithms used for voice recognition should be rigorously tested for bias and continuously refined. Third, transparent guidelines and protocols for the use of voice-based mental health tools should be established to ensure fair and equitable access.

Continuous monitoring and evaluation of these systems are essential to ensure their unbiased operation and to adapt to evolving societal needs. This requires collaboration among researchers, developers, and mental health professionals.
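One way to make "rigorously tested for bias" concrete is to compare error rates across demographic groups. The sketch below computes the false positive rate per group and the gap between the best and worst group. The group names, the evaluation records, and the choice of false positive rate as the metric are assumptions for illustration; a real audit would look at several metrics and much larger samples.

```python
from collections import defaultdict

def false_positive_rates(records):
    """records: iterable of (group, actual, predicted) with boolean labels.

    Returns the share of truly negative cases flagged as positive, per group.
    """
    negatives = defaultdict(int)
    false_pos = defaultdict(int)
    for group, actual, predicted in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items() if n > 0}

def max_disparity(rates):
    """Gap between the highest and lowest per-group rate."""
    return max(rates.values()) - min(rates.values())

# Invented evaluation results: every speaker here is truly "not at risk".
records = (
    [("group_a", False, True)] * 1 + [("group_a", False, False)] * 9 +
    [("group_b", False, True)] * 3 + [("group_b", False, False)] * 7
)

rates = false_positive_rates(records)
gap = max_disparity(rates)
print(rates, gap)
```

In this invented data the system wrongly flags speakers from the second group three times as often as the first, which is the kind of disparity that continuous monitoring is meant to surface.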

Potential Societal Benefits and Drawbacks

| Societal Benefit | Societal Drawback |
|---|---|
| Increased accessibility to mental health resources | Potential for over-reliance on automated systems, reducing the importance of human interaction |
| Faster intervention and earlier diagnosis | Risk of misdiagnosis due to biases in voice recognition systems |
| Reduced stigma associated with mental health issues | Data privacy concerns related to voice-based interactions |
| Enhanced support for vulnerable populations | Potential for exacerbating existing inequalities if not implemented carefully |

Voice technology offers the potential to revolutionize mental health support, but its responsible and equitable application is critical to realizing its full benefit.

Voice Technology and Data Security

Voice technology, particularly in mental health applications, raises critical concerns about data security. The sensitive nature of the data collected necessitates robust protocols for storage, transmission, and analysis to safeguard user privacy. Maintaining confidentiality and preventing unauthorized access is paramount to building trust and encouraging responsible use of these technologies.

Protecting voice data involves more than just technical measures; it requires a holistic approach that considers the entire data lifecycle, from collection to disposal. This includes not only the technical security protocols but also ethical considerations, user education, and legal compliance. A strong security framework must be adaptable to evolving threats and technological advancements.

Security Protocols for Voice Data

Robust security protocols are crucial for safeguarding voice data related to mental health. These protocols need to address both the storage and transmission of the data. Strong encryption techniques, access controls, and secure data centers are essential components of a comprehensive security strategy.

Analyzing speech patterns through voice technology raises serious concerns about mental health risks and privacy, and the potential for misuse is considerable. Like other technological advances, voice analysis can be used for good or ill, so the ethical implications need careful consideration.

- Encryption: End-to-end encryption is a fundamental security measure. It ensures that only authorized parties can access the data, protecting it from interception during transmission. This is critical for voice data, particularly when it’s being transmitted across networks or stored in cloud environments.

- Access Controls: Implementing strict access controls limits who can access voice data. This includes roles and permissions, with only authorized personnel having access to specific data sets. This ensures that only individuals with a legitimate need to access the data can do so.

- Secure Data Centers: Storing voice data in secure data centers with physical security measures, such as controlled access, surveillance systems, and fire suppression, is vital. This helps prevent physical breaches and protects the data from environmental hazards.
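The access-control item above can be illustrated with a small role-based permission check. The role names, permission strings, and the in-memory store are hypothetical and chosen only for this sketch; a deployed system would typically delegate this to an identity provider and log every access.

```python
from dataclasses import dataclass

# Hypothetical roles and permissions, defined only for this example.
ROLE_PERMISSIONS = {
    "clinician": {"read_transcript", "read_risk_score"},
    "researcher": {"read_risk_score"},
    "support_engineer": set(),
}

@dataclass(frozen=True)
class User:
    name: str
    role: str

def is_allowed(user, action):
    """Unknown roles get no permissions (deny by default)."""
    return action in ROLE_PERMISSIONS.get(user.role, set())

def read_transcript(user, recording_id, store):
    """Return a transcript only if the caller's role permits it."""
    if not is_allowed(user, "read_transcript"):
        raise PermissionError(f"{user.role} may not read transcripts")
    return store[recording_id]

transcripts = {"rec-001": "example transcript text"}
clinician = User("Dana", "clinician")
researcher = User("Sam", "researcher")

print(read_transcript(clinician, "rec-001", transcripts))
print(is_allowed(researcher, "read_transcript"))
```

Denying by default means that a newly added role has no access until someone explicitly grants it, which is usually the safer failure mode for sensitive data.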

Data Anonymization and De-identification

Data anonymization and de-identification are essential for protecting user privacy in speech analysis. These processes remove or mask identifying information, making it much harder to link the data back to specific individuals. This is critical for reducing the risk of re-identification and maintaining confidentiality.

Analyzing voice patterns for mental health risks raises privacy concerns, especially as voice technology becomes more prevalent. As voice analysis for mental health matures, its implications for individual privacy need continued attention.

- Data Masking: Data masking techniques replace sensitive information with non-sensitive data, while preserving the integrity of the data for analysis. This helps protect sensitive information while maintaining the usefulness of the data.

- Pseudonymization: Pseudonymization replaces identifying information with unique identifiers, allowing for data analysis without revealing the identity of the individuals. This method balances data utility with privacy concerns.

- Data Aggregation: Aggregating data from multiple users can reduce the risk of re-identification, particularly in large datasets. However, care must be taken to avoid disclosing any patterns that could potentially reveal individual information.
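Two of the techniques in this list, pseudonymization and aggregation, can be sketched together. The example replaces user identifiers with a keyed hash (HMAC-SHA256) and then reports group averages only when a group has enough members. The key, field names, and minimum group size are assumptions for illustration; note also that pseudonymized data can still be re-identified by anyone holding the key.

```python
import hashlib
import hmac
from collections import defaultdict

# Assumption: in practice this key would live in a secrets manager, never in source code.
PSEUDONYM_KEY = b"example-key-for-illustration-only"

def pseudonymize(user_id, key=PSEUDONYM_KEY):
    """Derive a stable pseudonym from a user ID using a keyed hash."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

def aggregate_scores(records, min_group_size=3):
    """Average scores per region, suppressing groups too small to report safely."""
    groups = defaultdict(list)
    for record in records:
        groups[record["region"]].append(record["stress_score"])
    return {
        region: sum(scores) / len(scores)
        for region, scores in groups.items()
        if len(scores) >= min_group_size
    }

raw = [
    {"user_id": "alice@example.com", "region": "north", "stress_score": 0.2},
    {"user_id": "bob@example.com", "region": "north", "stress_score": 0.4},
    {"user_id": "carol@example.com", "region": "north", "stress_score": 0.6},
    {"user_id": "dave@example.com", "region": "south", "stress_score": 0.9},
]

# Replace the direct identifier with a pseudonym before any analysis.
pseudonymized = [
    {"pid": pseudonymize(r["user_id"]), "region": r["region"],
     "stress_score": r["stress_score"]}
    for r in raw
]
summary = aggregate_scores(pseudonymized)
print(summary)
```

The single-member "south" group is dropped from the summary because publishing its average would reveal one person's score.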

Methods for Securing Voice Data

Several methods can be used to secure voice data during storage and transmission. These methods should be integrated into the overall system design.

- Hashing: Hashing algorithms create fixed-size fingerprints of data. A hash does not prevent tampering, but comparing fingerprints makes any alteration detectable, so it can be used to verify data integrity.

- Digital Signatures: Digital signatures provide authentication and non-repudiation, verifying the origin and integrity of the data.

- Secure Protocols: Secure protocols, such as HTTPS, are crucial for transmitting voice data over networks. These protocols encrypt the data during transmission.
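The integrity checks in this list can be sketched with Python's standard library. The example computes a SHA-256 fingerprint and a keyed tag, then shows that a modified recording fails verification. Because the standard library has no asymmetric signature scheme, HMAC is used here as a stand-in: it provides integrity and authenticity between parties sharing a key, but not the non-repudiation of a true digital signature. The key and payloads are placeholders.

```python
import hashlib
import hmac

def fingerprint(data: bytes) -> str:
    """SHA-256 digest of the data, as a hex string."""
    return hashlib.sha256(data).hexdigest()

def sign(data: bytes, key: bytes) -> str:
    """Keyed tag (HMAC-SHA256) over the data."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()

def verify(data: bytes, key: bytes, tag: str) -> bool:
    """Constant-time comparison of the expected and supplied tags."""
    return hmac.compare_digest(sign(data, key), tag)

# Placeholder key and payloads for illustration only.
key = b"shared-secret-for-illustration"
recording = b"\x00\x01\x02 raw audio bytes"
tampered = b"\x00\x01\x03 raw audio bytes"

stored_fingerprint = fingerprint(recording)
tag = sign(recording, key)

print(fingerprint(recording) == stored_fingerprint)  # unchanged data matches
print(fingerprint(tampered) == stored_fingerprint)   # altered data does not
print(verify(recording, key, tag), verify(tampered, key, tag))
```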

Security Breaches and Vulnerabilities

Potential security breaches can compromise voice data, leading to privacy violations. Understanding these potential breaches is crucial for designing robust security systems.

| Type of Breach | Description |
|---|---|
| Unauthorized Access | Gaining access to voice data without authorization. |
| Data Tampering | Altering or corrupting voice data. |
| Data Leakage | Unintentional or intentional disclosure of voice data. |
| Malware Attacks | Infections that compromise voice data security. |
| Insider Threats | Malicious actions by authorized personnel. |

Building a Robust System for User Privacy

A robust system for protecting user privacy in voice technology applications needs a multi-faceted approach. This includes not only technical measures but also policies and procedures that ensure user awareness and control over their data.

- Clear Privacy Policies: Transparent policies outlining how voice data will be collected, used, and protected are essential.

- User Consent and Control: Users should have the right to access, modify, and delete their voice data.

- Regular Security Audits: Regular security audits help identify vulnerabilities and ensure the effectiveness of security measures.
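User consent and control can also be expressed in code. The following in-memory sketch refuses to store recordings without recorded consent, lets users export what is held about them, deletes everything when consent is withdrawn, and purges recordings past a retention window. The class name, method names, and the 30-day window are hypothetical choices for this example, not a reference to any particular product or regulation.

```python
from datetime import datetime, timedelta, timezone

class VoiceDataStore:
    """In-memory sketch of consent-gated storage with a retention window."""

    def __init__(self, retention_days=30):
        self.retention = timedelta(days=retention_days)
        self.consented = set()
        self.recordings = {}  # user_id -> list of (stored_at, payload)

    def grant_consent(self, user_id):
        self.consented.add(user_id)

    def withdraw_consent(self, user_id):
        """Withdrawing consent also deletes everything stored for the user."""
        self.consented.discard(user_id)
        self.recordings.pop(user_id, None)

    def store(self, user_id, payload, now):
        if user_id not in self.consented:
            raise PermissionError("no recorded consent for this user")
        self.recordings.setdefault(user_id, []).append((now, payload))

    def export(self, user_id):
        """Let users see what is held about them."""
        return [payload for _, payload in self.recordings.get(user_id, [])]

    def purge_expired(self, now):
        """Drop recordings older than the retention window; return how many were removed."""
        removed = 0
        for user_id, items in self.recordings.items():
            kept = [(t, p) for t, p in items if now - t <= self.retention]
            removed += len(items) - len(kept)
            self.recordings[user_id] = kept
        return removed

start = datetime(2024, 1, 1, tzinfo=timezone.utc)
store = VoiceDataStore(retention_days=30)
store.grant_consent("user-1")
store.store("user-1", b"clip-a", now=start)
store.store("user-1", b"clip-b", now=start + timedelta(days=20))
removed = store.purge_expired(now=start + timedelta(days=40))
print(removed, store.export("user-1"))
```

Passing the current time in explicitly keeps the retention logic easy to test and audit.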

Illustrative Case Studies

Voice technology is rapidly transforming mental health care, offering innovative approaches to assessment, support, and intervention. This section delves into specific case studies, exploring user experiences, potential applications, and ethical considerations. We’ll examine how speech analysis can detect early warning signs, analyze the steps in a data-driven approach, and highlight successful implementations in real-world settings.

The following case studies illustrate the diverse potential and challenges of voice technology in mental health, demonstrating how it can be integrated into existing care models and the importance of careful consideration of ethical implications.

A Case Study on Voice Technology in Mental Health Care

A hypothetical case study examines the use of a voice-activated mental health support system for adolescents. The system, accessible via smartphone, allows users to record their thoughts and feelings. Sophisticated algorithms analyze the tone, pitch, and cadence of the speech, identifying patterns indicative of potential distress. The system then proactively prompts users with coping strategies and resources.

In this scenario, user feedback emphasizes the system’s accessibility and anonymity, which encourage open communication and reduce barriers to seeking help. Users also raise privacy concerns, highlighting the need for robust data security measures.

A Fictional Case Study on Speech Analysis for Early Detection

Imagine a young adult experiencing increasing anxiety. Their speech patterns, analyzed by a voice-analysis application, reveal subtle shifts in tone and speed, indicators of escalating stress. The app alerts the user’s therapist, enabling early intervention and preventing a potential worsening of the condition. This fictional case illustrates how speech analysis can complement existing mental health assessments, providing a non-invasive and continuous monitoring tool.

Crucially, this early detection mechanism allows for more targeted interventions and support, ultimately improving treatment outcomes.

Comparative Analysis of Ethical Implications

Different case studies reveal varying ethical implications of voice technology in mental health. One study focuses on the use of voice-activated tools in group therapy settings, highlighting the potential for unintended biases in analysis due to cultural differences in speech patterns. Another examines the privacy implications of storing voice data, demonstrating the need for robust data security protocols.

The comparative analysis underscores the importance of ethical guidelines and ongoing research to mitigate potential harm and maximize benefits.

Data-Driven Approach to Analyzing Voice Data

A data-driven approach to analyzing voice data involves several key steps. First, identifying relevant speech features, such as pitch, intensity, and rhythm, is crucial. Next, the collected data is processed and cleaned, removing irrelevant noise or artifacts. Algorithms then analyze these patterns to identify potential trends. Finally, trained clinicians interpret the results, integrating them into the overall assessment of the patient.
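The steps above can be sketched as a small pipeline: clean a series of daily pitch readings, establish a personal baseline, and flag days that deviate strongly from it for clinician review. The plausibility range, baseline length, z-score threshold, and the readings themselves are invented for this example and are not clinical guidance.

```python
import statistics

def clean(readings):
    """Drop missing values and implausible pitch readings (assumed range 50-500 Hz)."""
    return [r for r in readings if r is not None and 50.0 <= r <= 500.0]

def flag_deviations(values, baseline_size=5, z_threshold=2.0):
    """Flag readings that deviate strongly from the speaker's own baseline.

    Returns (index, z_score) pairs for a clinician to review, not a diagnosis.
    """
    baseline = values[:baseline_size]
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    flagged = []
    for index, value in enumerate(values[baseline_size:], start=baseline_size):
        z = (value - mean) / stdev if stdev > 0 else 0.0
        if abs(z) >= z_threshold:
            flagged.append((index, round(z, 2)))
    return flagged

# Invented daily mean pitch readings in Hz; None and 0.0 simulate recording failures.
daily_pitch_hz = [180.0, 182.0, None, 179.0, 181.0, 178.0, 0.0, 180.5, 195.0, 181.0]

cleaned = clean(daily_pitch_hz)
flags = flag_deviations(cleaned)
print(cleaned)
print(flags)
```

Comparing each person against their own baseline, instead of a population norm, is one way to reduce the cultural and physiological confounds discussed earlier, though it does not remove them.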

Successful Implementations of Voice Technology

Several successful implementations demonstrate the positive impact of voice technology in mental health settings. One example involves a telehealth platform utilizing voice analysis to assess patients’ emotional states during virtual therapy sessions. This allows clinicians to provide timely support and adjust treatment strategies accordingly. Another instance demonstrates how voice technology is being used to provide personalized support for individuals with anxiety disorders, allowing them to practice coping mechanisms through automated feedback.

Conclusion

In conclusion, the intersection of voice technology, speech analysis, and mental health presents both exciting opportunities and significant challenges. The potential for early detection and personalized support is undeniable, but the need for robust privacy protections and ethical guidelines is equally crucial. Ultimately, a thoughtful and balanced approach is essential to harness the power of this technology while safeguarding individual well-being and privacy.